Abstract

Variable selection is fundamental when dealing with sparse signals, which contain only a few nonzero elements. Such signals arise in many signal processing areas, from high-dimensional statistical modeling to sparse signal estimation. This paper explores a new and efficient approach to modeling a system with underlying sparse parameters. The idea is to take the noisy observations and estimate the minimum number of underlying parameters with acceptable estimation accuracy. The main challenge is the non-convex optimization problem that must be solved. The reconstruction stage estimates the original sparse signal by performing variable selection through a suitable objective function. This paper introduces an objective function that recovers the true support of the underlying sparse signal while still achieving an acceptable estimation error. It is shown that the proposed method performs the best variable selection among the compared algorithms, while approaching the lowest mean squared error in almost all cases.

Notes

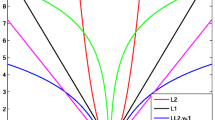

According to the discussion in [24], the penalty function of the least squares criterion needs three properties for the resulting estimator to have the oracle properties: (1) unbiasedness, (2) sparsity and (3) continuity. Lasso achieves the last two properties, but at the price of shifting the resulting estimator by a constant, thus losing unbiasedness.
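The trade-off can be seen in a minimal sketch that assumes an orthonormal design, so that each coefficient is estimated separately from its least squares value `z`. The function names and the values of `lam` and `a` are illustrative choices, not taken from the paper; the SCAD rule is the thresholding rule of [24] and stands in here for a penalty that has all three properties.

```python
def soft_threshold(z, lam):
    """Lasso rule: sparse and continuous, but shifts large z by lam (biased)."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def hard_threshold(z, lam):
    """Keep-or-kill rule: sparse and unbiased for large |z|, but discontinuous."""
    return z if abs(z) > lam else 0.0


def scad_threshold(z, lam, a=3.7):
    """SCAD rule: sparse, continuous, and unbiased once |z| > a * lam."""
    az = abs(z)
    sign = 1.0 if z >= 0 else -1.0
    if az <= 2 * lam:
        return sign * max(az - lam, 0.0)
    if az <= a * lam:
        return ((a - 1) * z - sign * a * lam) / (a - 2)
    return z


if __name__ == "__main__":
    lam = 1.0  # illustrative threshold
    for z in (0.5, 1.5, 3.0, 10.0):
        print(z, soft_threshold(z, lam), hard_threshold(z, lam), scad_threshold(z, lam))
```

For a large coefficient the soft-threshold output stays exactly `lam` below `z`, which is the constant shift mentioned above, while the SCAD output returns `z` unchanged.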

The idea of locally linear approximation was used successfully in [29] to maximize the penalized likelihood function.
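As a rough illustration of locally linear approximation, the sketch below linearizes a penalty about a current estimate and solves the resulting weighted \(L_{1}\) problem. It rests on several assumptions that are not from the paper: an orthonormal design (so the weighted problem reduces to coordinate-wise soft-thresholding), a least squares loss scaled by 1/2, and the SCAD penalty of [24] as a stand-in for the penalty being linearized. The names `scad_derivative` and `lla_step` are hypothetical.

```python
def scad_derivative(t, lam, a=3.7):
    """Derivative of the SCAD penalty at t >= 0 (stand-in penalty, assumption)."""
    if t <= lam:
        return lam
    return max(a * lam - t, 0.0) / (a - 1)


def lla_step(z, theta0, lam, a=3.7):
    """One locally linear approximation step under an orthonormal design.

    z      -- least squares coefficients
    theta0 -- current estimate about which the penalty is linearized
    Each coordinate minimizes 0.5 * (z_j - t)**2 + w_j * |t|,
    where w_j is the penalty slope at |theta0_j|.
    """
    estimate = []
    for zj, tj in zip(z, theta0):
        w = scad_derivative(abs(tj), lam, a)  # local slope of the penalty
        mag = max(abs(zj) - w, 0.0)           # weighted soft-thresholding
        estimate.append(mag if zj >= 0 else -mag)
    return estimate


if __name__ == "__main__":
    z = [5.0, 0.3, -2.0]  # illustrative least squares coefficients
    print(lla_step(z, theta0=z, lam=1.0))
```

Large coefficients receive a zero weight and pass through unshrunk, while small ones receive the full weight `lam` and are set to zero.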

References

Donoho, D.: Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1306 (2006)

Candes, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

Chen, S.S., Donoho, D.L., Saunders, M.A.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20(1), 33–61 (1998)

Tropp, J.: Greed is good: algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 50(10), 2231–2242 (2004)

Li, Y., Osher, S.: Coordinate descent optimization for \(L_{1}\) minimization with application to compressed sensing: greedy algorithm. Inverse Probl. Imaging 3(3), 487–503 (2009)

Cetin, M., Malioutov, D.M., Willsky, A.S.: A variational technique for source localization based on a sparse signal reconstruction perspective. In: Proc. ICASSP, pp. 2965–2968 (2002)

Candes, E.J., Wakin, M., Boyd, S.: Enhancing sparsity by reweighted L1 minimization. J. Fourier Anal. Appl. 14(5), 877–905 (2008)

Chartrand, R.: Exact reconstruction of sparse signals via non-convex minimization. IEEE Signal Process. Lett. 14, 707–710 (2007)

Chartrand, R., Yin, W.: Iteratively reweighted algorithms for compressive sensing. In: Proc. ICASSP, Las Vegas, pp. 3869–3872 (2008)

Miosso, C.J., Borries, R.V., Argaez, M., Velazquez, L., Quintero, C., Potes, C.M.: Compressive sensing reconstruction with prior information by iteratively reweighted least-squares. IEEE Trans. Signal Process. 57(6), 2424–2431 (2009)

Benesty, J., Gay, S.L.: An improved PNLMS algorithm. In: Proc. IEEE ICASSP, pp. 1881–1884 (2002)

Duttweiler, D.L.: Proportionate normalized least-mean-squares adaptation in echo cancellers. IEEE Trans. Speech Audio Process. 8, 508–518 (2000)

Gaensler, T., Gay, S.L., Sondhi, M.M., Benesty, J.: Double-talk robust fast converging algorithms for network echo cancellation. IEEE Trans. Speech Audio Process. 8, 656–663 (2000)

Hoshuyama, O., Gubran, R.A., Sugiyama, A.: A generalized proportionate variable step-size algorithm for fast changing acoustic environments. In: Proc. IEEE ICASSP, pp. IV-161–IV-164 (2004)

Benesty, J., Paleologu, C., Ciochina, S.: Proportionate adaptive filters from a basis pursuit perspective. IEEE Signal Process. Lett. 17(12), 985–988 (2010)

Chen, Y., Gu, Y., Hero, A.O.: Sparse LMS for system identification. In: Proc. IEEE ICASSP, pp. 3125–3128 (2009)

Gu, Y., Jin, J., Mei, S.: \(L_{0}\) norm constraint LMS algorithm for sparse system identification. IEEE Signal Process. Lett. 16(9), 774–777 (2009)

Jin, J., Gu, Y., Mei, S.: A stochastic gradient approach on compressive sensing reconstruction based on adaptive filtering framework. IEEE J. Sel. Top. Signal Process. 4(2), 409–420 (2010)

Eksioglu, E.M.: RLS adaptive filtering with sparsity regularization. In: 10th Int. Conf. on Inf. Sci., Signal Process., and their Applications, pp. 550–553 (2010)

Tibshirani, R.: Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B 58(1), 267–288 (1996)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32, 407–499 (2004)

Angelosante, D., Giannakis, G.B.: RLS-weighted Lasso for adaptive estimation of sparse signals. In: Proc. IEEE ICASSP, pp. 3245–3248 (2009)

Angelosante, D., Bazerque, J.A., Giannakis, G.B.: Online adaptive estimation of sparse signals: where RLS meets the \(L_{1}\) norm. IEEE Trans. Signal Process. 58(7), 3436–3447 (2010)

Fan, J., Li, R.: Variable selection via non-concave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001)

Yuan, M., Lin, Y.: On the Nonnegative Garotte Estimator, Technical Report. School of Industrial and Systems Engineering, Georgia Inst. of Tech, Georgia (2005)

Zou, H.: The adaptive Lasso and its oracle properties. J. Am. Stat. Assoc. 101(476), 1418–1429 (2006)

Zhao, P., Yu, B.: On model selection consistency of Lasso. J. Mach. Learn. Res. 7, 2541–2563 (2006)

Knight, K., Fu, W.: Asymptotics for Lasso-type estimators. Ann. Stat. 28, 1356–1378 (2000)

Zou, H., Li, R.: One-step sparse estimation in non-concave penalized likelihood models. Ann. Stat. 36(4), 1509–1533 (2008)

Donoho, D., Johnstone, I.: Ideal spatial adaptation by wavelet shrinkage. Biometrika 81, 425–455 (1994)

Tinati, M.A., Yousefi Rezaii, T.: Adaptive sparsity-aware parameter vector reconstruction with application to compressed sensing. In: Proc. IEEE HPCS, pp. 350–356 (2011)

Friedman, J., Hastie, T., Hofling, H., Tibshirani, R.: Pathwise coordinate optimization. Ann. Appl. Stat. 1(2), 302–332 (2007)

Ward, R.: Compressed sensing with cross validation. IEEE Trans. Inf. Theory 55(12), 5773–5782 (2009)

Boufounos, P., Duarte, M.F., Baraniuk, R.G.: Sparse signal reconstruction from noisy compressive measurements using cross validation. In: Proc. IEEE Workshop on Statistical Signal Processing, pp. 299–303 (2007)

Appendices

Appendix A

The first two terms of the Taylor series expansion of \(\mathcal P _{\tau ,\gamma } \left( {\left| {\theta _{j} } \right|} \right)\) in (7) about \(\theta _j^o \) are written as,
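As a sketch of this expansion, assuming the penalty is differentiable at \(\left| \theta _j^o \right|\) and writing \(\mathcal P'_{\tau ,\gamma }\) for its derivative (a notation introduced here for illustration), a first-order Taylor expansion takes the form

\[
\mathcal P _{\tau ,\gamma } \left( \left| \theta _{j} \right| \right) \approx \mathcal P _{\tau ,\gamma } \left( \left| \theta _j^o \right| \right) + \mathcal P'_{\tau ,\gamma } \left( \left| \theta _j^o \right| \right) \left( \left| \theta _{j} \right| - \left| \theta _j^o \right| \right).
\]

Under this form, the terms \(\mathcal P _{\tau ,\gamma } \left( \left| \theta _j^o \right| \right)\) and \(\mathcal P'_{\tau ,\gamma } \left( \left| \theta _j^o \right| \right) \left| \theta _j^o \right|\) do not depend on \(\theta _j\), so only the weighted term \(\mathcal P'_{\tau ,\gamma } \left( \left| \theta _j^o \right| \right) \left| \theta _{j} \right|\) affects the minimizer.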

Substituting the approximation (36) into (7), the approximated objective function can be considered as,

Finally, excluding the constant terms from (37), the estimator \({\hat{{\varvec{\uptheta }}}}\) is obtained as,

Appendix B

Using (16), the term \(Z^{(n)}(\mathbf{u})-Z^{(n)}(\mathbf{0})\) can be written as follows,

For notational simplicity, the rightmost term of the above equation is omitted in the following, since it remains unchanged. This gives the following:

After some manipulation, we obtain the following:

Substituting \(\mathbf{v}=\mathbf{y}-\mathbf{X}\varvec{\uptheta }\) in \((\text{B}_{1})\), we have

Since \(\lim _{n\rightarrow \infty } \frac{1}{n}\mathbf{X}^{T}\mathbf{X}=\mathbf{C}\), the second term on the right-hand side of \((\text{B}_{2})\) tends to \(\frac{1}{2}\mathbf{u}^{T}\mathbf{Cu}\) as \(n\) goes to infinity. By Slutsky's theorem and the central limit theorem, the first term on the right-hand side of \((\text{B}_{2})\) converges in distribution to a zero-mean normal distribution with covariance matrix \(\sigma ^{2}\mathbf{C}\).
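The two limits can be illustrated with a sketch of the least squares part alone. It assumes a loss scaled by \(\frac{1}{2}\) and a perturbation of the form \(\varvec{\uptheta }+\mathbf{u}/\sqrt{n}\); both are assumptions chosen to match the limit \(\frac{1}{2}\mathbf{u}^{T}\mathbf{Cu}\) stated above, not forms taken from the paper. With \(\mathbf{v}=\mathbf{y}-\mathbf{X}\varvec{\uptheta }\),

\[
\frac{1}{2}\left\| \mathbf{v}-\frac{1}{\sqrt{n}}\mathbf{Xu} \right\| _2^2 - \frac{1}{2}\left\| \mathbf{v} \right\| _2^2 = -\frac{1}{\sqrt{n}}\mathbf{u}^{T}\mathbf{X}^{T}\mathbf{v} + \frac{1}{2}\mathbf{u}^{T}\left( \frac{1}{n}\mathbf{X}^{T}\mathbf{X} \right) \mathbf{u}.
\]

The quadratic term tends to \(\frac{1}{2}\mathbf{u}^{T}\mathbf{Cu}\) because \(\frac{1}{n}\mathbf{X}^{T}\mathbf{X}\rightarrow \mathbf{C}\). If the entries of \(\mathbf{v}\) are independent with zero mean and variance \(\sigma ^{2}\), the vector \(\frac{1}{\sqrt{n}}\mathbf{X}^{T}\mathbf{v}\) has covariance \(\sigma ^{2}\frac{1}{n}\mathbf{X}^{T}\mathbf{X}\), which tends to \(\sigma ^{2}\mathbf{C}\); this is where the limiting covariance of the linear term comes from.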

About this article

Cite this article

Rezaii, T.Y., Tinati, M.A. & Beheshti, S. Sparsity aware consistent and high precision variable selection. SIViP 8, 1613–1624 (2014). https://doi.org/10.1007/s11760-012-0401-6

DOI: https://doi.org/10.1007/s11760-012-0401-6