Abstract

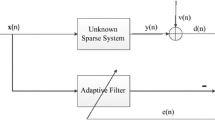

The variable step-size least-mean-square (VSSLMS) algorithm is an enhanced version of the least-mean-square (LMS) algorithm that aims to improve both the convergence rate and the mean-square error. Like other popular adaptive methods such as recursive least squares and the Kalman filter, the VSSLMS algorithm cannot exploit system sparsity. The zero-attracting VSSLMS (ZA-VSSLMS) algorithm was proposed to improve the performance of the VSSLMS algorithm in system identification when the system is sparse. It adds an \(\ell_1\)-norm penalty to the original VSSLMS cost function to exploit the sparsity of the system. In this paper, we present a convergence and stability analysis of the ZA-VSSLMS algorithm. The performance of the ZA-VSSLMS algorithm is compared with that of the standard LMS, VSSLMS, and zero-attracting LMS (ZA-LMS) algorithms in a sparse system identification setting.
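To make the idea of combining an \(\ell_1\)-norm penalty with a variable step size concrete, the following is a minimal sketch, not the authors' implementation. The abstract does not state the update equations, so the sketch assumes a step-size recursion of the form \(\mu(k+1) = \alpha\mu(k) + \gamma e^2(k)\), clipped to \([\mu_{\min}, \mu_{\max}]\), and a zero attractor \(\mu(k)\lambda\,\mathrm{sgn}(w(k))\) subtracted from the usual LMS update. All function names, parameter names, and parameter values are hypothetical and chosen only for illustration.

```python
import random


def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def za_vsslms(x, d, num_taps, mu0=0.02, mu_min=1e-3, mu_max=0.02,
              alpha=0.97, gamma=1e-3, lam=1e-3):
    """Sketch of a zero-attracting variable step-size LMS filter.

    Assumed (not taken from the abstract):
      e(k)    = d(k) - w(k)^T x(k)
      w(k+1)  = w(k) + mu(k) e(k) x(k) - mu(k) * lam * sgn(w(k))
      mu(k+1) = clip(alpha * mu(k) + gamma * e(k)^2, mu_min, mu_max)
    """
    w = [0.0] * num_taps
    buf = [0.0] * num_taps  # tap-delay line, newest sample first
    mu = mu0
    errors = []
    for xk, dk in zip(x, d):
        buf = [xk] + buf[:-1]
        y = sum(wi * xi for wi, xi in zip(w, buf))
        e = dk - y
        # LMS gradient step plus the zero attractor from the l1 penalty.
        w = [wi + mu * e * xi - mu * lam * sign(wi)
             for wi, xi in zip(w, buf)]
        # Step size grows with large errors and shrinks near convergence.
        mu = min(mu_max, max(mu_min, alpha * mu + gamma * e * e))
        errors.append(e)
    return w, errors


# Hypothetical sparse system: 16 taps, a single nonzero tap at index 4.
rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in range(5000)]
d = [(x[k - 4] if k >= 4 else 0.0) + 0.01 * rng.gauss(0.0, 1.0)
     for k in range(len(x))]
w_hat, errors = za_vsslms(x, d, num_taps=16)
```

In this toy setting the zero attractor pulls the fifteen inactive taps toward zero while the data-driven term keeps the active tap near its true value. The penalty weight `lam` trades sparsity promotion against a small bias on the nonzero tap.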

Cite this article

Jahromi, M.N.S., Salman, M.S., Hocanin, A. et al. Convergence analysis of the zero-attracting variable step-size LMS algorithm for sparse system identification. SIViP 9, 1353–1356 (2015). https://doi.org/10.1007/s11760-013-0580-9