Abstract

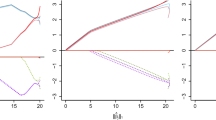

Recently, the design of group sparse regularization has drawn much attention in group sparse signal recovery problems. Two of the most popular group sparsity-inducing regularization models are \(\ell _{1,2}\) and \(\ell _{1,\infty }\) regularization. Nevertheless, neither promotes intra-group sparsity. For example, Huang and Zhang (Ann Stat 38:1978–2004, 2010) claimed that \(\ell _{1,2}\) regularization is superior to \(\ell _1\) regularization only for strongly group sparse signals. This means that intra-group sparsity is of no benefit to \(\ell _{1,2}\) regularization. Our experiments show that, with \(\ell _{1,\infty }\) regularization, recovering signals that are intra-group sparse requires more measurements than recovering those that are not. In this paper, we propose a novel group sparsity-inducing regularization defined as a mixture of the \(\ell _{1/2}\) norm and the \(\ell _{1}\) norm, referred to as \(\ell _{1/2,1}\) regularization, which overcomes these shortcomings of \(\ell _{1,2}\) and \(\ell _{1,\infty }\) regularization. We define a new null space property for \(\ell _{1/2,1}\) regularization and apply it to establish a recoverability theory for signals that are both intra-group and inter-group sparse. In addition, we introduce an iteratively reweighted algorithm to solve this model and analyze its convergence. Comprehensive experiments on simulated data show that the proposed \(\ell _{1/2,1}\) regularization is superior to \(\ell _{1,2}\) and \(\ell _{1,\infty }\) regularization.
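To make the contrast between the three penalties concrete, the following is a minimal sketch, not the authors' implementation. It assumes the common mixed-norm convention \(\Vert \varvec{x}\Vert _{p,q} = (\sum _i \Vert \varvec{x}_{G_i}\Vert _q^p)^{1/p}\) over nonoverlapping groups \(G_i\); the abstract does not state the exact definition of the \(\ell _{1/2,1}\) penalty, so reading it as \(p=1/2\), \(q=1\) is an assumption. The function name `mixed_norm`, the group layout, and the two test signals are hypothetical and chosen only for illustration.

```python
import numpy as np


def mixed_norm(x, groups, p, q):
    """Assumed convention: (sum_i ||x[G_i]||_q ** p) ** (1 / p).

    `groups` is a list of index arrays for nonoverlapping groups.
    For p < 1 this is a quasi-norm rather than a true norm.
    """
    inner = np.array([np.linalg.norm(x[g], ord=q) for g in groups])
    return float(np.sum(inner ** p) ** (1.0 / p))


# Two groups of four entries each (hypothetical layout).
groups = [np.arange(0, 4), np.arange(4, 8)]

# Both signals live in the first group and have the same l2 energy there,
# but only the first one is sparse inside the group.
sparse_in_group = np.array([1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
dense_in_group = np.array([0.5, 0.5, 0.5, 0.5, 0.0, 0.0, 0.0, 0.0])

# (p, q) pairs for the three penalties under the assumed convention.
penalties = {
    "l_{1,2}": (1.0, 2),
    "l_{1,inf}": (1.0, np.inf),
    "l_{1/2,1}": (0.5, 1),  # assumed reading of the proposed penalty
}

results = {
    name: (
        mixed_norm(sparse_in_group, groups, p, q),
        mixed_norm(dense_in_group, groups, p, q),
    )
    for name, (p, q) in penalties.items()
}

for name, (val_sparse, val_dense) in results.items():
    print(f"{name}: intra-sparse={val_sparse:.3f}, intra-dense={val_dense:.3f}")
```

Under this assumed convention, the \(\ell _{1,2}\) value is identical for the two signals, the \(\ell _{1,\infty }\) value is smaller for the intra-group dense signal, and the \(\ell _{1/2,1}\) value is smaller for the intra-group sparse signal. This toy comparison only illustrates the motivation stated in the abstract; it says nothing about recovery guarantees.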

Notes

The group structure on the signal \(\varvec{x}\) can be overlapping or nonoverlapping; for simplicity, we consider only the nonoverlapping case.

FISTA is not guaranteed to be a descent algorithm; one can instead apply the variant of FISTA proposed in [6] to further improve performance.

References

Donoho, D.: Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006)

Candès, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509 (2006)

Candès, E., Tao, T.: Near optimal signal recovery from random projections: Universal encoding strategies? IEEE Trans. Inf. Theory 52, 5406–5425 (2006)

Baraniuk, R.G.: Compressive sensing. IEEE Signal Process. Mag. 24, 118–121 (2007)

Kose, K., Gunay, O., Cetin, A.E.: Compressive sensing using the modified entropy functional. Digit. Signal Proc. 24, 63–70 (2014)

Karahanoglu, N.B., Erdogan, H.: Compressed sensing signal recovery via forward–backward pursuit. Digit. Signal Proc. 23, 1539–1548 (2013)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. Roy. Stat. Soc. B 68, 49–67 (2006)

Meier, L., Geer, S.V.D., Buhlmann, P.: The group lasso for logistic regression. J. Roy. Stat. Soc. B 70, 53–71 (2008)

Baraniuk, R.G., Cevher, V., Duarte, M.F., Hegde, C.: Model-based compressed sensing. IEEE Trans. Inf. Theory 56, 1982–2001 (2010)

Friedman, J., Hastie, T., Tibshirani, R.: A note on the group lasso and a sparse group lasso. arXiv:1001.0736 [math.ST] (2010)

Jenatton, R., Mairal, J., Obozinski, G., Bach, F.: Proximal methods for hierarchical sparse coding. J. Mach. Learn. Res. 12, 2297–2334 (2011)

Jenatton, R., Gramfort, A., Michel, V., et al.: Multiscale mining of fMRI data with hierarchical structured sparsity. SIAM J. Image Sci. 5, 835–856 (2012)

Sprechmann, P., Ramirez, I., Sapiro, G., Eldar, Y.C.: C-HiLasso: a collaborative Hierarchical sparse modeling framework. IEEE Trans. Signal Process. 59, 4183–4198 (2011)

Jenatton, R., Audibert, J.Y., Bach, F.: Structured variable selection with sparsity-inducing norms. J. Mach. Learn. Res. 12, 2777–2824 (2011)

Shuo, X., Tong, X., Ye, J.: Efficient sparse group feature selection via nonconvex optimization. In: Proceedings of the 30th International Conference on Machine Learning (ICML-13), pp 1–13 (2013)

Angshul, M., Ward, R. K.: Non-Convex Group Sparsity: Application to Color Imaging. ICASSP, pp 469–472 (2010)

Huang, J., Zhang, T., Metaxas, D.: Learning with structured sparsity. J. Mach. Learn. Res. 12, 3371–3412 (2011)

Huang, J., Zhang, T.: The benefit of group sparsity. Ann. Stat. 38, 1978–2004 (2010)

Liu, J., Ye, J.: Efficient \(\ell _1/\ell _q\) norm regularization. arXiv:1009.4766 (2010)

Mairal, J., Jenatton, R., Obozinski, G., Bach, F.: Convex and network flow optimization for structured sparsity. J. Mach. Learn. Res. 12, 2681–2720 (2011)

Simon, N., Friedman, J., Hastie, T., Tibshirani, R.: A sparse-group lasso. J. Comput. Graph. Stat. 22, 231–245 (2013)

Xu, Z., Zhang, H., Wang, Y.: \(L_{1/2}\) regularizer. Sci. China Ser. F 53, 1159–1169 (2010)

Xu, Z., Chang, X., Xu, F.: \(L_{1/2}\) regularization: a thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 23, 1013–1027 (2012)

Stojnic, M., Parvaresh, F., Hassibi, B.: On the reconstruction of block-sparse signals with an optimal number of measurements. IEEE Trans. Signal Process. 57, 3075–3085 (2009)

Zou, H., Li, R.: One-step sparse estimates in nonconcave penalized likelihood models. Ann. Stat. 36, 1509–1533 (2008)

Wright, S.J., Nowak, R.D., Figueiredo, M.A.T.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57, 2479–2493 (2009)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Image Sci. 2, 183–202 (2009)

Hunter, D., Li, R.: Variable selection using MM algorithms. Ann. Stat. 33, 1617–1642 (2005)

Deng, W., Yin, W., Zhang, Y.: Group sparse optimization by alternating direction method. Rice CAAM report TR11-06 (2011)

Acknowledgments

The authors would like to thank the editors and two reviewers for their valuable comments and suggestions, which led to a substantial improvement of this paper.

Additional information

This work was supported in part by the National Basic Research Program of China (973) (Grant No. 2013CB329404), in part by the National Natural Science Foundation of China (Grant Nos. 11401465, 91230101 and 61572393), the Fundamental Research Funds for the Central Universities (Grant No. xjj2014010), and the Projects funded by China Postdoctoral Science Foundation (Grant No. 2014M560781) and Shaanxi Postdoctoral Science Foundation.

About this article

Cite this article

Liu, S., Zhang, J., Liu, J. et al. \(\ell _{1/2,1}\) group sparse regularization for compressive sensing. SIViP 10, 861–868 (2016). https://doi.org/10.1007/s11760-015-0829-6

DOI: https://doi.org/10.1007/s11760-015-0829-6