Abstract

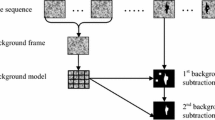

Detecting moving objects in a scene is a fundamental and critical step for many high-level computer vision tasks. However, background modeling for background subtraction is still an open and challenging problem, particularly in practical scenarios with drastic illumination changes and dynamic backgrounds. In this paper, we present a novel background modeling method, based on the circular shift operator, that is designed for complex environments. The background model is constructed by performing circular shifts on the neighborhood of each pixel, which forms a basic region unit. The foreground mask is obtained in two stages: first, the established background is subtracted from the current frame to obtain a distance map; second, a graph cut is applied to the distance map. To adapt to background changes, the background model is updated with an adaptive update rate. Experimental results on indoor and outdoor videos demonstrate the efficiency of the proposed method.
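The pipeline summarized in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the mean-absolute-difference distance, the form of the adaptive update rate, and the parameter values are all assumptions made for illustration. The fixed threshold in `is_foreground` is a simple stand-in for the graph cut stage, which is not reproduced here.

```python
from typing import List

Patch = List[float]  # flattened pixel neighborhood (hypothetical representation)


def circular_shifts(v: Patch) -> List[Patch]:
    """Return every cyclic shift of v; stacked as rows they form a circulant matrix."""
    n = len(v)
    return [v[n - k:] + v[:n - k] for k in range(n)]


def patch_distance(background: Patch, current: Patch) -> float:
    """Smallest mean absolute difference between the current patch and
    any cyclic shift of the background patch (assumed distance measure)."""
    return min(
        sum(abs(b - c) for b, c in zip(shifted, current)) / len(current)
        for shifted in circular_shifts(background)
    )


def is_foreground(distance: float, threshold: float = 25.0) -> bool:
    """Plain threshold on the distance; a stand-in for the graph cut stage."""
    return distance > threshold


def update_background(background: Patch, current: Patch, distance: float,
                      base_rate: float = 0.05, scale: float = 20.0) -> Patch:
    """Blend the current patch into the background.
    The rate shrinks as the distance grows (hypothetical adaptive rule),
    so likely-foreground patches contaminate the model less."""
    rate = base_rate / (1.0 + distance / scale)
    return [(1.0 - rate) * b + rate * c for b, c in zip(background, current)]


if __name__ == "__main__":
    bg = [10.0, 20.0, 30.0, 40.0]
    shifted_scene = [40.0, 10.0, 20.0, 30.0]     # same content, shifted by one
    new_object = [200.0, 200.0, 200.0, 200.0]    # very different content

    for patch in (shifted_scene, new_object):
        d = patch_distance(bg, patch)
        print(d, is_foreground(d))
        bg = update_background(bg, patch, d)
```

Comparing against all cyclic shifts makes the distance insensitive to small displacements of the neighborhood content, which is one plausible reading of why a circular shift model would help with dynamic backgrounds.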

Acknowledgments

This work was supported by the Shanghai University Outstanding Teachers Cultivation Fund Program A30DB1524011-21 and 2015 School Fund Project A01GY15GX48.

Cite this article

Dou, J., Qin, Q. & Tu, Z. Background subtraction based on circulant matrix. SIViP 11, 407–414 (2017). https://doi.org/10.1007/s11760-016-0975-5

DOI: https://doi.org/10.1007/s11760-016-0975-5