Abstract

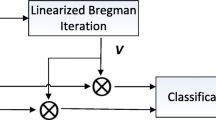

Automatic facial expression recognition has received considerable attention in computer vision and pattern recognition research. Achieving satisfactory accuracy depends heavily on deriving a robust facial expression representation. In this paper, we present an adaptive weighted fusion model (AWFM) that automatically determines optimal fusion weights. The AWFM integrates two subspaces, an unsupervised subspace and a supervised subspace, to represent and classify query samples. The unsupervised subspace is formed by differentiated expression samples generated with the help of an auxiliary neutral training set. The supervised subspace is obtained by reconstructing each class through intra-class singular value decomposition (SVD), based on a low-rank decomposition of the raw training data. Experiments on three public facial expression datasets confirm that the proposed model outperforms conventional fusion methods as well as state-of-the-art methods from the literature.
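The abstract does not spell out how the AWFM computes its weights. As a rough illustration of what "adaptive" weighting can mean, the sketch below assumes that each subspace yields a vector of class-wise reconstruction residuals (smaller means a better match) and that a subspace should receive more weight for a given query when it separates the best class more clearly from the runner-up. The min-max normalization, the gap-based confidence rule, and the function names `normalize`, `confidence`, and `adaptive_fusion` are all hypothetical choices made for this example, not the authors' formulation.

```python
from typing import Sequence

# Illustrative sketch only: the normalization and the confidence rule below are
# assumptions, not the AWFM as published.


def normalize(residuals: Sequence[float]) -> list[float]:
    """Min-max normalize class residuals to [0, 1] so two subspaces are comparable."""
    lo, hi = min(residuals), max(residuals)
    if hi == lo:
        # All classes fit equally well: no information, map everything to 0.
        return [0.0 for _ in residuals]
    return [(r - lo) / (hi - lo) for r in residuals]


def confidence(residuals: Sequence[float]) -> float:
    """Gap between the smallest and second-smallest normalized residual.

    A large gap means this subspace singles out one class clearly (assumed rule).
    """
    ordered = sorted(residuals)
    return ordered[1] - ordered[0]


def adaptive_fusion(
    res_unsup: Sequence[float], res_sup: Sequence[float]
) -> tuple[int, float, float]:
    """Fuse per-class residuals from two subspaces with weights computed per query.

    Returns (predicted_class_index, weight_unsupervised, weight_supervised).
    """
    if len(res_unsup) != len(res_sup) or len(res_unsup) < 2:
        raise ValueError("need matching residual vectors with at least two classes")
    r_u, r_s = normalize(res_unsup), normalize(res_sup)
    c_u, c_s = confidence(r_u), confidence(r_s)
    total = c_u + c_s
    # Fall back to equal weights when neither subspace is decisive.
    w_u = 0.5 if total == 0 else c_u / total
    w_s = 1.0 - w_u
    fused = [w_u * a + w_s * b for a, b in zip(r_u, r_s)]
    # The class with the smallest fused residual wins (first index on ties).
    label = min(range(len(fused)), key=fused.__getitem__)
    return label, w_u, w_s
```

For example, `adaptive_fusion([0.9, 0.2, 0.8, 0.7], [0.5, 0.45, 0.6, 0.55])` predicts class 1 and gives the unsupervised subspace the larger weight (roughly 0.68 versus 0.32), because its residuals separate class 1 from the other classes more sharply. Since the weights are recomputed for every query, a subspace that is ambiguous for a particular sample contributes less to that sample's decision, whereas a fixed global weight would have to be tuned once on validation data.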



Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant Numbers 61071199 and 61771420, the Natural Science Foundation of Hebei Province of China under Grant Number F2016203422, and the Postgraduate Innovation Project of Hebei Province under Grant Number CXZZBS2017051. The first author would like to acknowledge financial support from the China Scholarship Council, which allowed her to study at The University of Newcastle, Australia, as a visiting PhD student for 8 months.

About this article

Cite this article

Sun, Z., Hu, Zp., Chiong, R. et al. An adaptive weighted fusion model with two subspaces for facial expression recognition. SIViP 12, 835–843 (2018). https://doi.org/10.1007/s11760-017-1226-0

DOI: https://doi.org/10.1007/s11760-017-1226-0