Abstract

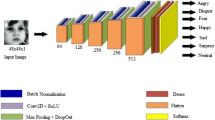

Facial expression is one of the main cues that convey an individual's emotional state. It represents the most important form of nonverbal communication, and recognizing it automatically is a challenging task in computer vision. In this work, we propose a combined deep architecture for facial expression recognition that uses appearance and geometric features, extracted separately by convolution layers and by the supervised descent method, respectively. The proposed model is trained on three public databases: the Extended Cohn-Kanade (CK+), the OULU-CASIA VIS, and the JAFFE. Because these databases contain a limited amount of data, we enlarge them by applying a data augmentation step to the original images. For comparison, two additional models, one using appearance features only and one using geometric features only, are trained on the same subset of data to show how combining the two deep architectures influences the results. In addition, a cross-database evaluation is performed to investigate how well the combined model generalizes. The results obtained reach state-of-the-art performance and improve on recent work, especially in the cross-database evaluation.
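To make the idea of combining two feature streams concrete, the following is a minimal, framework-free sketch and not the architecture of the paper itself. It assumes that fusion is done by concatenating an appearance vector with a vector of landmark coordinates and passing the result through a single dense layer with softmax; the abstract does not specify the fusion operator. The feature sizes, the number of classes, the random weights, and the names `dense`, `softmax`, and `fuse_and_classify` are all hypothetical.

```python
import math
import random


def dense(x, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(appearance, geometric, weights, bias):
    """Concatenate the two feature streams, then classify.

    Concatenation is an assumption made for illustration; other
    fusion operators (sum, weighted average) are equally possible.
    """
    fused = list(appearance) + list(geometric)
    return softmax(dense(fused, weights, bias))


# Hypothetical sizes, chosen only to keep the example small.
N_APPEARANCE = 8   # length of the appearance (convolutional) feature vector
N_GEOMETRIC = 10   # e.g. 5 landmarks stored as (x, y) pairs
N_CLASSES = 7      # assumed number of expression classes

rng = random.Random(0)
appearance = [rng.random() for _ in range(N_APPEARANCE)]
geometric = [rng.random() for _ in range(N_GEOMETRIC)]

# Untrained random weights stand in for a trained classifier head.
weights = [[rng.uniform(-0.1, 0.1) for _ in range(N_APPEARANCE + N_GEOMETRIC)]
           for _ in range(N_CLASSES)]
bias = [0.0] * N_CLASSES

probs = fuse_and_classify(appearance, geometric, weights, bias)
predicted = max(range(N_CLASSES), key=probs.__getitem__)
```

In this sketch the appearance-only and geometric-only baselines mentioned in the abstract would correspond to calling `dense` on a single stream with a weight matrix of matching width, which is what makes the comparison against the fused model straightforward.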

Cite this article

Salmam, F.Z., Madani, A. & Kissi, M. Fusing multi-stream deep neural networks for facial expression recognition. SIViP 13, 609–616 (2019). https://doi.org/10.1007/s11760-018-1388-4

DOI: https://doi.org/10.1007/s11760-018-1388-4