Abstract

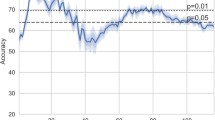

Brain activity during language perception in individuals with different musical backgrounds can be analysed using multichannel electroencephalography (EEG) signals acquired under different external conditions. This paper studies the relationship between mental processes and the perception of external stimuli in connection with previous musical education. The experimental set included 38 individuals, who were observed while listening to music and to foreign languages in four stages, each 5 min long. The proposed methodology applies digital signal processing methods, signal filtering, and statistical methods for signal segment selection and active electrode detection. Neural network and support vector machine (SVM) models are then used to classify the selected groups of linguists into groups with and without previous musical education. Using features based on EEG power in the beta and gamma frequency bands during the perception of language or music, the neural network and SVM classification models achieved mean classification accuracies of 82.9% and 82.4%, with mean cross-validation errors of 0.21 and 0.22, respectively.
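The feature and classification stages summarized above (EEG power in the beta and gamma bands, followed by a classifier evaluated by cross-validation) can be sketched as follows. This is a minimal, self-contained illustration under stated assumptions, not the authors' implementation: the band limits (13–30 Hz for beta, 30–45 Hz for gamma), the use of relative power from a plain periodogram, and all function names are hypothetical choices made for the example. A simple nearest-centroid classifier stands in for the neural network and SVM models used in the study, and the filtering, segment selection and active-electrode detection steps are omitted.

```python
import cmath
import math
from typing import Dict, List, Sequence, Tuple

# Assumed band limits in Hz (conventional values, not taken from the paper).
BANDS: Dict[str, Tuple[float, float]] = {
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def periodogram(x: Sequence[float], fs: float) -> Tuple[List[float], List[float]]:
    """One-sided power spectrum of a real signal via a direct DFT (O(N^2))."""
    n = len(x)
    mean = sum(x) / n
    xc = [v - mean for v in x]  # remove the DC offset before the transform
    freqs, power = [], []
    for k in range(n // 2 + 1):
        s = sum(xc[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(s) ** 2 / n)
    return freqs, power


def relative_band_power(x: Sequence[float], fs: float) -> Dict[str, float]:
    """Fraction of the total non-DC spectral power falling in each band."""
    freqs, power = periodogram(x, fs)
    total = sum(power[1:])
    return {
        name: sum(p for f, p in zip(freqs, power) if lo <= f < hi) / total
        for name, (lo, hi) in BANDS.items()
    }


def nearest_centroid_predict(train_x, train_y, x):
    """Stand-in classifier: assign x to the class with the closest mean vector."""
    centroids = {}
    for label in set(train_y):
        rows = [r for r, y in zip(train_x, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return min(centroids, key=lambda c: math.dist(centroids[c], x))


def kfold_error(xs, ys, k):
    """Fraction of samples misclassified across k interleaved folds."""
    n = len(xs)
    errors = 0
    for fold in range(k):
        test_idx = set(range(fold, n, k))
        train_x = [xs[i] for i in range(n) if i not in test_idx]
        train_y = [ys[i] for i in range(n) if i not in test_idx]
        for i in test_idx:
            if nearest_centroid_predict(train_x, train_y, xs[i]) != ys[i]:
                errors += 1
    return errors / n
```

For a synthetic 2 s signal sampled at 128 Hz that contains a 20 Hz component of amplitude 1 and a 40 Hz component of amplitude 0.5, `relative_band_power` assigns about 80% of the power to the beta band and 20% to the gamma band. In per-subject use, one such pair of values per electrode would form the feature vector passed to the classifier. The direct DFT keeps the sketch dependency-free; practical EEG work would normally use an FFT-based estimator such as Welch's method instead.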

Acknowledgements

The authors would like to thank all of the individuals involved in this research. The study was partially supported by grant projects of the Ministry of Health of the Czech Republic (FN HK 00179906) and of Charles University in Prague, Czech Republic (PROGRES Q40).

About this article

Cite this article

Besedová, P., Vyšata, O., Mazurová, R. et al. Classification of brain activities during language and music perception. SIViP 13, 1559–1567 (2019). https://doi.org/10.1007/s11760-019-01505-5

DOI: https://doi.org/10.1007/s11760-019-01505-5