Abstract

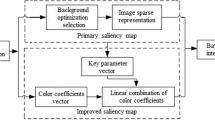

In this paper, a co-learning saliency detection method based on coupled channels and low-rank factorization is proposed. It imitates the structural sparse coding and the cooperative processing mechanism of the dorsal “where” and ventral “what” pathways in the human visual system (HVS). First, each image is partitioned into superpixels, and their structural sparsity is exploited to locate pure background regions along the image borders. Second, the image is processed by two cortical pathways that cooperatively learn a “where” feature map and a “what” feature map, taking the background as the dictionary and using the sparse coding errors as an indication of saliency. Finally, the two feature maps are integrated to generate the saliency map. Because the “where” and “what” feature maps are complementary, our method can highlight the salient region and suppress the background. Experiments on several public benchmarks show its superiority over its counterparts.
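The central idea of the abstract, scoring each superpixel by how poorly a background dictionary reconstructs it, can be illustrated with a small NumPy sketch. This is not the authors' implementation and rests on several assumptions: superpixel feature vectors are taken as precomputed, the background indices are simply given rather than chosen by the paper's structural-sparsity step, ISTA (iterative soft-thresholding) stands in for whatever sparse solver the paper actually uses, the low-rank factorization is omitted, and `fuse_maps` (a plain average) is a hypothetical integration rule. The function names and the `lam` value are likewise illustrative.

```python
import numpy as np


def ista_sparse_code(D, x, lam=0.1, n_iter=200):
    """Approximately solve min_a 0.5*||x - D a||^2 + lam*||a||_1 with ISTA."""
    # Step size 1/L, where L = ||D||_2^2 is the Lipschitz constant of the gradient.
    L = np.linalg.norm(D, ord=2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        z = a - D.T @ (D @ a - x) / L  # gradient step on the data term
        a = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)  # soft-thresholding (L1 prox)
    return a


def background_saliency(features, border_idx, lam=0.1):
    """Saliency = sparse-coding error of each superpixel over a background dictionary.

    features   : (n, d) array, one feature vector per superpixel (assumed precomputed).
    border_idx : indices of superpixels treated as pure background (assumed given).
    """
    # d x k dictionary, one atom per background superpixel (a copy, so features is untouched).
    D = features[border_idx].T.astype(float)
    D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12  # unit-norm atoms
    # Large reconstruction error => poorly explained by background => likely salient.
    errors = np.array([np.sum((x - D @ ista_sparse_code(D, x, lam)) ** 2) for x in features])
    span = errors.max() - errors.min()
    # Min-max normalize so maps from different channels share a [0, 1] scale.
    return (errors - errors.min()) / span if span > 0 else np.zeros_like(errors)


def fuse_maps(where_map, what_map):
    """Hypothetical integration rule: plain average of two normalized maps."""
    return 0.5 * (where_map + what_map)


# Toy usage: 20 similar "background" superpixels and 5 distinct "object" superpixels.
rng = np.random.default_rng(0)
feats = np.vstack([rng.normal([0.2, 0.3, 0.4], 0.01, (20, 3)),
                   rng.normal([0.9, 0.1, 0.1], 0.01, (5, 3))])
sal = background_saliency(feats, border_idx=np.arange(12))
print(sal[:20].max() < sal[20:].min())  # True
```

In the toy run, superpixels resembling the dictionary atoms receive near-zero error, while the dissimilar ones score close to 1. Applying `background_saliency` to two different feature channels and averaging the results with `fuse_maps` loosely mirrors the “where”/“what” integration step, though the paper's actual features and fusion rule may differ.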



Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61771380, 61906145, U1730109, 91438103, 61771376, 61703328, 91438201, U1701267); the Equipment pre-research project of the 13th Five-Year Plan (Nos. 6140137050206, 414120101026, 6140312010103, 6141A020223, 6141B06160301, 6141B07090102); the Major Research Plan in Shaanxi Province of China (Nos. 2017ZDXM-GY-103, 017ZDCXL-GY-03-02); the Foundation of the State Key Laboratory of CEMEE (Nos. 2017K0202B, 2018K0101B, 2019K0203B, 2019Z0101B); and the Science Basis Research Program in Shaanxi Province of China (Nos. 16JK1823, 2017JM6086, 2019JQ-663).


Cite this article

Gao, Y., Yang, S. Co-learning saliency detection with coupled channels and low-rank factorization. SIViP 14, 1479–1486 (2020). https://doi.org/10.1007/s11760-020-01683-7


DOI: https://doi.org/10.1007/s11760-020-01683-7