Abstract

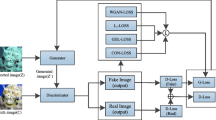

Raw underwater images usually suffer from quality degradation and low resolution. To obtain high-resolution (HR) underwater images, several super-resolution (SR) algorithms built on deep convolutional neural networks have achieved strong visual results. However, most previous works do not make full use of the internal feature information, and simply widening or deepening the network contributes little to performance. In this paper, an attention-guided multi-path cross-convolution neural network (AMPCNet) is proposed for underwater image SR. We present a multi-path cross (MPC) module, which contains residual blocks and dilated blocks, to enhance the model's learning capacity and enrich its abstract feature representation. Specifically, the cross-connections fuse the local features learned by the residual blocks with the multi-scale features acquired by the dilated blocks, and the combination of dilated and ordinary convolution achieves a trade-off between performance and efficiency. Furthermore, an attention block adaptively rescales the channel-wise features to yield more discriminative representations. Finally, an upsample block reconstructs the HR image. Experimental results demonstrate the superiority of AMPCNet in terms of both quantitative metrics and visual quality.
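The trade-off attributed to dilated convolution can be illustrated with simple receptive-field arithmetic. The sketch below is not the AMPCNet configuration: the two three-layer paths, their dilation rates, and all function names are hypothetical and chosen only for illustration. It relies on the standard fact that a kernel of size k with dilation d spans k + (k - 1)(d - 1) pixels while keeping the same number of weights.

```python
from typing import Sequence, Tuple


def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span in pixels covered by one dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def receptive_field(layers: Sequence[Tuple[int, int]]) -> int:
    """Receptive field of a stack of stride-1 convolutions.

    Each layer is (kernel_size, dilation). Every stride-1 layer widens
    the receptive field by (effective_kernel_size - 1).
    """
    rf = 1
    for kernel_size, dilation in layers:
        rf += effective_kernel_size(kernel_size, dilation) - 1
    return rf


def weights_per_filter(kernel_size: int, in_channels: int) -> int:
    """Weights in one 2-D convolution filter; dilation does not change this."""
    return kernel_size * kernel_size * in_channels


# Hypothetical paths (assumed for illustration, not taken from the paper).
ordinary_path = [(3, 1), (3, 1), (3, 1)]  # three ordinary 3x3 convolutions
dilated_path = [(3, 1), (3, 2), (3, 4)]   # same depth, growing dilation

print(receptive_field(ordinary_path))  # 7
print(receptive_field(dilated_path))   # 15
print(weights_per_filter(3, 64))       # 576 for either path's layers
```

Under these assumptions both paths use the same number of weights per layer, yet the dilated path sees a 15-pixel context instead of 7, which is one way to read the abstract's claim that mixing dilated and ordinary convolution balances performance against efficiency.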

Acknowledgements

This work was supported by the Scientific Research Project of Tianjin Municipal Education Commission [grant number 2019KJ105]. The authors also acknowledge the anonymous reviewers for their helpful comments on the manuscript.

About this article

Cite this article

Zhang, Y., Yang, S., Sun, Y. et al. Attention-guided multi-path cross-CNN for underwater image super-resolution. SIViP 16, 155–163 (2022). https://doi.org/10.1007/s11760-021-01969-4

DOI: https://doi.org/10.1007/s11760-021-01969-4