Abstract

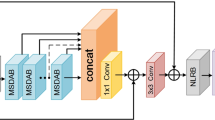

Single image super-resolution (SISR) has important applications in many fields. With this technology, the bandwidth required for image transmission can be reduced, the quality of remote sensing observation can be improved, and lesion cells can be located accurately. Convolutional neural networks (CNNs) with a multi-scale feature extraction structure can extract a large amount of information from a low-resolution input, which helps to improve SISR performance. However, these CNNs usually treat different types of information equally, so the extracted information contains considerable redundancy, which limits the representational ability of the networks. We propose an attention-enhanced multi-scale residual block (AMRB), which increases the proportion of useful information by embedding a convolutional block attention module (CBAM). Furthermore, we construct an attention-enhanced multi-scale residual network based on one-time feature fusion (OAMRN). Extensive experiments demonstrate the necessity of the AMRB and the superiority of the proposed OAMRN over state-of-the-art methods in terms of both quantitative metrics and visual quality.
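To make the role of the attention module more concrete, the following is a minimal pure-Python sketch of CBAM's two sequential steps (channel attention, then spatial attention) wrapped in a residual connection. It is an illustration under stated assumptions, not the authors' implementation: the placement of CBAM after the block body and before the residual addition, the function names (`channel_attention`, `spatial_attention`, `cbam`, `amrb_like_block`), and the `body` callable standing in for the multi-scale convolution branches are all hypothetical. Biases are omitted and feature maps are plain nested lists to keep the example dependency-free.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(fmap, w1, w2):
    """CBAM step 1: reweight channels.

    fmap: list of C channels, each an H x W list of lists of floats.
    w1 (hidden x C) and w2 (C x hidden) are the shared two-layer MLP
    weights (biases omitted in this sketch).
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]  # global average pooling
    mx = [max(map(max, ch)) for ch in fmap]  # global max pooling

    def mlp(v):
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    # M_c = sigmoid(MLP(avg) + MLP(max)): one weight per channel
    scale = [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]
    return [[[s * v for v in row] for row in ch] for s, ch in zip(scale, fmap)]


def spatial_attention(fmap, kernel):
    """CBAM step 2: reweight spatial positions.

    kernel: 2 x k x k weights applied (cross-correlation, zero padding)
    to the channel-wise mean map and channel-wise max map.
    """
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    pooled = (
        [[sum(ch[i][j] for ch in fmap) / c for j in range(w)] for i in range(h)],
        [[max(ch[i][j] for ch in fmap) for j in range(w)] for i in range(h)],
    )
    k = len(kernel[0])
    pad = k // 2
    att = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for src, ker in zip(pooled, kernel):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            acc += ker[di][dj] * src[y][x]
            att[i][j] = sigmoid(acc)  # one weight per pixel, shared by all channels
    return [[[att[i][j] * ch[i][j] for j in range(w)] for i in range(h)] for ch in fmap]


def cbam(fmap, w1, w2, kernel):
    """Channel attention followed by spatial attention, as in CBAM."""
    return spatial_attention(channel_attention(fmap, w1, w2), kernel)


def amrb_like_block(fmap, body, w1, w2, kernel):
    """Hypothetical AMRB-style block: x + CBAM(body(x)).

    `body` is a placeholder for the multi-scale convolution branches and
    must return a feature map with the same shape as `fmap`.
    """
    refined = cbam(body(fmap), w1, w2, kernel)
    return [
        [[a + b for a, b in zip(r0, r1)] for r0, r1 in zip(c0, c1)]
        for c0, c1 in zip(fmap, refined)
    ]


if __name__ == "__main__":
    x = [
        [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
        [[-1.0, 0.0, 1.0], [0.5, 0.5, 0.5], [2.0, 2.0, 2.0]],
    ]
    w1 = [[0.0, 0.0]]  # hidden size 1, C = 2
    w2 = [[0.0], [0.0]]
    kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]  # 2 x 3 x 3
    print(cbam(x, w1, w2, kernel)[0][0])  # [0.25, 0.5, 0.75]
    print(amrb_like_block(x, lambda f: f, w1, w2, kernel)[0][0])  # [1.25, 2.5, 3.75]
```

With all-zero weights both attention maps equal sigmoid(0) = 0.5, so CBAM scales every feature by 0.25. In a trained network the learned weights make these scales differ per channel and per pixel, which is how informative features can receive a larger share of the block's output than redundant ones.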


Acknowledgements

This study was supported by the National Natural Science Foundation of China [No. 61801400] and JSPS KAKENHI [No. JP18F18392].

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Sun, Y., Qin, J., Gao, X. et al. Attention-enhanced multi-scale residual network for single image super-resolution. SIViP 16, 1417–1424 (2022). https://doi.org/10.1007/s11760-021-02095-x

DOI: https://doi.org/10.1007/s11760-021-02095-x