Abstract

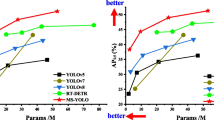

Wildlife protection is crucial to the construction of an ecological civilization, and computer vision makes it possible to monitor wildlife efficiently and accurately. In this paper, we build a wildlife database and propose a YOLO network for wildlife detection (WD-YOLO). First, we design a Weighted Path Aggregation Network that fuses the hierarchical feature maps extracted by the backbone network in order to detect wild animals at multiple scales. Second, we propose a Neighborhood Analysis Non-Maximum Suppression to handle overlapping targets in wildlife detection: during suppression, the predictions for adjacent animals are retained while redundant detection boxes are eliminated. Finally, to improve the generalization performance of the network, CutOut and MixUp are used for image augmentation so that the model can adapt to different scenarios. Experimental results show that, compared with the pre-optimized model, the precision of wildlife detection improves by 5.543% and the recall by 1.651%. The proposed WD-YOLO significantly outperforms other state-of-the-art one-stage object detection networks on our self-built wildlife dataset.
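The two augmentations named in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `cutout` and `mixup`, the patch size, and the Beta parameter `alpha` are assumptions chosen for illustration, and the abstract does not state how bounding-box labels are handled after mixing.

```python
import numpy as np


def cutout(image, size, rng):
    """Zero out one square patch of side `size` centred at a random pixel.

    `size` is a hypothetical hyperparameter; the paper's value is not given here.
    """
    h, w = image.shape[:2]
    cy, cx = rng.integers(0, h), rng.integers(0, w)
    # Clip the patch to the image borders.
    y0, y1 = max(cy - size // 2, 0), min(cy + size // 2, h)
    x0, x1 = max(cx - size // 2, 0), min(cx + size // 2, w)
    out = image.copy()
    out[y0:y1, x0:x1] = 0
    return out


def mixup(image_a, image_b, alpha, rng):
    """Blend two same-sized images with a weight drawn from Beta(alpha, alpha).

    Returns the blended image and the mixing weight `lam`.
    """
    lam = rng.beta(alpha, alpha)
    mixed = lam * image_a + (1.0 - lam) * image_b
    return mixed, lam


rng = np.random.default_rng(0)
img_a = np.ones((32, 32, 3), dtype=np.float32)
img_b = np.zeros((32, 32, 3), dtype=np.float32)

occluded = cutout(img_a, size=8, rng=rng)
blended, lam = mixup(img_a, img_b, alpha=1.5, rng=rng)
```

In a detection setting the mixing weight is usually also needed when combining the labels of the two source images, which is why `mixup` returns it alongside the blended image.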

Acknowledgements

This work was supported by the Key Research and Development Program in Jiangsu Province (No. BE2016739).

Cite this article

Lu, X., Lu, X. An efficient network for multi-scale and overlapped wildlife detection. SIViP 17, 343–351 (2023). https://doi.org/10.1007/s11760-022-02237-9

DOI: https://doi.org/10.1007/s11760-022-02237-9