Abstract

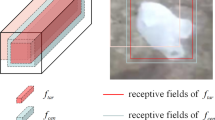

Visual object tracking is widely used to track obstacles in autonomous driving. The task demands real-time performance while coping with target deformation and illumination changes. To address these challenges, this paper proposes a high-precision, real-time visual tracking algorithm for autonomous driving based on the Siamese network. First, the tracker uses ensemble learning to fuse two feature extraction branches derived from convolutional neural networks. Then, a channel attention mechanism is applied before concatenation to redistribute the feature weights. Finally, a region proposal network generates the tracking bounding boxes. Extensive experiments show that, compared with state-of-the-art algorithms, the proposed method achieves satisfactory results on four benchmark datasets while maintaining a higher frame rate. In addition, qualitative analysis on the KITTI dataset indicates that the method can meet the challenges of autonomous driving.
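The attention-then-concatenation step described in the abstract can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: it assumes a squeeze-and-excitation style gate for the channel attention, and the function names (`channel_attention`, `fuse_branches`), the reduction ratio `r`, the feature shapes, and the random weights are all hypothetical. The backbone networks and the region proposal head are omitted.

```python
import numpy as np


def channel_attention(feat, w1, w2):
    """Reweight channels with a squeeze-and-excitation style gate (assumed design).

    feat: feature map of shape (C, H, W)
    w1:   bottleneck weights of shape (C // r, C)
    w2:   expansion weights of shape (C, C // r)
    """
    squeezed = feat.mean(axis=(1, 2))            # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)      # ReLU bottleneck -> (C // r,)
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # sigmoid gate in (0, 1) -> (C,)
    return feat * gate[:, None, None]            # scale each channel by its gate


def fuse_branches(feat_a, feat_b, params_a, params_b):
    """Apply channel attention to each branch, then concatenate along channels."""
    a = channel_attention(feat_a, *params_a)
    b = channel_attention(feat_b, *params_b)
    return np.concatenate([a, b], axis=0)


# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
C, H, W, r = 8, 6, 6, 4
feat_a = rng.standard_normal((C, H, W))
feat_b = rng.standard_normal((C, H, W))
params_a = (rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
params_b = (rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))

fused = fuse_branches(feat_a, feat_b, params_a, params_b)
print(fused.shape)  # (16, 6, 6)
```

With two 8-channel branches, the fused map has 16 channels. In a full tracker, a map like this would be passed on to the region proposal network that produces the bounding boxes.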

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lyu, P., Wei, M. & Wu, Y. High-precision and real-time visual tracking algorithm based on the Siamese network for autonomous driving. SIViP 17, 1235–1243 (2023). https://doi.org/10.1007/s11760-022-02331-y

DOI: https://doi.org/10.1007/s11760-022-02331-y