Abstract

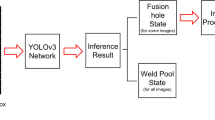

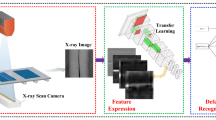

Real-time recognition of weld feature locations under compound noise is critical for robotic weld tracking and intelligent welding. Strong noise interference makes it difficult for traditional machine vision methods to obtain satisfactory detection results, while conventional deep convolutional networks have a large number of parameters, slow detection speed, and low detection accuracy, and therefore cannot meet actual welding requirements. This paper therefore proposes a new lightweight detector, Light-YOLO-Welding, based on an improved YOLOv4, for detecting weld feature points. First, the backbone network is replaced with MobileNetV3, which reduces the number of network parameters and increases detection speed. Second, the path aggregation network is replaced with a Bidirectional Feature Pyramid Network (BiFPN), whose bidirectional cross-scale connections integrate richer semantic features while maintaining spatial information; depthwise separable convolutions are applied in the SPP and BiFPN layers. Finally, to address the imbalance between positive and negative samples in the assembled data, a class loss function based on AP-Loss is proposed to improve weld feature recognition accuracy. The results show that the method achieves an mAP of 98.84%, an accuracy of 98.08%, a recall of 97.87%, and a speed of 62.22 frames/s, confirming that it offers higher accuracy and speed. The average error in extracting key weld points in the camera coordinate system is 0.102 mm. Compared with other methods, the proposed method is more reliable and efficient for detecting weld feature points.
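To illustrate why depthwise separable convolutions reduce the parameter count, the following minimal sketch compares the number of weights in a standard convolution with that of a depthwise convolution followed by a 1 × 1 pointwise convolution. The function names and the layer sizes (256 input channels, 512 output channels, 3 × 3 kernel) are assumptions chosen only for illustration; they are not taken from the Light-YOLO-Welding architecture.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k convolution plus a 1 x 1 pointwise
    convolution (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer sizes, not values reported in the paper.
    c_in, c_out, k = 256, 512, 3
    std = standard_conv_params(c_in, c_out, k)
    sep = depthwise_separable_params(c_in, c_out, k)
    print(f"standard: {std}, separable: {sep}, ratio: {sep / std:.3f}")
```

For these assumed sizes the separable layer needs roughly 11% of the weights of the standard layer; in general the ratio is 1/c_out + 1/k², so the saving grows with kernel size and output width.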

Data availability

Our self-built dataset contains 4769 weld images with varying levels of noise. The datasets used during the current study are available from the corresponding author on reasonable request.

Funding

This work was supported by the National Natural Science Foundation of China (No. 61905178), the Program for Innovative Research Team in University of Tianjin (No. TD13-5036), and the Tianjin Science and Technology Popularization Project (No. 22KPXMRC00090).

Ethics declarations

Conflict of interest

Not applicable.

About this article

Cite this article

Song, L., Kang, J., Zhang, Q. et al. A weld feature points detection method based on improved YOLO for welding robots in strong noise environment. SIViP 17, 1801–1809 (2023). https://doi.org/10.1007/s11760-022-02391-0

DOI: https://doi.org/10.1007/s11760-022-02391-0