Abstract

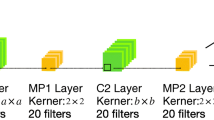

On mobile terminals equipped with only a monocular camera and limited computational power (no GPU), real-time dynamic hand gesture recognition is difficult when multiple fingertips move within a small range, because of spatiotemporal noise. This paper proposes a KOA-CLSTM-based real-time dynamic hand gesture recognition method for mobile terminals. The method consists of three parts: Kernel Optimize Accumulation (KOA), Union Frame Difference (UDF), and CLSTM. First, KOA extracts gestures in difficult cases such as parallel fingers, juxtaposed fingertips, natural syndactyly, and curved fingertips. Second, UDF fuses frame features in both the temporal and spatial dimensions, suppressing spatiotemporal noise such as inter-frame noise, camera dithering, and background interference on the mobile terminal. Third, the lightweight CLSTM network, which includes a 5-block 3D convolutional neural network, outperforms C3D under similar conditions, with a classification accuracy of 0.9689 in 0.0267 and an identification accuracy of 0.9682 in less than 0.13 s. These results indicate that the proposed architecture performs well on fingertip classification and hand gesture recognition on the mobile terminal.
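The abstract does not specify how UDF is computed. As a rough illustration of the general idea of combining frame differences over time, the sketch below thresholds the absolute difference between consecutive grayscale frames and takes the union (logical OR) of the resulting motion masks. The function name `union_frame_difference`, the `threshold` parameter, and the OR-based fusion are assumptions made for this example; they are not taken from the paper and may differ from the authors' UDF.

```python
import numpy as np


def union_frame_difference(frames, threshold=25):
    """Hypothetical helper (not the paper's UDF): OR together the
    thresholded absolute differences of consecutive grayscale frames."""
    # Use a signed type so the subtraction cannot wrap around on uint8 input.
    frames = np.asarray(frames, dtype=np.int16)
    if frames.ndim != 3 or frames.shape[0] < 2:
        raise ValueError("expected at least two grayscale frames shaped (T, H, W)")
    # Per-pixel change between each pair of consecutive frames: (T-1, H, W).
    diffs = np.abs(frames[1:] - frames[:-1])
    # Small changes (sensor noise) fall below the threshold and are dropped.
    motion = diffs > threshold
    # Union over time: a pixel is kept if it moved in any frame pair.
    return motion.any(axis=0)


# Synthetic clip: low-amplitude noise plus one bright point that moves.
rng = np.random.default_rng(0)
clip = rng.integers(0, 5, size=(4, 6, 6))
clip[1, 2, 2] = 200  # the point appears in frame 1
clip[2, 2, 3] = 200  # and has moved one pixel to the right in frame 2
mask = union_frame_difference(clip)
```

In this toy clip the mask marks only the two pixels the bright point visited, while the background noise is discarded by the threshold. A real pipeline would need a threshold tuned to the camera and some compensation for camera motion, neither of which is covered here.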

Data availability

The experimental datasets generated, used, or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants No. 61672461 and No. 62073293.

Ethics declarations

Ethical approval

This is an original paper, and the submission has been approved by all authors. If accepted, the work described here will not be published elsewhere. The study has not been split into several parts to increase the number of submissions, nor has it been submitted to multiple journals or to one journal over time. No data (including images) have been fabricated or manipulated to support our conclusions. No data, text, or theories by others are presented as if they were our own.

Human or animal participants

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Hou, X., Cen, S., Zhang, M. et al. KOA-CLSTM-based real-time dynamic hand gesture recognition on mobile terminal. SIViP 17, 1841–1854 (2023). https://doi.org/10.1007/s11760-022-02395-w

DOI: https://doi.org/10.1007/s11760-022-02395-w