Abstract

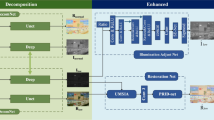

Low-light image enhancement is widely used in industry, yet it remains a challenging computer vision task. Existing methods focus on adjusting the overall brightness of the image but ignore regional overexposure and uneven illumination enhancement. They also introduce new problems during the process, such as noise and tone distortion. To solve these problems, we propose a two-step deep learning-based method that combines an attention-guided network with hierarchical global priors (GPANet) and a mapping curve function. GPANet is first used as a deep curve estimation network to generate a self-adaptive enhancement mapping feature map. A pixel-wise curve is then applied to help generate enhanced images with a natural tone. To evaluate the proposed method, we conducted comparative experiments against different methods on commonly used public datasets; the proposed method achieved superior results in terms of the naturalness image quality evaluator (NIQE) and lightness order error (LOE).
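The second step, applying a pixel-wise curve to a per-pixel parameter map, can be illustrated with a minimal sketch. The abstract does not give the curve's form, so the example below assumes the quadratic curve LE(x) = x + α·x·(1 − x) commonly used in deep curve estimation; this is a swapped-in stand-in, not necessarily the authors' mapping curve. The function name `apply_pixelwise_curve`, the number of iterations, and the constant parameter maps (standing in for the output of a network such as GPANet) are all hypothetical.

```python
import numpy as np


def apply_pixelwise_curve(image, alpha_maps):
    """Iteratively apply LE(x) = x + a * x * (1 - x) with a per-pixel a.

    image:      float array in [0, 1], shape (H, W, C).
    alpha_maps: iterable of arrays in [-1, 1], each broadcastable to image.
                In a full pipeline these would come from the curve
                estimation network; here they are supplied by the caller.
    """
    enhanced = image.astype(np.float64)
    for alpha in alpha_maps:
        # For x in [0, 1] and a in [-1, 1] the result stays in [0, 1],
        # and a > 0 brightens every pixel that is not already 0 or 1.
        enhanced = enhanced + alpha * enhanced * (1.0 - enhanced)
    # Clip only to guard against floating-point drift.
    return np.clip(enhanced, 0.0, 1.0)


# Hypothetical inputs: a dark random image and constant parameter maps
# (assumption: a trained network would predict spatially varying maps).
rng = np.random.default_rng(0)
low_light = rng.uniform(0.0, 0.3, size=(4, 4, 3))
alpha_maps = [np.full_like(low_light, 0.8) for _ in range(4)]

enhanced = apply_pixelwise_curve(low_light, alpha_maps)
identity = apply_pixelwise_curve(low_light, [np.zeros_like(low_light)])
```

Because the parameter is a full map rather than a single scalar, dark regions can be lifted strongly while already bright regions are left nearly unchanged, which is the property a per-pixel curve needs in order to avoid regional overexposure.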

Acknowledgements

This work was supported in part by the Major Scientific and Technological Projects of CNPC under Grant ZD2019-183-004, and in part by the Fundamental Research Funds for the Central Universities under Grant 20CX05019A.

About this article

Cite this article

Gong, A., Li, Z., Wang, H. et al. Attention-guided network with hierarchical global priors for low-light image enhancement. SIViP 17, 2083–2091 (2023). https://doi.org/10.1007/s11760-022-02422-w

DOI: https://doi.org/10.1007/s11760-022-02422-w