Abstract

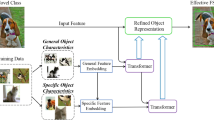

In few-shot object detection, previous works do not fully refine and utilize class prototype knowledge because training instances are scarce. We observe that how the output features of the RoI pooling layer are used strongly affects how well prototype features are captured, which motivates us to focus on reusing them. We therefore propose a multiple knowledge embedding network that introduces three improvements in the fine-tuning stage. First, to strengthen feature extraction with an attention mechanism, Up-CoTNet replaces the 3×3 convolutions in ResNet-101. Second, a feature enhancement module is added to reinforce common-object reasoning on the output of the RoI pooling layer. Third, we propose a contrastive learning branch to capture the information encoded between different feature regions. Experiments on the PASCAL VOC and MS COCO datasets show that our model raises performance by 1.3% (+0.5 AP) on average compared with previous methods.
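The abstract names a contrastive learning branch over RoI features but does not state its loss. The following is only an illustrative sketch, not the paper's implementation. It assumes a supervised contrastive (SupCon-style) objective in the manner of Khosla et al. (NeurIPS 2020), applied to class-labelled RoI embeddings, in the spirit of contrastive proposal encoding. The function name `supervised_contrastive_loss`, the default temperature of 0.2 and the L2 normalization are all assumptions.

```python
import numpy as np


def supervised_contrastive_loss(features, labels, temperature=0.2):
    """SupCon-style loss over a batch of RoI embeddings (hypothetical sketch).

    features:    (N, D) array of non-zero RoI feature vectors, N >= 2.
    labels:      (N,) integer class label assigned to each RoI.
    temperature: softmax temperature (0.2 is an assumed default).
    """
    z = np.asarray(features, dtype=np.float64)
    labels = np.asarray(labels)
    n = z.shape[0]
    if n < 2:
        raise ValueError("need at least two RoI embeddings")

    # L2-normalize so that dot products become cosine similarities.
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = z @ z.T / temperature

    # An anchor is never compared with itself: mask the diagonal out.
    self_mask = np.eye(n, dtype=bool)
    logits = np.where(self_mask, -np.inf, sim)

    # Row-wise log-softmax over all other RoIs, shifted for numerical stability.
    row_max = logits.max(axis=1, keepdims=True)
    shifted = logits - row_max
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

    # Positives are the other RoIs that share the anchor's class label.
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    pos_count = pos_mask.sum(axis=1)
    valid = pos_count > 0
    if not valid.any():
        return 0.0  # no anchor has a positive partner in this batch

    # Mean log-probability of the positives for each anchor that has one.
    pos_log_prob = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    return float(-(pos_log_prob[valid] / pos_count[valid]).mean())


# Usage: two tight clusters, scored with matching vs. mismatched labels.
feats = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
print(supervised_contrastive_loss(feats, [0, 0, 1, 1], temperature=0.5))  # ~0.2395
print(supervised_contrastive_loss(feats, [0, 1, 0, 1], temperature=0.5))  # ~2.2395
```

Masking the diagonal keeps each RoI from counting itself as a positive. Anchors whose class appears only once in the batch are skipped, so they do not contribute an undefined term. When labels agree with the feature clusters, the loss is small. When they disagree, it is large. This is the pressure that pulls same-class region features together.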

Materials Availability

The PASCAL VOC dataset can be downloaded from https://pjreddie.com/projects/pascal-voc-dataset-mirror/, and the MS COCO dataset from https://cocodataset.org/.

Funding

This work is supported by the National Natural Science Foundation of China (No. 61771334) and by the National Key Research and Development Program of China (project titled “Brain-inspired General Vision Models and Applications”).

Author information

Contributions

XG contributed to the conception of the study; YC performed the experiments; JW contributed significantly to the analysis and manuscript preparation; all authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable: this study involves no human or animal subjects.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gong, X., Cai, Y. & Wang, J. Multiple knowledge embedding for few-shot object detection. SIViP 17, 2231–2240 (2023). https://doi.org/10.1007/s11760-022-02438-2

DOI: https://doi.org/10.1007/s11760-022-02438-2