Abstract

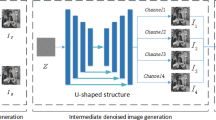

In this work, by analyzing the modeling capability of the unsupervised deep image prior (DIP) network and its uncertainty in recovering lost details, we aim to significantly boost its denoising performance by jointly exploiting generative and fusion strategies, resulting in a highly effective unsupervised three-stage recovery process. More specifically, for a given noisy image, we first apply two representative image denoisers, one from the internal prior-based and one from the external prior-based denoising methods, to produce two initial denoised images. From these two initial denoised images, we randomly generate a sufficient number of target images with a novel spatially random mixer. Then, we follow the standard DIP denoising routine, but with different random inputs and target images, to generate multiple complementary samples in separate runs. For more randomness and stability, some of the generated samples are dropped. Finally, the remaining samples are fused pixel-wise with weight maps generated by an unsupervised generative network, yielding a final denoised image of significantly improved quality. Extensive experiments on synthetic and real-world noisy images demonstrate that, with our boosting strategy, the proposed method remarkably outperforms the original DIP and previous leading unsupervised networks in terms of peak signal-to-noise ratio and structural similarity, and is competitive with state-of-the-art supervised ones.
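The data flow of the three stages can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify how the spatially random mixer works, so a per-pixel coin flip between the two initial denoised images is assumed here. The DIP runs are omitted (the mixed targets stand in for DIP outputs), and uniform weight maps stand in for the maps that the paper obtains from an unsupervised generative network. The names `random_mix`, `drop_samples`, and `fuse` are hypothetical, and plain nested lists stand in for image arrays.

```python
import random


def random_mix(img_a, img_b, rng):
    """Assumed mixer: take each pixel from img_a or img_b with probability 0.5."""
    return [
        [a if rng.random() < 0.5 else b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]


def drop_samples(samples, keep, rng):
    """Randomly keep `keep` samples and discard the rest."""
    return rng.sample(samples, keep)


def fuse(samples, weight_maps):
    """Pixel-wise weighted average; weights are normalised at every pixel."""
    height, width = len(samples[0]), len(samples[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            total = sum(wm[i][j] for wm in weight_maps)
            weighted = sum(s[i][j] * wm[i][j] for s, wm in zip(samples, weight_maps))
            fused[i][j] = weighted / total
    return fused


rng = random.Random(0)

# Toy 2x3 "initial denoised images" (stand-ins for the two denoisers' outputs).
initial_a = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
initial_b = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

# Stage 1: generate several target images with the assumed mixer.
targets = [random_mix(initial_a, initial_b, rng) for _ in range(6)]

# Stage 2 (omitted): each target would drive one DIP run with its own random
# input. Here the targets themselves stand in for the DIP samples.
samples = drop_samples(targets, keep=4, rng=rng)

# Stage 3: fuse the kept samples. Uniform weights are a placeholder for the
# learned weight maps described in the abstract.
uniform = [[[1.0] * 3 for _ in range(2)] for _ in samples]
denoised = fuse(samples, uniform)
```

With uniform weights the fusion reduces to a plain per-pixel mean of the kept samples; learned weight maps would instead let each pixel favor whichever sample is most reliable at that location.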

Availability of data and materials

The data and materials will be made publicly available online.

Author information

Contributions

SX contributed to the conception of the study. XC wrote the main manuscript text. JL and XC contributed significantly to the analysis and manuscript preparation. NX conducted the experiments. All authors reviewed the manuscript.

Ethics declarations

Ethics approval

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

This work was supported by the National Natural Science Foundation of China under Grants 62162043, 61662044 and 61902168.

About this article

Cite this article

Xu, S., Chen, X., Luo, J. et al. A deep image prior-based three-stage denoising method using generative and fusion strategies. SIViP 17, 2385–2393 (2023). https://doi.org/10.1007/s11760-022-02455-1

DOI: https://doi.org/10.1007/s11760-022-02455-1