Abstract

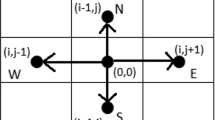

The goal of multi-focus image fusion (MFIF) is to create a single, unified image from multiple images of the same scene in which foreground and background patterns are captured at different depths of focus. The quality of the fused image depends heavily on how precisely the focused areas are detected. In this paper, we propose an MFIF algorithm based on a weighted anisotropic diffusion filter (WADF) and a structural gradient. An edge-preserving filter can work either by diffusing intensities at region borders or by detecting the significant edges in an image, and WADF is an effective way to meet these requirements in multi-dimensional space. First, edge-preserving image smoothing is used to create the weight map pattern. Next, a structural gradient-based focus area detection approach is applied to generate the fusion decision map pattern. Finally, the fused image is created by combining the weight map pattern with the fusion decision map pattern via the fusion rule. The performance of the proposed algorithm has been evaluated both qualitatively and quantitatively, and it has been shown to outperform several existing methods across a range of tests.
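To make the pipeline more concrete, the following is a minimal sketch of the general idea, not the authors' implementation. The abstract does not specify the WADF weighting, the structural gradient, or the fusion rule, so the sketch substitutes classical Perona-Malik anisotropic diffusion, a local gradient-energy focus measure, and a hard per-pixel selection rule. All function names and parameters (`anisotropic_diffusion`, `box_mean`, `focus_measure`, `fuse`, `kappa`, `step`, `radius`) are hypothetical, and inputs are assumed to be grayscale arrays scaled to [0, 1].

```python
import numpy as np


def anisotropic_diffusion(img, n_iter=10, kappa=0.1, step=0.2):
    """Classical Perona-Malik diffusion (an assumed stand-in for WADF)."""
    u = img.astype(np.float64)
    for _ in range(n_iter):
        # Replicating the border gives zero flux across the image boundary.
        p = np.pad(u, 1, mode="edge")
        diffs = (
            p[:-2, 1:-1] - u,  # north neighbour
            p[2:, 1:-1] - u,   # south neighbour
            p[1:-1, :-2] - u,  # west neighbour
            p[1:-1, 2:] - u,   # east neighbour
        )
        # Conductance exp(-(d/kappa)^2) is near 1 in flat areas and near 0
        # across strong edges, so edges are preserved while noise is smoothed.
        u = u + step * sum(np.exp(-(d / kappa) ** 2) * d for d in diffs)
    return u


def box_mean(x, radius=3):
    """Mean over a (2*radius + 1)^2 window with edge replication."""
    k = 2 * radius + 1
    h, w = x.shape
    p = np.pad(x, radius, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + h, dx:dx + w]
    return out / (k * k)


def focus_measure(img, radius=3):
    """Local gradient energy of the edge-preserved (diffused) image."""
    smoothed = anisotropic_diffusion(img)
    gy, gx = np.gradient(smoothed)
    return box_mean(gx ** 2 + gy ** 2, radius)


def fuse(img_a, img_b, radius=3):
    """Pick, per pixel, the source whose neighbourhood looks sharper."""
    decision = focus_measure(img_a, radius) >= focus_measure(img_b, radius)
    fused = np.where(decision, img_a, img_b)
    return fused, decision
```

Calling `fuse(img_a, img_b)` on two registered grayscale images returns the fused image together with a boolean decision map that is `True` where the first image is judged to be in focus. The hard selection rule is a simplification chosen for brevity; a soft weight map blended with the decision map, as the abstract describes, would give smoother transitions at focus boundaries.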

Data availability

Enquiries about data availability should be directed to the authors.

Funding

This research received no specific grant from any funding agency.

Author information

Contributions

T.V.G.: algorithm implementation and simulation. P.P.: problem statement and quality metrics.

Ethics declarations

Conflict of interest

Authors have no conflicts of interest to disclose.

About this article

Cite this article

Vasu, G.T., Palanisamy, P. Gradient-based multi-focus image fusion using foreground and background pattern recognition with weighted anisotropic diffusion filter. SIViP 17, 2531–2543 (2023). https://doi.org/10.1007/s11760-022-02470-2

DOI: https://doi.org/10.1007/s11760-022-02470-2