Abstract

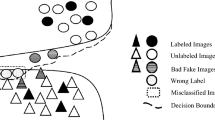

Data augmentation effectively alleviates over-fitting in convolutional neural network-based (CNN-based) models, especially when the training dataset is limited. However, the inconsistency between an augmented sample and its original label remains a critical challenge for augmentation operations. In this paper, we propose a novel data augmentation scheme named Interpretability-Mask (IM), which exploits the interpretability of the classifier to locate the most discriminative regions and preserve label invariance. Concretely, we first construct set-based representations of a sample and its label using superpixel segmentation and the local interpretable model-agnostic explanations (LIME) operator. Secondly, the sample, represented as a set of superpixels, is used to synthesize region-level disturbance samples with a random removal strategy. Then, the label, represented by the set of most interpretive superpixels, is used to maintain consistency between the augmented sample and its original label. Lastly, the augmentation scheme is applied randomly during the training stage. Extensive experiments on challenging datasets show that the IM scheme significantly improves classification performance. On the CIFAR-10 dataset, the Top-1 error rate drops by up to 2.15%. On the CIFAR-100 dataset, it decreases by up to 3.69%, and on Mini-ImageNet the maximum decline is 3.35%. These experimental results demonstrate the effectiveness and generality of the proposed method.
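The pipeline summarized in the abstract can be illustrated with a minimal sketch. This is a hypothetical illustration under stated assumptions, not the authors' implementation: it assumes the superpixel map (e.g. produced by SLIC) and per-superpixel importance weights (e.g. taken from a LIME explanation) have already been computed. The function name `interpretability_mask` and its parameters (`keep_top_k`, `remove_prob`, `apply_prob`, `fill_value`) are our own. Here the `keep_top_k` most important superpixels stand in for the label-preserving set and are never removed; every other superpixel is independently removed with probability `remove_prob`, and the whole transform is applied with probability `apply_prob`.

```python
import random
from typing import Dict, List, Optional

Image = List[List[float]]   # H x W grayscale pixels
Segments = List[List[int]]  # H x W superpixel ids


def interpretability_mask(
    image: Image,
    segments: Segments,
    importance: Dict[int, float],
    keep_top_k: int = 2,
    remove_prob: float = 0.5,
    apply_prob: float = 0.5,
    fill_value: float = 0.0,
    rng: Optional[random.Random] = None,
) -> Image:
    """Hypothetical IM-style augmentation sketch (not the paper's code).

    segments:   superpixel ids, assumed precomputed (e.g. by SLIC).
    importance: superpixel id -> weight, assumed precomputed (e.g. by LIME);
                ids missing from the dict are treated as weight 0.
    """
    if rng is None:
        rng = random.Random()

    # Randomly skip the augmentation so the model also sees clean samples.
    if rng.random() >= apply_prob:
        return [row[:] for row in image]

    # Set-based representation of the sample: all superpixel ids in the map.
    all_ids = sorted({seg for row in segments for seg in row})

    # Assumed "label" set: the most interpretive superpixels, never removed.
    ranked = sorted(all_ids, key=lambda s: importance.get(s, 0.0), reverse=True)
    protected = set(ranked[:keep_top_k])

    # Region-level disturbance: drop each remaining superpixel at random.
    removed = {s for s in all_ids if s not in protected and rng.random() < remove_prob}

    # Fill pixels belonging to removed superpixels; keep all others unchanged.
    return [
        [fill_value if seg in removed else px for px, seg in zip(img_row, seg_row)]
        for img_row, seg_row in zip(image, segments)
    ]


if __name__ == "__main__":
    img = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    seg = [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]
    weights = {0: 0.9, 1: 0.1, 2: 0.5, 3: 0.05}
    # Deterministic settings: always augment, remove every unprotected region.
    print(interpretability_mask(img, seg, weights, keep_top_k=1, remove_prob=1.0, apply_prob=1.0))
    # [[1, 2, 0.0, 0.0], [5, 6, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
```

Protecting the highest-weight superpixels is one simple way to keep the discriminative evidence inside every augmented sample, so the original label remains plausible; the paper may combine the removal and the interpretive set differently.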

Data Availability

All datasets involved in this paper are public.


Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 62103393).

Funding

National Natural Science Foundation of China (Grant No. 62103393).

Author information

Contributions

HZ was responsible for methodology, software, data curation, validation, writing (original draft preparation), writing (review and editing), and visualization. JW, SL, PB, and MX contributed to writing (review and editing). ZC was responsible for supervision, writing (review and editing), project administration, and funding acquisition.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhao, H., Wang, J., Chen, Z. et al. Interpretability-Mask: a label-preserving data augmentation scheme for better classification. SIViP 17, 2799–2808 (2023). https://doi.org/10.1007/s11760-023-02497-z

DOI: https://doi.org/10.1007/s11760-023-02497-z