Abstract

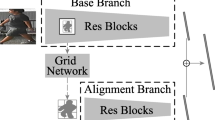

Pedestrian re-identification depends heavily on discriminative features that capture information at multiple spatial scales and in arbitrary combinations of those scales. However, current models obtain their scales by mechanical horizontal segmentation, which inevitably degrades re-identification performance. In this paper, we propose a novel multi-scale network (MSNet) in which each branch extracts a feature map at a specific scale before segmentation. The branches use backbone networks composed of multi-scale residual blocks to extract features at different scales. Moreover, the way each feature map is segmented is determined by its scale, in contrast to methods that segment first and then determine the scale. In addition, MSNet significantly shortens training and testing time owing to its lightweight design. Experimental results on three open-source data sets demonstrate that the proposed MSNet outperforms other models in accuracy, efficiency, and robustness. Code is available at https://github.com/PKY-IMO/MSNet.
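As a rough illustration of scale-dependent segmentation, the sketch below splits a single-channel feature map into horizontal stripes, with the number of stripes chosen by the scale of the branch that produced it, and average-pools each stripe. The scale names, the stripe counts in `STRIPES_PER_SCALE`, and all function names are hypothetical and chosen only for this example; this is not the MSNet implementation, which is available in the repository linked above.

```python
from typing import List

# A feature map with H rows and W columns (one channel, for brevity).
FeatureMap = List[List[float]]

# Hypothetical mapping from branch scale to stripe count (an assumption,
# not taken from the paper).
STRIPES_PER_SCALE = {"coarse": 1, "medium": 2, "fine": 4}


def horizontal_stripes(fmap: FeatureMap, parts: int) -> List[FeatureMap]:
    """Split a feature map into `parts` horizontal stripes of near-equal height."""
    height = len(fmap)
    if not 1 <= parts <= height:
        raise ValueError("parts must be between 1 and the feature-map height")
    # Integer boundaries guarantee that every stripe has at least one row.
    bounds = [i * height // parts for i in range(parts + 1)]
    return [fmap[bounds[i]:bounds[i + 1]] for i in range(parts)]


def average_pool(stripe: FeatureMap) -> float:
    """Average all activations in one stripe."""
    values = [v for row in stripe for v in row]
    return sum(values) / len(values)


def branch_descriptor(fmap: FeatureMap, scale: str) -> List[float]:
    """Pool one value per stripe; the stripe count follows the branch scale."""
    parts = STRIPES_PER_SCALE[scale]
    return [average_pool(s) for s in horizontal_stripes(fmap, parts)]


# A toy 4x2 feature map whose rows have distinct values.
toy_map = [[1.0, 1.0], [3.0, 3.0], [5.0, 5.0], [7.0, 7.0]]
coarse = branch_descriptor(toy_map, "coarse")
medium = branch_descriptor(toy_map, "medium")
fine = branch_descriptor(toy_map, "fine")
```

In this toy setting the coarse branch yields one global descriptor, while the finer branches yield one descriptor per stripe, so the partition follows from the scale rather than the scale following from a fixed partition.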

Acknowledgements

The research reported in this paper was supported by the National Natural Science Foundation of China (42001392, 61701453, 41601431, 61861042) and the Fundamental Research Funds for the Central Universities, China University of Geosciences (Wuhan) (No. CUG190607).

About this article

Cite this article

Pan, K., Zhao, Y., Wang, T. et al. MSNet: a lightweight multi-scale deep learning network for pedestrian re-identification. SIViP 17, 3091–3098 (2023). https://doi.org/10.1007/s11760-023-02530-1

DOI: https://doi.org/10.1007/s11760-023-02530-1