Abstract

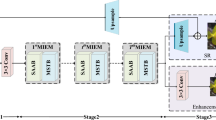

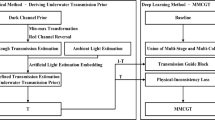

Underwater imaging is degraded by the absorption and scattering of light in water, which produces color casts, low contrast, and blurring. Many existing deep learning-based methods do not account for how this degradation affects individual color channels, which limits their performance. In this work, we propose a progressive attention network based on the RGB and HSV color spaces to improve the visual quality of underwater images. Specifically, we first transform the raw image into the HSV color space to form an RGB branch and an HSV branch. We separate the color channels in each branch and apply the designed FFT-based aggregated residual dense module to capture both spatial- and frequency-domain features. Second, we feed the learned features of each channel in the two branches separately into the triple-color channel-wise attention module to balance the color distribution across channels. Finally, we combine the results from the two branches to obtain a high-quality image. Extensive qualitative and quantitative experiments show that our model outperforms the compared methods, and a series of ablation experiments evaluates the effectiveness of each component.
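The first step described above (building RGB and HSV branches, separating their channels, and looking at a channel in the frequency domain) can be illustrated with a minimal standard-library sketch. This is not the authors' implementation: the function names `split_rgb_hsv` and `dft2_magnitude` are hypothetical, the image is a toy 2x2 example, and a naive discrete Fourier transform stands in for the FFT used in the paper's module.

```python
import cmath
import colorsys


def split_rgb_hsv(image):
    """Split an image (rows of (r, g, b) floats in [0, 1]) into per-channel planes.

    Returns two dicts, one per branch: {"R", "G", "B"} and {"H", "S", "V"}.
    Hypothetical helper for illustration only.
    """
    rgb = {name: [] for name in ("R", "G", "B")}
    hsv = {name: [] for name in ("H", "S", "V")}
    for row in image:
        hsv_row = [colorsys.rgb_to_hsv(r, g, b) for r, g, b in row]
        for i, name in enumerate(("R", "G", "B")):
            rgb[name].append([px[i] for px in row])
        for i, name in enumerate(("H", "S", "V")):
            hsv[name].append([px[i] for px in hsv_row])
    return rgb, hsv


def dft2_magnitude(channel):
    """Magnitude of the naive 2D DFT of a small channel (O(n^4), illustration only)."""
    h, w = len(channel), len(channel[0])
    out = []
    for u in range(h):
        out_row = []
        for v in range(w):
            acc = 0j
            for y in range(h):
                for x in range(w):
                    angle = -2 * cmath.pi * (u * y / h + v * x / w)
                    acc += channel[y][x] * cmath.exp(1j * angle)
            out_row.append(abs(acc))
        out.append(out_row)
    return out


# Toy 2x2 image with a bluish-green cast (red channel suppressed).
image = [
    [(0.1, 0.5, 0.6), (0.2, 0.6, 0.7)],
    [(0.1, 0.4, 0.6), (0.0, 0.5, 0.5)],
]
rgb, hsv = split_rgb_hsv(image)
spectrum = dft2_magnitude(rgb["R"])  # frequency-domain view of the red channel
```

In a real pipeline each of the six channel planes would be passed to a learned module; here the planes are only extracted so that the two-branch, per-channel layout is visible. For images of realistic size, an FFT routine from a numerical library would replace the naive transform.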

Data availability

The open real-world underwater image datasets used in this paper were acquired from the Internet. The UIEB dataset is available at https://li-chongyi.github.io/proj_benchmark.html and the UFO-120 dataset at http://irvlab.cs.umn.edu/resources/ufo-120-datase.

Funding

This work was partially supported by the Key Project of Natural Science Research in Universities of Anhui Province (KJ2021A1408), the Key Project of Natural Science Research in Universities of Anhui Province (2022AH040332), the Anhui Province Quality Improvement Cultivation Project (2022TZPY040), and the Natural Science Research Project of Chuzhou Polytechnic (YJZ-2021-04).

Author information

Contributions

All authors have made contributions to this work. Individual contributions are as follows: M.M. was involved in conceptualization, methodology, software, and writing—original draft preparation; S.W. was involved in methodology, writing—review and editing, and funding acquisition. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Ethical approval

Not applicable.

About this article

Cite this article

Miao, M., Wang, S. PA-ColorNet: progressive attention network based on RGB and HSV color spaces to improve the visual quality of underwater images. SIViP 17, 3405–3413 (2023). https://doi.org/10.1007/s11760-023-02562-7

DOI: https://doi.org/10.1007/s11760-023-02562-7