Abstract

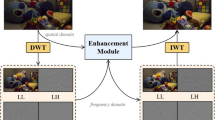

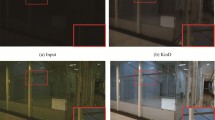

Digital images captured in the real world are inevitably affected by lighting conditions and noise. Moreover, downstream high-level vision tasks, such as computer vision-based object detection and semantic segmentation, can be improved by enhancing the visibility of dark scenes. Although deep learning-based approaches have achieved great success in low-light enhancement, the significant influence of semantic features and noise is often overlooked. Therefore, this paper proposes a new unsupervised low-light image enhancement model based on semantic perception and noise suppression. First, an enhancement factor map is adopted to extract low-light image features, and progressive curve enhancement is then used to adjust the enhancement curve. Unlike fully supervised learning methods, the network is trained with unpaired images. Second, guided by a semantic feature embedding module, the enhancement preserves rich semantic information. Additionally, a self-supervised noise removal module is employed to avoid noise interference and improve image quality. Experimental results and analysis indicate that the proposed scheme not only generates visually pleasing, artifact-free enhanced images but can also be applied to multiple downstream vision tasks.
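The progressive curve enhancement step can be illustrated with a minimal sketch. The abstract does not specify the form of the curve, so the code below assumes the quadratic light-enhancement curve LE(x) = x + α·x·(1 − x) used by Zero-DCE (Guo et al., CVPR 2020), applied iteratively with one pixel-wise α map per iteration. Here the α maps stand in for the enhancement factor map; in the actual model they would be predicted by the network rather than supplied by hand. The function names `curve_step` and `progressive_curve_enhance` are hypothetical, and this is not presented as the authors' implementation.

```python
from typing import List

# Single-channel image as rows of floats in [0, 1].
Image = List[List[float]]


def curve_step(x: float, alpha: float) -> float:
    """One quadratic light-enhancement step (assumed Zero-DCE-style curve).

    LE(x) = x + alpha * x * (1 - x). For x in [0, 1] and alpha in [-1, 1]
    the result stays in [0, 1] and the mapping is monotonic in x.
    """
    if not -1.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [-1, 1]")
    return x + alpha * x * (1.0 - x)


def progressive_curve_enhance(image: Image, alpha_maps: List[Image]) -> Image:
    """Apply the curve iteratively, one pixel-wise alpha map per iteration.

    The alpha maps play the role of a (hypothetical) enhancement factor map;
    in a trained model they would come from the network, not be hand-set.
    """
    out = [row[:] for row in image]  # copy so the input is not modified
    for alpha in alpha_maps:
        # Each alpha map must have the same shape as the image.
        if len(alpha) != len(out) or any(len(a) != len(r) for a, r in zip(alpha, out)):
            raise ValueError("alpha map must match the image shape")
        out = [
            [curve_step(x, a) for x, a in zip(row, arow)]
            for row, arow in zip(out, alpha)
        ]
    return out


if __name__ == "__main__":
    dark = [[0.1, 0.2], [0.05, 0.3]]
    # Four iterations with a constant positive alpha brighten every pixel.
    maps = [[[0.8, 0.8], [0.8, 0.8]] for _ in range(4)]
    print(progressive_curve_enhance(dark, maps))
```

Because α is restricted to [-1, 1], each step is monotonic and bounded to [0, 1]. Repeated application therefore brightens dark pixels without reversing their intensity order or clipping. The semantic feature embedding and noise removal modules are not modeled in this sketch.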

Availability of data and materials

The data that support the findings of this study are available on request from the corresponding author.

Funding

This work is supported by the National Natural Science Foundation of China (Grant No. 52172379).

Author information

Contributions

MZ: Formal analysis, Methodology, Software, Writing—original draft, Writing—review and editing. LL: Funding acquisition, Supervision. DJ: Writing—review and editing.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Ethical approval

This declaration is not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, M., Liu, L. & Jiang, D. Joint semantic-aware and noise suppression for low-light image enhancement without reference. SIViP 17, 3847–3855 (2023). https://doi.org/10.1007/s11760-023-02613-z

DOI: https://doi.org/10.1007/s11760-023-02613-z