Abstract

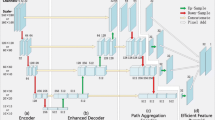

Deep learning models have shown strong feature-extraction capabilities in brain tumor segmentation, allowing medical image information to be exploited to help doctors diagnose disease. However, because brain tumors vary widely in shape and brain images vary in appearance, the segmentation performance of existing models still leaves room for improvement. The Polyp-PVT network is adopted as the base model because of its fast running speed and high segmentation accuracy. The attentional selective fusion (ASF) module is introduced to replace Polyp-PVT's cascaded fusion module (CFM), and a channel shuffle module is placed before the ASF module, yielding the proposed PVT-ASF-CS network. These changes improve the network's feature fusion and its segmentation performance, while the channel shuffle mixes information across channels without increasing the computation or the number of parameters. Ablation experiments on the PVT-ASF-CS network were conducted on the BraTS 2019 dataset, and the network was compared with other models, using the Dice coefficient and the Hausdorff distance (HD) as evaluation metrics. The experimental results show that the proposed PVT-ASF-CS model extracts more complete and detailed features and segments brain tumors more accurately.
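
The abstract relies on two technical ideas: channel shuffle mixes information across channels at no extra cost, and segmentation quality is scored with the Dice coefficient and HD. The following is a minimal NumPy sketch of both, under stated assumptions, and is not the authors' code. `channel_shuffle` is the standard ShuffleNet reshape-transpose-reshape operation on an (N, C, H, W) array. The group count used in PVT-ASF-CS is not given here, so `groups` is a free parameter. `dice_coefficient` and `hausdorff_distance` are generic binary-mask metrics. The Hausdorff distance is computed by brute force between all foreground voxel coordinates, in voxel units, and returning infinity for an empty mask is an assumed convention. BraTS evaluations often report a 95th-percentile variant (HD95), and the abstract does not state which variant is used.

```python
import numpy as np


def channel_shuffle(x: np.ndarray, groups: int) -> np.ndarray:
    """ShuffleNet-style channel shuffle for an (N, C, H, W) array.

    The C channels are viewed as a (groups, C // groups) grid, the two
    grid axes are swapped, and the result is flattened back to C channels,
    so every new group receives channels from every old group. Only
    reshapes and a transpose are used: no weights and no arithmetic.
    """
    n, c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    x = x.reshape(n, groups, c // groups, h, w)
    x = x.transpose(0, 2, 1, 3, 4)  # swap group axis and within-group axis
    return x.reshape(n, c, h, w)


def dice_coefficient(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Dice = 2|P & G| / (|P| + |G|) for binary masks of any shape."""
    p = pred.astype(bool)
    g = target.astype(bool)
    intersection = np.logical_and(p, g).sum()
    # eps keeps the ratio defined when both masks are empty (result is 1.0).
    return float((2.0 * intersection + eps) / (p.sum() + g.sum() + eps))


def hausdorff_distance(pred: np.ndarray, target: np.ndarray) -> float:
    """Symmetric Hausdorff distance between foreground voxel sets, in voxels.

    Brute force over all point pairs, so memory grows with |P| * |G|;
    suitable for small illustrative masks only.
    """
    p = np.argwhere(pred.astype(bool)).astype(float)
    g = np.argwhere(target.astype(bool)).astype(float)
    if len(p) == 0 or len(g) == 0:
        return float("inf")  # assumed convention when a mask is empty
    # Pairwise Euclidean distances, shape (|P|, |G|).
    d = np.sqrt(((p[:, None, :] - g[None, :, :]) ** 2).sum(axis=-1))
    # Largest distance from a point in one set to its nearest point in the other.
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))


if __name__ == "__main__":
    x = np.arange(6).reshape(1, 6, 1, 1)  # six channels labelled 0..5
    print(channel_shuffle(x, groups=2).ravel().tolist())  # [0, 3, 1, 4, 2, 5]
```

Because the shuffle is only a reshape and a transpose, it adds no parameters and no multiply-adds, which is the property the abstract relies on. Shuffling with `groups=g` and then with `groups=C // g` restores the original channel order.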

Data availability

All the data included in this study are available upon request by contacting the corresponding author.

Funding

This research was supported by the Natural Science Foundation of Heilongjiang Province (No. LH2020F033), the National Natural Science Youth Foundation of China (No. 11804068), and the Research Project of the Heilongjiang Province Health Commission (No. 20221111001069).

Author information

Contributions

CL contributed to the conception of the study and contributed significantly to the analysis and manuscript preparation; XY and MZ made important contributions to restructuring the paper, revising and editing the manuscript, and polishing the English; LZ polished, edited, and checked the article during the submission process; YX carried out the data analysis; XM performed the experiments and data analyses and wrote the manuscript. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lan, C., Yu, X., Zhang, L. et al. Brain tumor image segmentation based on improved Polyp-PVT. SIViP 17, 4019–4027 (2023). https://doi.org/10.1007/s11760-023-02632-w
