Abstract

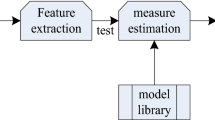

Stuttering (stammering) is regarded as a key factor in speech recognition algorithms: before stuttered speech can be converted into readable text, it must first be detected. In many existing models, recognition accuracy is degraded by additional noise present in the speech signals. This work therefore proposes a bald eagle search algorithm-based vanilla long short-term memory network (BES-vanilla LSTM) for identifying stuttered speech among a set of speech signals. First, speech samples collected from the TORGO database are preprocessed with the spectral subtraction method to remove unwanted noise from the input signals. The preprocessed signals are then passed to the feature extraction phase, in which pitch frequencies are extracted. These pitch frequencies are fed to the input layer of the vanilla LSTM, which, guided by the fitness value of the BES algorithm, recognizes stuttered speech and reports the result at its output layer. The proposed system is implemented in Python, and its performance is compared with that of existing systems in terms of accuracy, precision, recall, and F1-score to assess the overall efficiency of the design.
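
The preprocessing and feature-extraction steps described above can be illustrated with a short NumPy sketch. It is not the authors' implementation: the abstract gives no frame sizes, noise-estimation strategy, or pitch tracker, so this sketch assumes basic magnitude spectral subtraction (noise spectrum averaged over an assumed speech-free lead-in of `noise_sec` seconds, with a small spectral floor) and a simple autocorrelation pitch estimator. The helper names `frame_signal`, `spectral_subtraction`, and `estimate_pitch` and all default parameters are hypothetical.

```python
import numpy as np


def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames (one frame per row).

    Assumes len(x) >= frame_len; trailing samples that do not fill a frame are dropped.
    """
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]


def spectral_subtraction(x, fs, frame_len=512, noise_sec=0.25, floor=0.02):
    """Basic magnitude spectral subtraction (hypothetical parameters)."""
    hop = frame_len // 2
    # Periodic Hann window: at 50 % overlap the shifted windows sum to 1,
    # so plain overlap-add reconstructs the interior of an unmodified signal.
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(frame_len) / frame_len)
    spec = np.fft.rfft(frame_signal(x, frame_len, hop) * window, axis=1)
    mag, phase = np.abs(spec), np.angle(spec)
    # Assumption: the first `noise_sec` seconds contain background noise only.
    n_noise = max(1, (int(noise_sec * fs) - frame_len) // hop + 1)
    noise_mag = mag[:n_noise].mean(axis=0)
    # Subtract the noise estimate, keeping a small spectral floor.
    clean_mag = np.maximum(mag - noise_mag, floor * mag)
    frames = np.fft.irfft(clean_mag * np.exp(1j * phase), n=frame_len, axis=1)
    # Overlap-add the cleaned frames back into a time-domain signal.
    out = np.zeros(len(x))
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + frame_len] += frame
    return out


def estimate_pitch(x, fs, frame_len=1024, hop=256, fmin=60.0, fmax=400.0, voicing=0.3):
    """Per-frame pitch (Hz) from the normalised autocorrelation peak; 0.0 = unvoiced."""
    lag_min, lag_max = int(fs / fmax), int(fs / fmin)
    pitches = []
    for frame in frame_signal(x, frame_len, hop):
        frame = frame - frame.mean()
        # Keep non-negative lags only: index 0 is lag 0.
        ac = np.correlate(frame, frame, mode="full")[frame_len - 1:]
        if ac[0] <= 0:
            pitches.append(0.0)
            continue
        ac = ac / ac[0]
        lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
        pitches.append(fs / lag if ac[lag] > voicing else 0.0)
    return np.array(pitches)


if __name__ == "__main__":
    fs = 16000
    t = np.arange(fs) / fs
    rng = np.random.default_rng(0)
    # Synthetic input: noise throughout, plus a 150 Hz tone from 0.3 s onwards.
    noisy = 0.05 * rng.standard_normal(fs) + np.where(t >= 0.3, 0.5 * np.sin(2 * np.pi * 150 * t), 0.0)
    f0 = estimate_pitch(spectral_subtraction(noisy, fs), fs)
    print(np.median(f0[20:]))
```

Frames judged unvoiced are returned as 0 Hz, so the output is a fixed-rate pitch sequence of the kind that could be passed, frame by frame, to an LSTM input layer.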

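The abstract does not detail how the BES fitness value is coupled to the LSTM, so the following NumPy sketch shows only a generic bald eagle search minimiser with its three phases (select, search, and swoop), each followed by greedy replacement, as the algorithm is commonly described. The function name `bes_minimize` and the coefficient defaults (`alpha`, `a`, `R`, `c1`, `c2`) are illustrative assumptions. In the setting of this paper, `fitness` might, hypothetically, be the validation error of a vanilla LSTM trained with the hyperparameters encoded in each eagle's position; here a simple sphere function stands in for it.

```python
import numpy as np


def bes_minimize(fitness, dim, bounds, pop_size=20, iters=100, seed=0,
                 alpha=2.0, a=10.0, R=1.5, c1=2.0, c2=2.0):
    """Minimise `fitness` over a box with a basic bald eagle search (sketch)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    pop = rng.uniform(lo, hi, size=(pop_size, dim))
    fit = np.array([fitness(p) for p in pop])

    def greedy(candidates):
        # Clip to the search box, then keep each candidate only if it
        # improves on the eagle it would replace.
        candidates = np.clip(candidates, lo, hi)
        cand_fit = np.array([fitness(p) for p in candidates])
        better = cand_fit < fit
        pop[better] = candidates[better]
        fit[better] = cand_fit[better]

    def normalise(v):
        return v / np.abs(v).max()

    for _ in range(iters):
        # 1) Select: move around the best eagle, scaled by each eagle's
        #    offset from the population mean.
        best, mean = pop[np.argmin(fit)].copy(), pop.mean(axis=0)
        r = rng.random((pop_size, 1))
        greedy(best + alpha * r * (mean - pop))

        # 2) Search: polar-spiral moves relative to the next eagle and the mean.
        mean = pop.mean(axis=0)
        theta = a * np.pi * rng.random(pop_size)
        rad = theta + R * rng.random(pop_size)
        x = normalise(rad * np.sin(theta))[:, None]
        y = normalise(rad * np.cos(theta))[:, None]
        nxt = np.roll(pop, -1, axis=0)  # P_{i+1}, wrapping at the last eagle
        greedy(pop + y * (pop - nxt) + x * (pop - mean))

        # 3) Swoop: hyperbolic-spiral dive towards the best eagle.
        best, mean = pop[np.argmin(fit)].copy(), pop.mean(axis=0)
        theta = a * np.pi * rng.random(pop_size)
        x1 = normalise(theta * np.sinh(theta))[:, None]
        y1 = normalise(theta * np.cosh(theta))[:, None]
        r = rng.random((pop_size, 1))
        greedy(r * best + x1 * (pop - c1 * mean) + y1 * (pop - c2 * best))

    i = int(np.argmin(fit))
    return pop[i].copy(), float(fit[i])


if __name__ == "__main__":
    sphere = lambda p: float(np.sum(p ** 2))
    x_best, f_best = bes_minimize(sphere, dim=5, bounds=(-5.0, 5.0))
    print(x_best, f_best)
```

Because every phase ends with greedy replacement, the best fitness never worsens between iterations; the cost is three fitness evaluations per eagle per iteration, which becomes significant when each evaluation involves training a network.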

Availability of data and materials

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

Authors S.P., V.K., N.P.J., and G.V.S.R. have contributed equally to the work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

All applicable institutional and/or national guidelines for the care and use of animals were followed.

Informed consent

For this type of study, formal consent is not required.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Premalatha, S., Kumar, V., Jagini, N.P. et al. Development of vanilla LSTM based stuttered speech recognition system using bald eagle search algorithm. SIViP 17, 4077–4086 (2023). https://doi.org/10.1007/s11760-023-02639-3

DOI: https://doi.org/10.1007/s11760-023-02639-3