Abstract

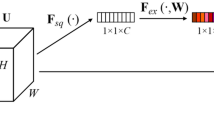

Automatic helmet-wearing detection is an effective way to prevent head injuries among construction site workers. However, current helmet detection algorithms still have shortcomings, such as low accuracy in recognizing small targets and poor adaptability to complex scenes. This paper proposes improvements to YOLOv5 and calls the resulting model BiFPN Detection CBAM YOLOv5 (BDC-YOLOv5). Two modifications are made to address false detections. First, an additional detection layer is added to YOLOv5 so that more detection heads detect targets at different scales, which improves the model's detection ability in complex scenarios. Second, the Bidirectional Feature Pyramid Network (BiFPN) is introduced; its added skip connections fuse shallow semantic features more effectively, which significantly reduces the model's detection error rate. To reduce the missed detection rate, the Convolutional Block Attention Module (CBAM) is added to the original YOLOv5 so that the model focuses more on useful information. Finally, BiFPN, the additional detection layer, and CBAM are combined in YOLOv5, which reduces the model's false detection and missed detection rates while improving its ability to detect small objects. Experimental results on the public Safety-Helmet-Wearing-Dataset (SHWD) show a 2.6% improvement in mean average precision (mAP) over the original YOLOv5, reflecting a significant improvement in the model's target detection capability. A series of comparative experiments against other mainstream algorithms is also carried out to demonstrate the performance of the proposed BDC-YOLOv5 model.
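To make the effect of an extra detection layer concrete, the sketch below counts prediction grids for a given input size. It assumes the standard YOLOv5 defaults (640-pixel input, heads at strides 8, 16 and 32, three anchors per cell) and assumes, hypothetically, that the added layer is a stride-4 (P2) head; the abstract does not say which stride BDC-YOLOv5 actually uses.

```python
from typing import Dict, Sequence

# Default YOLOv5 detection heads operate on strides 8, 16 and 32 (P3-P5).
BASELINE_STRIDES = (8, 16, 32)
# Assumption: the additional layer is a stride-4 (P2) head; the abstract
# does not state which stride BDC-YOLOv5 actually uses.
WITH_P2_STRIDES = (4, 8, 16, 32)


def grid_sizes(img_size: int, strides: Sequence[int]) -> Dict[int, int]:
    """Side length of the square prediction grid produced at each stride."""
    for s in strides:
        if img_size % s != 0:
            raise ValueError(f"input size {img_size} is not divisible by stride {s}")
    return {s: img_size // s for s in strides}


def num_predictions(img_size: int, strides: Sequence[int], anchors_per_cell: int = 3) -> int:
    """Raw box predictions before NMS: grid cells times anchors, summed over heads."""
    return sum(g * g * anchors_per_cell for g in grid_sizes(img_size, strides).values())


def cells_spanned(object_px: float, stride: int) -> float:
    """How many grid cells (per side) a square object of object_px pixels spans."""
    return object_px / stride


if __name__ == "__main__":
    print(grid_sizes(640, WITH_P2_STRIDES))            # {4: 160, 8: 80, 16: 40, 32: 20}
    print(num_predictions(640, BASELINE_STRIDES))       # 25200
    print(num_predictions(640, WITH_P2_STRIDES))        # 102000
    print(cells_spanned(12, 8), cells_spanned(12, 4))   # 1.5 3.0
```

Under these assumptions, a 12-pixel helmet covers only 1.5 cells per side on the finest default head but 3 cells on a stride-4 head, which is the usual argument for finer heads on small targets; the cost is roughly four times as many raw predictions.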
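BiFPN as originally defined in EfficientDet merges its inputs with "fast normalized fusion", O = Σᵢ wᵢ·Iᵢ / (ε + Σⱼ wⱼ) with each wᵢ kept non-negative by a ReLU, and the skip connection is simply one more input (the backbone feature at the same level) to an output node. The sketch below assumes BDC-YOLOv5 keeps this weighted fusion, which the abstract does not confirm; feature maps are flattened to lists, and the example values are hypothetical.

```python
from typing import List, Sequence

# Assumption: BDC-YOLOv5 keeps EfficientDet's fast normalized fusion;
# the abstract only says that BiFPN and its skip connections are introduced.
EPS = 1e-4  # small constant that keeps the denominator away from zero


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def fast_normalized_fusion(
    features: Sequence[Sequence[float]],
    raw_weights: Sequence[float],
    eps: float = EPS,
) -> List[float]:
    """Weighted sum of same-shaped (flattened) maps with ReLU-clamped, normalized weights."""
    if len(features) != len(raw_weights):
        raise ValueError("need exactly one weight per input feature map")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("inputs must already be resized to a common shape")
    weights = [relu(w) for w in raw_weights]  # learnable weights, clamped to >= 0
    norm = eps + sum(weights)
    return [sum(w * f[k] for w, f in zip(weights, features)) / norm for k in range(length)]


if __name__ == "__main__":
    # Hypothetical flattened P4-level maps, already resized to a common shape.
    p4_in = [0.2, 0.4]    # backbone feature: the extra skip-connection input
    p4_td = [0.6, 0.0]    # intermediate top-down node
    p3_down = [1.0, 1.0]  # bottom-up input downsampled from P3
    print(fast_normalized_fusion([p4_in, p4_td, p3_down], [1.0, 1.0, 1.0]))
```

Normalizing by the weight sum rather than a softmax is the design choice EfficientDet made for speed; a negative raw weight simply switches that input off.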
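CBAM, as defined in its original paper (Woo et al., ECCV 2018), applies channel attention and then spatial attention: a shared two-layer MLP scores the average- and max-pooled channel descriptors, and a k×k convolution over channel-wise average and max maps scores each spatial position. The dependency-free sketch below follows that definition; `w0`, `w1` and `kernel` are hypothetical stand-ins for learned parameters (the original uses k = 7), and where BDC-YOLOv5 inserts CBAM is not stated in the abstract. A real model would use a framework such as PyTorch instead of nested lists.

```python
import math
from typing import List

Matrix = List[List[float]]
Tensor3 = List[Matrix]  # feature map indexed as [channel][row][col]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def matvec(w: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def channel_attention(x: Tensor3, w0: Matrix, w1: Matrix) -> List[float]:
    """M_c = sigmoid(MLP(AvgPool(x)) + MLP(MaxPool(x))) with one shared MLP."""
    avg = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(max(r) for r in ch) for ch in x]

    def mlp(v: List[float]) -> List[float]:
        hidden = [max(0.0, h) for h in matvec(w0, v)]  # w0: (C/r) x C, then ReLU
        return matvec(w1, hidden)                       # w1: C x (C/r)

    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(x: Tensor3, kernel: List[Matrix]) -> Matrix:
    """M_s = sigmoid(conv_kxk([avg over channels; max over channels])), zero padding."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(x[ch][i][j] for ch in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(x[ch][i][j] for ch in range(c)) for j in range(w)] for i in range(h)]
    pooled = [avg, mx]     # 2 x H x W descriptor
    k = len(kernel[0])     # kernel shape: 2 x k x k, k odd
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for p in range(2):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            s += kernel[p][di][dj] * pooled[p][ii][jj]
            out[i][j] = sigmoid(s)
    return out


def cbam(x: Tensor3, w0: Matrix, w1: Matrix, kernel: List[Matrix]) -> Tensor3:
    """Sequential CBAM: reweight channels first, then reweight spatial positions."""
    mc = channel_attention(x, w0, w1)
    refined = [[[mc[ch] * v for v in row] for row in x[ch]] for ch in range(len(x))]
    ms = spatial_attention(refined, kernel)
    h, w = len(ms), len(ms[0])
    return [[[ms[i][j] * refined[ch][i][j] for j in range(w)] for i in range(h)]
            for ch in range(len(refined))]
```

Because the spatial map is computed from the channel-refined features, channels that the first stage suppresses contribute little to where the second stage attends.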
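The abstract reports mAP without stating the IoU threshold or interpolation scheme. As a reference point only, the sketch below computes single-threshold AP with all-point interpolation (the PASCAL VOC 2010+ convention), assuming each detection has already been labelled true or false positive by IoU matching; mAP is then the unweighted mean over classes. YOLOv5's own evaluation code may differ in details such as interpolation, so values from this sketch are not directly comparable with the paper's figures.

```python
from typing import Dict, List, Sequence, Tuple


def pr_curve(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> Tuple[List[float], List[float]]:
    """Cumulative recall and precision after ranking detections by confidence."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall: List[float] = []
    precision: List[float] = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    return recall, precision


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope."""
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Make precision non-increasing from right to left (the "envelope").
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum(
        (mrec[i + 1] - mrec[i]) * mpre[i + 1]
        for i in range(len(mrec) - 1)
        if mrec[i + 1] != mrec[i]
    )


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


if __name__ == "__main__":
    rec, prec = pr_curve([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
    print(average_precision(rec, prec))  # approximately 5/6
```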

Data availability

The public dataset SHWD is available at https://github.com/njvisionpower/Safety-Helmet-Wearing-Dataset (accessed on 17 December 2019).

Funding

This work has been supported by the National Natural Science Foundation of China (62166042, U2003207), Natural Science Foundation of Xinjiang, China (2021D01C076), and Strengthening Plan of National Defense Science and Technology Foundation of China (2021-JCJQ-JJ-0059).

Author information

Contributions

Conceptualization, LZ and TT; data curation, LZ and AH; formal analysis, LZ and TT; methodology, LZ and TT; experimentation, LZ; writing (original draft), LZ; writing (review and editing), TT and AH. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhao, L., Tohti, T. & Hamdulla, A. BDC-YOLOv5: a helmet detection model employs improved YOLOv5. SIViP 17, 4435–4445 (2023). https://doi.org/10.1007/s11760-023-02677-x

DOI: https://doi.org/10.1007/s11760-023-02677-x