Abstract

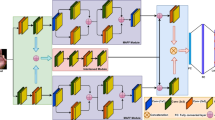

Gesture recognition, one of the most intuitive and natural ways for humans to communicate with computers, is widely used in human–computer interaction applications. However, it remains a challenging problem because of interference such as varied backgrounds, hand-like objects, and lighting changes. In this article, a lightweight static gesture recognition network, named PEA-YOLO, is proposed. The network adopts the idea of an adaptive spatial feature pyramid and combines an attention mechanism with a multi-path feature fusion method to improve the localization and recognition of gesture features. First, an Efficient Channel Attention module is added after the backbone network to focus the model's attention on the gesture. Second, the Feature Pyramid Network is replaced by a Path Aggregation Network to localize the gesture better. Finally, an Adaptive Spatial Feature Fusion module is added before the YOLO head to further reduce the false detection rate in gesture recognition. Experiments conducted on the OUHANDS and NUSII datasets show that PEA-YOLO achieves favorable performance in static gesture recognition with only 8.57 M parameters. Compared with other state-of-the-art methods, the proposed lightweight network obtains the highest accuracy while running at high speed with few parameters.
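To make the first step more concrete, the following is a minimal pure-Python sketch of channel attention in the style usually described for Efficient Channel Attention: each channel is reduced by global average pooling, a small 1D convolution mixes neighboring channel descriptors, and a sigmoid gate rescales the channels. The function name `eca_gate`, the kernel values, and the toy feature map are assumptions made for illustration; the abstract does not state the kernel size, the framework, or any other implementation detail, so this should not be read as the authors' code.

```python
import math


def eca_gate(feature_map, kernel):
    """Rescale channels with an ECA-style gate (illustrative sketch only).

    feature_map: list of C channels, each an H x W list of lists of floats.
    kernel: odd-length list of 1D convolution weights (hypothetical values).
    Returns (scaled_feature_map, channel_weights).
    """
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")

    channels = len(feature_map)

    # Global average pooling: one scalar descriptor per channel.
    pooled = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]

    # 1D convolution across the channel axis with zero padding,
    # so each channel interacts only with its nearest neighbors.
    pad = len(kernel) // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    logits = [
        sum(kernel[j] * padded[i + j] for j in range(len(kernel)))
        for i in range(channels)
    ]

    # Sigmoid turns each logit into a weight in (0, 1).
    weights = [1.0 / (1.0 + math.exp(-z)) for z in logits]

    # Multiply every value in a channel by that channel's weight.
    scaled = [
        [[w * v for v in row] for row in ch]
        for w, ch in zip(weights, feature_map)
    ]
    return scaled, weights


# Toy input: 3 channels of size 2 x 2 (assumed values).
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
    [[3.0, 3.0], [3.0, 3.0]],
]
scaled, weights = eca_gate(fmap, kernel=[0.25, 0.5, 0.25])
print(weights)
```

The gate adds only as many parameters as the kernel has entries, which is consistent with the lightweight goal stated above; in a trained network the kernel weights would be learned rather than fixed by hand.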

Data availability

The data and materials that support the findings of this study are available on request from the authors.

Funding

This research was funded in part by the State Key Laboratory of ASIC & System (2021KF010) and the National Natural Science Foundation of China (Grant No. 61404083).

Author information

Contributions

WZ contributed to conceptualization, methodology, resources, supervision, writing (review and editing), and project administration. XL contributed to methodology, software, validation, formal analysis, investigation, data curation, writing (original draft), and visualization.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

About this article

Cite this article

Zhou, W., Li, X. PEA-YOLO: a lightweight network for static gesture recognition combining multiscale and attention mechanisms. SIViP 18, 597–605 (2024). https://doi.org/10.1007/s11760-023-02755-0

DOI: https://doi.org/10.1007/s11760-023-02755-0