Abstract

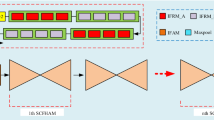

Traditional multi-person human pose estimation methods suffer from poor real-time performance, low recognition efficiency, and a large number of parameters. In this paper, we propose SP-YOLO, a lightweight network for real-time human pose estimation built on the YOLO-Pose algorithm. First, we propose a lightweight ghost spatial pyramid pooling-fast (GSPPF) module for the CSPDarkNet backbone. It replaces standard convolution with GhostNet modules and performs max pooling layer by layer, which speeds up the backbone. An ultra-lightweight attention mechanism, the shuffle attention (SA) module, is also inserted between layers. Second, we enhance the neck with a more efficient slim path aggregation network (Slim-PAN) module that optimizes the input relationships in the pyramid feature-enhancement structure. We also refine the loss function so that it assigns different weights to human pose detection and keypoint detection. Finally, we evaluate SP-YOLO through dataset benchmarks and real-time detection experiments. Compared with several other methods on the COCO2017-Keypoints dataset, SP-YOLO improves the average precision (AP) by 1.5% over the original YOLO-Pose while using 7.9 M fewer parameters. The real-time detection experiments show that the proposed method is effective for end-to-end real-time human pose detection.
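
The GSPPF module rests on two mechanisms: a Ghost module, which generates part of its output channels with cheap depthwise operations instead of a full convolution, and SPPF-style pooling, where chaining stride-1 max pools reproduces larger pooling windows. The plain-Python sketch below illustrates both under stated assumptions. The function names are hypothetical, and the Ghost ratio s = 2, cheap-kernel size d = 3, and pooling kernel k = 5 are common GhostNet/YOLOv5 defaults, not settings confirmed for SP-YOLO. It is an illustration, not the authors' implementation.

```python
"""Illustrative sketch of the two ideas behind a GSPPF-style block.

Assumed defaults (not confirmed for SP-YOLO): Ghost ratio s=2,
cheap depthwise kernel d=3, SPPF pooling kernel k=5.
"""


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weight count of a Ghost module with c_out output channels (bias ignored).

    A primary k x k convolution yields m = c_out // s intrinsic maps; cheap
    d x d depthwise filters then generate the other (s - 1) * m ghost maps.
    """
    m = c_out // s
    primary = c_in * m * k * k
    cheap = (s - 1) * m * d * d  # one d x d filter per generated ghost map
    return primary + cheap


def max_pool_1d(x, k):
    """Stride-1 max pooling with 'same' output length.

    Windows are clipped at the borders, equivalent to padding with -inf.
    """
    r = k // 2
    n = len(x)
    return [max(x[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def max_pool_2d(img, k):
    """Stride-1 k x k max pooling on a 2-D list of numbers.

    A square max filter is separable: pool every row, then every column.
    """
    rows = [max_pool_1d(row, k) for row in img]
    cols = [max_pool_1d(list(col), k) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def sppf_branches(img, k=5):
    """Return the input plus three chained k x k poolings, as in SPPF.

    In a real network these four maps are concatenated along the channel axis
    and fused by a convolution (a Ghost module in a GSPPF-style design).
    """
    y1 = max_pool_2d(img, k)
    y2 = max_pool_2d(y1, k)
    y3 = max_pool_2d(y2, k)
    return [img, y1, y2, y3]


if __name__ == "__main__":
    print(conv_params(256, 256, 3), ghost_params(256, 256, 3))  # 589824 296064

    # Deterministic toy feature map (one channel, 16 x 16).
    img = [[(7 * r + 3 * c) % 11 for c in range(16)] for r in range(16)]
    _, y1, y2, y3 = sppf_branches(img)
    # Chained 5x5 pools reproduce the 9x9 and 13x13 windows of classic SPP.
    assert y2 == max_pool_2d(img, 9)
    assert y3 == max_pool_2d(img, 13)
    print("sequential pooling matches larger kernels")
```

For a 3 × 3 layer mapping 256 to 256 channels, the Ghost variant needs roughly 1/s of the weights of a standard convolution. The pooling check shows why SPPF can replace parallel 5, 9, and 13 pooling kernels with one cheaper sequential chain of 5 × 5 pools.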

Data availability

The data used in this study come from a publicly available dataset.

References

Li, Y., Jia, S., Li, Q.: BalanceHRNet: an effective network for bottom-up human pose estimation. Neural Netw. 161, 297–305 (2023). https://doi.org/10.1016/j.neunet.2023.01.036

Miki, D., Abe, S., Chen, S., et al.: Robust human pose estimation from distorted wide-angle images through iterative search of transformation parameters. SIViP 14, 693–700 (2020). https://doi.org/10.1007/s11760-019-01602-5

Lu, H., Shao, X., Xiao, Y.: Pose estimation with segmentation consistency. IEEE Trans. Image Process. 22(10), 4040–4048 (2013). https://doi.org/10.1109/TIP.2013.2268975

Wan, T., Luo, Y., Zhang, Z., et al.: TSNet: tree structure network for human pose estimation. SIViP 16, 551–558 (2022). https://doi.org/10.1007/s11760-021-01999-y

Ke, X., Liu, T., Li, Z.: Human attribute recognition method based on pose estimation and multiple-feature fusion. SIViP 14, 1441–1449 (2020). https://doi.org/10.1007/s11760-020-01690-8

Dayarathna, T., Muthukumarana, T., Rathnayaka, Y., Denman, S., de Silva, C., Pemasiri, A., Ahmedt-Aristizabal, D.: Privacy-preserving in-bed pose monitoring: a fusion and reconstruction study. Expert Syst. Appl. 213, 119139 (2023). https://doi.org/10.1016/j.eswa.2022.119139

Gomes, M.E.N., Macêdo, D., Zanchettin, C., De-Mattos-Neto, P.S.G., Oliveira, A.: Multi-human fall detection and localization in videos. Comput. Vision Image Understand. 220, 103442 (2022). https://doi.org/10.1016/j.cviu.2022.103442

Cao, D., Liu, W., Xing, W., et al.: Human pose estimation based on feature enhancement and multi-scale feature fusion. SIViP 17, 643–650 (2023). https://doi.org/10.1007/s11760-022-02271-7

Xiao, B., Wu, H., Wei, Y.: Simple baselines for human pose estimation and tracking. In: The European conference on computer vision (ECCV), (2018). https://doi.org/10.48550/arXiv.1804.06208

Chen, Y., Wang, Z., Peng, Y., Zhang, Z., Yu, G., Sun, J.: Cascaded pyramid network for multi-person pose estimation. In: The IEEE conference on computer vision and pattern recognition (CVPR), (2018). https://doi.org/10.48550/arXiv.1711.07319

Sun, K., Xiao, B., Liu, D., Wang, J.: Deep high-resolution representation learning for human pose estimation. In: The IEEE conference on computer vision and pattern recognition (CVPR), (2019). https://doi.org/10.48550/arXiv.1902.09212

Cheng, B., Xiao, B., Wang, J., Shi, H., Huang, T.S., Zhang, L.: HigherHRNet: scale-aware representation learning for bottom-up human pose estimation. In: The IEEE conference on computer vision and pattern recognition (CVPR), (2020). https://doi.org/10.48550/arXiv.1908.10357

Pishchulin, L., Insafutdinov, E., Tang, S., Andres, B., Andriluka, M., Gehler, P.V., Schiele, B.: DeepCut: joint subset partition and labeling for multi person pose estimation. In: The IEEE conference on computer vision and pattern recognition (CVPR), (2016), pp. 4929–4937. https://doi.org/10.1109/CVPR.2016.533

Insafutdinov, E., Pishchulin, L., Andres, B., Andriluka, M., Schiele, B.: DeeperCut: a deeper, stronger, and faster multi-person pose estimation model. In: The European conference on computer vision (ECCV), (2016). https://doi.org/10.1007/978-3-319-46466-4_3

Cao, Z., Hidalgo, G., Simon, T., Wei, S.E., Sheikh, Y.: OpenPose: realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 43(1), 172–186 (2021). https://doi.org/10.1109/TPAMI.2019.2929257

Yang, S., Feng, Z., Wang, Z., Li, Y., Zhang, S., Quan, Z., Xia, S., Yang, W.: Detecting and grouping keypoints for multi-person pose estimation using instance-aware attention. Pattern Recogn. 136, 109232 (2023). https://doi.org/10.1016/j.patcog.2022.109232

Xu, Y., Piao, Z., Zhang, Z., Liu, W., Gao, S.: SUNNet: a novel framework for simultaneous human parsing and pose estimation. Neurocomputing 444, 349–355 (2021). https://doi.org/10.1016/j.neucom.2020.01.123

Lamas, A., Tabik, S., Montes, A.C., Pérez-Hernández, F., García, J., Olmos, R., Herrera, F.: Human pose estimation for mitigating false negatives in weapon detection in video-surveillance. Neurocomputing 489, 488–503 (2022). https://doi.org/10.1016/j.neucom.2021.12.059

Hou, Q., Zhou, D., Feng, J.: Coordinate attention for efficient mobile network design. In: 2021 IEEE/CVF conference on computer vision and pattern recognition (CVPR), Nashville, TN, USA, pp. 13708–13717, (2021). https://doi.org/10.1109/CVPR46437.2021.01350

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: IEEE conference on computer vision and pattern recognition (CVPR), (2018). https://doi.org/10.48550/arXiv.1709.01507

Woo, S., Park, J., Lee, J.Y., Kweon, I.S.: CBAM: convolutional block attention module. In: The European conference on computer vision (ECCV), (2018). https://doi.org/10.1007/978-3-030-01234-2_1

Zhang, Q., Yang, Y.: SA-Net: shuffle attention for deep convolutional neural networks. arXiv preprint, (2021). https://doi.org/10.48550/arXiv.2102.00240

Jiang, C., Huang, K., Zhang, S., Wang, X., Xiao, J., Goulermas, Y.: Aggregated pyramid gating network for human pose estimation without pre-training. Pattern Recogn. 138, 109429 (2023). https://doi.org/10.1016/j.patcog.2023.109429

Zhong, F., Li, M., Zhang, K., Hu, J., Liu, L.: DSPNet: a low computational-cost network for human pose estimation. Neurocomputing 423, 327–335 (2021). https://doi.org/10.1016/j.neucom.2020.11.003

Maji, D., Nagori, S., Mathew, M., Poddar, D.: YOLO-Pose: enhancing YOLO for multi-person pose estimation using object keypoint similarity loss. arXiv preprint, (2022). https://doi.org/10.48550/arXiv.2204.06806

Han, K., Wang, Y., Tian, Q., Guo, J., Xu, C., Xu, C.: GhostNet: more features from cheap operations. In: IEEE conference on computer vision and pattern recognition (CVPR), (2020). https://doi.org/10.48550/arXiv.1911.11907

Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft COCO: common objects in context. In: The European conference on computer vision (ECCV), vol. 8693, pp. 740–755, (2014)

Newell, A., Yang, K., Deng, J.: Stacked hourglass networks for human pose estimation. In: The European conference on computer vision (ECCV), (2016). https://doi.org/10.48550/arXiv.1603.06937

Geng, Z., Sun, K., Xiao, B., Zhang, Z., Wang, J.: Bottom-up human pose estimation via disentangled keypoint regression. In: The IEEE conference on computer vision and pattern recognition (CVPR), (2021). https://doi.org/10.48550/arXiv.2104.02300

Osokin, D.: Real-time 2D multi-person pose estimation on CPU: lightweight OpenPose. arXiv preprint, (2018). https://doi.org/10.48550/arXiv.1811.12004

Funding

The authors wish to express their gratitude to the National Ministry of Science and Technology Innovation Method Special (2020IM020700).

Author information

Contributions

The first draft of the manuscript was written by YZ and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, Y., Wang, Z., Li, M. et al. SP-YOLO: an end-to-end lightweight network for real-time human pose estimation. SIViP 18, 863–876 (2024). https://doi.org/10.1007/s11760-023-02812-8

DOI: https://doi.org/10.1007/s11760-023-02812-8