Abstract

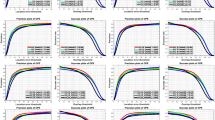

Multitask learning that combines visual object tracking with other computer vision tasks has received increasing attention from researchers. Among such methods, the SiamMask algorithm accomplishes both object tracking and object segmentation by using a Siamese backbone network and a three-branch regression head. The mask refinement branch is the core innovation of SiamMask; it hierarchically integrates the features of the search region and the tracking correlation score maps. However, SiamMask and its subsequent improved algorithms do not fully integrate the target semantic information contained in multi-scale features into the mask refinement branch. To address this problem, a module named the inverted residual attention block is proposed, which combines the inverted residual structure with a channel attention mechanism. By assigning weights to the feature channels output by different convolution kernels, the channel attention mechanism enhances the key information of the object and suppresses background noise, thereby better handling the motion and deformation of the tracked object. Based on the proposed module and a spatial attention mechanism, a novel multi-scale fusion method for the features of the search region and the tracking correlation score maps is proposed. The spatial attention mechanism helps the network focus on the region where the object is located and reduces its sensitivity to background interference, thus improving the accuracy and stability of tracking. Ablation experiments conducted with the same hardware and datasets show that the proposed improvements to the mask refinement branch are effective. Compared with the baseline SiamMask, the proposed method achieves comparable segmentation results on the DAVIS datasets at a higher speed, and the expected average overlap on VOT-2018 increases by 3.7%. The total number of parameters is reduced by 6.6%, including a 53.2% reduction in the number of parameters in the mask refinement branch.
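To make the channel attention idea in the abstract concrete, the following is a minimal sketch of a squeeze-and-excitation style channel weighting in plain Python. It is not the authors' inverted residual attention block: the inverted residual convolutions are omitted, and the function name `channel_attention`, the nested-list feature layout `features[c][h][w]`, and the weight matrices `w1` and `w2` are all assumptions made for illustration.

```python
import math


def channel_attention(features, w1, w2):
    """Reweight channels of a feature map stored as features[c][h][w].

    Illustrative assumption: a squeeze-and-excitation style gate with
    two fully connected layers (w1: hidden x channels, w2: channels x hidden).
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in features
    ]
    # Excitation, layer 1: linear map followed by ReLU.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed)))
        for row in w1
    ]
    # Excitation, layer 2: linear map followed by a sigmoid, so each
    # channel receives a weight between 0 and 1.
    weights = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's weight.
    scaled = [
        [[value * weight for value in row] for row in channel]
        for channel, weight in zip(features, weights)
    ]
    return scaled, weights


if __name__ == "__main__":
    # Hypothetical 2-channel, 2x2 feature map and hand-picked weights.
    demo_features = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
    demo_w1 = [[1.0, 0.0]]      # 1 hidden unit, 2 input channels
    demo_w2 = [[0.0], [100.0]]  # 2 output channels, 1 hidden unit
    _, demo_weights = channel_attention(demo_features, demo_w1, demo_w2)
    print(demo_weights)
```

In a trained network the two weight matrices would be learned, so channels that respond to the target would tend to receive weights near 1 and channels dominated by background would be attenuated; the hand-picked values here only demonstrate the mechanics.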

Code availability

Code will be available on request.

Funding

This work was supported by the talent introduction project of Xihua University under Grant No. Z212028.

Author information

Contributions

XB was involved in conceptualization, methodology, software and experiments, writing—original draft preparation and editing. CG helped in conceptualization, methodology, writing—review and editing.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bian, X., Guo, C. SiamMaskAttn: inverted residual attention block fusing multi-scale feature information for multitask visual object tracking networks. SIViP 18, 1305–1316 (2024). https://doi.org/10.1007/s11760-023-02827-1

DOI: https://doi.org/10.1007/s11760-023-02827-1