Abstract

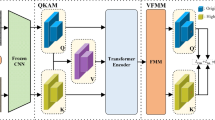

With the increasing of multi-source heterogeneous data, flexible retrieval across different modalities is an urgent demand in industrial applications. To allow users to control the retrieval results, a novel fabric image retrieval method is proposed in this paper based on multi-modal feature fusion. First, the image feature is extracted using the modified pre-trained convolutional neural network to separate macroscopic and fine-grained features, which are then selected and aggregated by the multi-layer perception. The feature of the modification text is extracted by long short-term memory networks. Subsequently, the two features are fused in a visual-semantic joint embedding space by gated and residual structures to control the selective expression of separable image features. To validate the proposed scheme, a fabric image database for multi-modal retrieval is created as the benchmark. Qualitative and quantitative experiments indicate that the proposed method is practicable and effective, which can be extended to other similar industrial fields, like wood and wallpaper.

Similar content being viewed by others

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Tamura, H., Yokoya, N.: Image database systems: a survey. Pattern Recogn. 17, 29–43 (1984)

Zhang, N., Xiang, J., Wang, L., Pan, R.: Research progress of content-based fabric image retrieval. Text. Res. J. 93, 1401–1418 (2023)

Hosseini, S.S., Yamaghani, M.R., Poorzaker Arabani, S.: Multi-modal modelling of human emotion using sound, image and text fusion. Signal Image Video P (2023). https://doi.org/10.1007/s11760-023-02707-8

Dhiman, G., Kumar, A.V., Nirmalan, R., Sujitha, S., Srihari, K., Yuvaraj, N., et al.: Multi-modal active learning with deep reinforcement learning for target feature extraction in multi-media image processing applications. Multimed. Tools Appl. 82, 5343–5367 (2023)

Han, X., Wu, Z., Huang, P.X., Zhang, X., Zhu, M., Li, Y., et al.: Automatic spatially-aware fashion concept discovery. In: 2017 IEEE International Conference on Computer Vision (ICCV). pp. 1472–1480 (2017)

Vo, N., Jiang, L., Sun, C., Murphy, K., Li, L.J., Fei-Fei, L., et al.: Composing text and image for image retrieval - an empirical odyssey. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 6432–6441 (2019)

Zhen, L., Hu, P., Wang, X., Peng, D.: Deep supervised cross-modal retrieval. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 10386–10395 (2019)

Yuan, L., Chen, Y., Wang, T., Yu, W., Shi, Y., Jiang, Z. et al.: Tokens-to-token vit: Training vision transformers from scratch on imagenet. arXiv preprint arXiv: 2101.11986 (2021)

Graham, B., El-Nouby, A., Touvron, H., Stock, P., Joulin, A., Jégou, H. et al.: Levit: a vision transformer in convnet’s clothing for faster inference. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). pp. 12239–12249 (2021)

Xie, L., Wang, J., Zhang, B., Tian, Q.: Fine-grained image search. IEEE T Multimed. 17, 636–647 (2015)

Zhang, N., Xiang, J., Wang, L., Gao, W., Pan, R.: Image retrieval of wool fabric. Part i: based on low-level texture features. Text. Res. J. 89, 4195–4207 (2019)

Zhang, N., Xiang, J., Wang, L., Xiong, N., Gao, W., Pan, R.: Image retrieval of wool fabric. Part ii: based on low-level color features. Text. Res. J. 90, 797–808 (2020)

Xiang, J., Zhang, N., Pan, R., Gao, W.: Fabric retrieval based on multi-task learning. IEEE T Image Process. 30, 1570–1582 (2021)

Yu Y., L.S., Choi Y., Kim G.: Curlingnet: Compositional learning between images and text for fashion iq data. arXiv preprint arXiv: 2003.12299 (2020)

Wu, Y., Wang, S., Song, G., Huang, Q.: Online asymmetric metric learning with multi-layer similarity aggregation for cross-modal retrieval. IEEE T Image Process. 28, 4299–4312 (2019)

Zhao, B., Feng, J., Wu, X., Yan, S.: Memory-augmented attribute manipulation networks for interactive fashion search. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 6156–6164 (2017)

Wei, X.-S., Luo, J.-H., Wu, J., Zhou, Z.-H.: Selective convolutional descriptor aggregation for fine-grained image retrieval. IEEE T. Imag. Proc. 26, 2868–2881 (2017)

Feng, F., Wang, X., Li, R., Ahmad, I.: Correspondence autoencoders for cross-modal retrieval. ACM Trans. Multimed. Comput. Commun. Appl. 12, 26 (2015)

Liu, H., Feng, Y., Zhou, M., Qiang, B.: Semantic ranking structure preserving for cross-modal retrieval. Appl. Intell. 51, 1802–1812 (2021)

Peng, Y., Qi, J.: Cm-gans: cross-modal generative adversarial networks for common representation learning. ACM Trans. Multimed. Comput. Commun. Appl. 15, 22 (2019)

Anwaar, M.U., Labintcev, E., Kleinsteuber, M.: Compositional learning of image-text query for image retrieval. In: 2021 IEEE Winter Conference on Applications of Computer Vision (WACV). pp. 1139–1148 (2021)

Zhao, Y., Song, Y., Jin, Q.: Progressive learning for image retrieval with hybrid-modality queries, In: Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Association for Computing Machinery, Madrid, Spain, 2022, pp. 1012–1021.

Tian, Y., Newsam, S., Boakye, K.: Fashion image retrieval with text feedback by additive attention compositional learning. In: 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). pp. 1011–1021 (2023)

Yang, Q., Ye, M., Cai, Z., Su, K., Du, B.: Composed image retrieval via cross relation network with hierarchical aggregation transformer. IEEE T Image Process 32, 4543–4554 (2023)

Matkowski, W.M., Chai, T., Kong, A.W.K.: Palmprint recognition in uncontrolled and uncooperative environment. IEEE T Inf Foren Sec 15, 1601–1615 (2020)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arxiv:1409.1556 (2014)

Antol, S., Agrawal, A., Lu, J., Mitchell, M., Batra, D., Zitnick, C.L., et al.: Vqa: visual question answering. In: 2015 IEEE International Conference on Computer Vision (ICCV). pp. 2425–2433 (2015)

Xiong, R., Wang, Y., Tang, P., Cooke, N.J., Ligda, S.V., Lieber, C.S., et al.: Predicting separation errors of air traffic controllers through integrated sequence analysis of multimodal behaviour indicators. Adv. Eng. Inform. 55, 101894 (2023)

Funding

This work was supported by National Natural Science Foundation of China (No.62202202), the Fundamental Research Funds for the Central Universities (No.JUSRP123001) and Applied Research Project of Public Welfare Technology of Zhejiang Province (No.LGG21F030007).

Author information

Authors and Affiliations

Contributions

Conceptualization: Ning Zhang, Yixin Liu; Methodology: Ning Zhang, Jun Xiang; Formal analysis and investigation: Yixin Liu, Jun Xiang; Writing—original draft preparation: Ning Zhang; Writing—review and editing: Yixin Liu, Ruru Pan; Supervision: Ruru Pan. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, N., Liu, Y., Li, Z. et al. Fabric image retrieval based on multi-modal feature fusion. SIViP 18, 2207–2217 (2024). https://doi.org/10.1007/s11760-023-02889-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-023-02889-1