Abstract

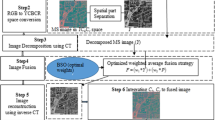

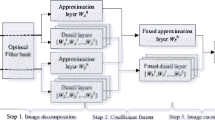

This paper proposes a multi-modal image fusion algorithm built on an image filter guided by local extrema maps. The input image is smoothed with the local extrema map-guided image filter; the smoothed image serves as the base layer, and its difference from the original image forms the detail layer. To preserve image details, both the base and detail layers are then reduced in resolution, establishing a multi-scale decomposition hierarchy. The base layer image at each level is decomposed by wavelet transform into low-frequency and high-frequency coefficients. The low-frequency components are fused with a region-energy rule whose weights are allocated adaptively from spatial and gradient information, while the high-frequency components are fused with the AGPCNN rule, with the neuron weight assignment initialized. The detail layers are fused with a weighted averaging rule. The fused base layer is then reconstructed by inverse wavelet transform. When the base and detail layers are combined, the bottom layer uses the absolute-maximum principle and the top layer uses a weighted average rule. Subjective and objective analysis of the experimental results indicates that the proposed algorithm not only preserves edge and texture details effectively but also maintains good color fidelity and controls spectral distortion. In comparison with eight other advanced fusion algorithms, it demonstrates superior fusion performance.
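The base/detail split and the two simplest fusion rules named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: a plain box filter stands in for the local extrema map-guided filter, the wavelet-domain region-energy and AGPCNN rules are replaced by a simple weighted average, only a single scale is shown, and all function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]


def box_smooth(img: Image, radius: int = 1) -> Image:
    """Mean filter with clamped borders.

    ASSUMPTION: stand-in for the local extrema map-guided filter,
    which the abstract names but does not specify.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def decompose(img: Image) -> Tuple[Image, Image]:
    """Base layer = smoothed image; detail layer = original minus base."""
    base = box_smooth(img)
    detail = [[p - b for p, b in zip(rp, rb)] for rp, rb in zip(img, base)]
    return base, detail


def fuse_weighted(a: Image, b: Image, w: float = 0.5) -> Image:
    """Weighted averaging rule: w * a + (1 - w) * b per pixel."""
    return [[w * pa + (1.0 - w) * pb for pa, pb in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def fuse_abs_max(a: Image, b: Image) -> Image:
    """Absolute-maximum rule: keep the value with the larger magnitude."""
    return [[pa if abs(pa) >= abs(pb) else pb for pa, pb in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def fuse_single_scale(img_a: Image, img_b: Image) -> Image:
    """Fuse two registered, same-size images at a single scale.

    ASSUMPTION: the base layers are averaged here as a placeholder for
    the wavelet-domain rules; detail layers use weighted averaging.
    """
    base_a, detail_a = decompose(img_a)
    base_b, detail_b = decompose(img_b)
    base = fuse_weighted(base_a, base_b)
    detail = fuse_weighted(detail_a, detail_b)
    return [[b + d for b, d in zip(rb, rd)] for rb, rd in zip(base, detail)]
```

Because the detail layer is defined as the residual of the smoothing step, adding the base and detail layers back together recovers the input, whichever smoothing filter is used. Swapping `fuse_weighted` for `fuse_abs_max` on the detail layers favors the stronger local structure from either source rather than blending the two.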

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgements

This work was sponsored by the Natural Science Foundation of Xinjiang Uygur Autonomous Region (Grant No. 2022D01C425) and the Postgraduate Course Scientific Research Project of Xinjiang University (Grant No. XJDX2022YALK11).

Author information

Contributions

M.S. and X.Z. made significant contributions to conceptualization and methodology. M.S., X.Z. and Y.N. were involved in the software development and validation. M.S. and X.Z. actively participated in the writing of the original draft. X.Z., M.S. and Y.L. made substantial contributions to the writing, reviewing, and editing process. X.Z. provided research materials. X.Z. played a crucial role in supervision and funding acquisition. All authors critically reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that there are no conflicts of interest regarding the publication of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sun, M., Zhu, X., Niu, Y. et al. Multi-modal remote sensing image fusion method guided by local extremum maps-guided image filter. SIViP 18, 4375–4383 (2024). https://doi.org/10.1007/s11760-024-03079-3

DOI: https://doi.org/10.1007/s11760-024-03079-3