Abstract

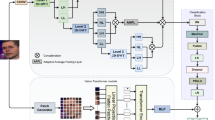

Face manipulation is the process of modifying facial features in videos or images to produce a variety of artistic or deceptive effects. Face manipulation detection aims to identify altered or falsified visual media, distinguishing real from fake facial images or videos. The sophistication of current techniques, particularly DeepFake technology, makes such manipulation difficult to detect. This paper presents an efficient framework based on hybrid learning and Kernel Principal Component Analysis (KPCA) to extract richer and more refined features of face manipulation. The proposed method uses the EfficientNetV2-L model for feature extraction, followed by KPCA for feature dimensionality reduction, to distinguish between real and fake facial images. It is robust to various facial manipulation techniques, including identity swap, expression swap, attribute-based manipulation, and entirely synthesized faces. Data augmentation is used to address the class imbalance present in the dataset. The proposed method requires less execution time while achieving an accuracy of 99.3% and an F1 score of 0.98 on the Diverse Fake Face Dataset (DFFD).
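The dimensionality-reduction step mentioned in the abstract can be illustrated with a minimal NumPy sketch of kernel PCA. This is not the authors' implementation: the RBF kernel, the value of `gamma`, the number of components, and the helper names `rbf_kernel`, `fit_kpca`, and `transform_kpca` are all assumptions made for illustration, and the synthetic 16-dimensional vectors merely stand in for the features an EfficientNetV2-L backbone would produce.

```python
import numpy as np


def rbf_kernel(X, Y, gamma):
    """Gaussian (RBF) kernel matrix between the rows of X and the rows of Y."""
    sq_dist = (
        (X ** 2).sum(axis=1)[:, None]
        + (Y ** 2).sum(axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))


def fit_kpca(X, n_components, gamma):
    """Fit kernel PCA on the training features X (hypothetical helper)."""
    n = X.shape[0]
    K = rbf_kernel(X, X, gamma)
    # Center the kernel matrix in feature space.
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(K_centered)
    top = np.argsort(eigvals)[::-1][:n_components]
    # Scale eigenvectors so that projections equal eigvecs * sqrt(eigvals).
    alphas = eigvecs[:, top] / np.sqrt(eigvals[top])
    return {"X": X, "K": K, "alphas": alphas, "gamma": gamma}


def transform_kpca(model, X_new):
    """Project new feature vectors onto the fitted kernel components."""
    K = model["K"]
    K_new = rbf_kernel(X_new, model["X"], model["gamma"])
    # Center the new kernel rows using statistics of the training kernel.
    K_new_centered = (
        K_new
        - K_new.mean(axis=1, keepdims=True)
        - K.mean(axis=0)[None, :]
        + K.mean()
    )
    return K_new_centered @ model["alphas"]


# Synthetic stand-ins for deep features of real and fake faces (assumption:
# real embeddings from EfficientNetV2-L would be much higher-dimensional).
rng = np.random.default_rng(0)
real = rng.normal(0.0, 1.0, size=(20, 16))
fake = rng.normal(2.0, 1.0, size=(20, 16))
features = np.vstack([real, fake])

model = fit_kpca(features, n_components=4, gamma=1.0 / 32)
reduced = transform_kpca(model, features)
print(reduced.shape)
```

In a full pipeline, the reduced vectors would be passed to a downstream classifier; the choice of kernel and the number of retained components would need to be tuned on validation data.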

Availability of data and materials

The dataset analyzed during the study (DFFD) is available at https://cvlab.cse.msu.edu/project-ffd.html.

Notes

Deepfakes: https://github.com/deepfakes/faceswap.

FaceApp: https://faceapp.com/app.

Funding

No funding was obtained for this study.

Author information

Contributions

Rahul Thakur: Conceptualization, Methodology, Validation, Writing (Original Draft), Visualization, Formal Analysis. Rajesh Rohilla: Formal Analysis, Resources, Writing (Review & Editing), Supervision.

Ethics declarations

Ethical approval

Not applicable for this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Thakur, R., Rohilla, R. An effective framework based on hybrid learning and kernel principal component analysis for face manipulation detection. SIViP 18, 4811–4820 (2024). https://doi.org/10.1007/s11760-024-03117-0

DOI: https://doi.org/10.1007/s11760-024-03117-0