Abstract

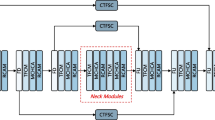

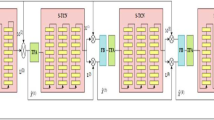

Speech enhancement is a fundamental task for acoustic signal processing, which is still an unsolved challenge. Recently, with the rapid development of deep learning, data-driven approaches based on a variety of different modules in machine learning have made great progress in speech enhancement. Each of these basic modules have unique advantages as well as certain limitations. Inspired by the blocks’ unique preferences and the distinguishing feature of speech signals, we proposed a multi-stage strength estimation network with cross-attention for single-channel speech enhancement in this paper. The proposed method consists of a feature-wised fusion block using the attention mechanism and the strength estimation block using FFT and sequential representations (FTB). We first describe the speech enhancement problem mathematically, after which we compared the proposed method with some well-known speech enhancement methods on the 50-h DNS and LibriFSD50K dataset, showing that the proposed method can pay full attention to both time and frequency domains and achieve satisfying results. Further ablation studies are also carried out to prove the effectiveness of each section of the proposed method, and the results show the effectiveness of the proposed method. By the exhibit of the proposed method, we show the effectiveness of improving the performance of speech enhancement models by utilizing modules with different properties, which pointing out a promising direction for the future development.

Similar content being viewed by others

Data availability statements

All datasets used in our research are public access from internet.

References

Priyanka, S.S., Kumar, T.K.: Multi-channel speech enhancement using early and late fusion convolutional neural networks. Signal Image Video Process. 17(4), 973–979 (2023)

Gay, S.L., Benesty, J.: Acoustic Signal Processing for Telecommunication vol. 551. Springer, Berlin (2012)

Shen, Y.-L., Huang, C.-Y., Wang, S.-S., Tsao, Y., Wang, H.-M., Chi, T.-S.: Reinforcement learning based speech enhancement for robust speech recognition. In: ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 6750–6754 (2019). IEEE

Reddy, C.K.A., Shankar, N., Bhat, G.S., Charan, R., Panahi, I.: An individualized super-gaussian single microphone speech enhancement for hearing aid users with smartphone as an assistive device. IEEE Signal Process. Lett. 24(11), 1601–1605 (2017)

Bai, Z., Zhang, X.-L.: Speaker recognition based on deep learning: an overview. Neural Netw. 140, 65–99 (2021)

Boll, S.: Suppression of acoustic noise in speech using spectral subtraction. IEEE Trans. Acoust. Speech Signal Process. 27(2), 113–120 (1979). https://doi.org/10.1109/TASSP.1979.1163209

Ephraim, Y.: Statistical-model-based speech enhancement systems. Proc. IEEE 80(10), 1526–1555 (1992). https://doi.org/10.1109/5.168664

Zhao, Y., Xu, B., Giri, R., Zhang, T.: Perceptually guided speech enhancement using deep neural networks. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5074–5078 (2018). https://doi.org/10.1109/ICASSP.2018.8462593

Feng, X., Zhang, Y., Glass, J.: Speech feature denoising and dereverberation via deep autoencoders for noisy reverberant speech recognition. In: 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1759–1763 (2014). https://doi.org/10.1109/ICASSP.2014.6853900

Gao, T., Du, J., Dai, L.-R., Lee, C.-H.: Densely connected progressive learning for lstm-based speech enhancement. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5054–5058 (2018). https://doi.org/10.1109/ICASSP.2018.8461861

Park, S.R., Lee, J.: A fully convolutional neural network for speech enhancement. arXiv preprint arXiv:1609.07132 (2016)

Westhausen, N.L., Meyer, B.T.: Dual-signal transformation lstm network for real-time noise suppression. arXiv preprint arXiv:2005.07551 (2020)

Choi, H.-S., Kim, J.-H., Huh, J., Kim, A., Ha, J.-W., Lee, K.: Phase-aware speech enhancement with deep complex u-net. In: International Conference on Learning Representations (2018)

Li, A., Liu, W., Luo, X., Yu, G., Zheng, C., Li, X.: A simultaneous denoising and dereverberation framework with target decoupling. arXiv preprint arXiv:2106.12743 (2021)

Tzinis, E., Casebeer, J., Wang, Z., Smaragdis, P.: Separate but together: unsupervised federated learning for speech enhancement from non-iid data. In: 2021 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA) (2021). IEEE

Rombach, R., Blattmann, A., Lorenz, D., Esser, P., Ommer, B.: High-resolution image synthesis with latent diffusion models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10684–10695 (2022)

Reddy, C.K., Gopal, V., Cutler, R.: Dnsmos: A non-intrusive perceptual objective speech quality metric to evaluate noise suppressors. In: ICASSP 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 6493–6497 (2021). IEEE

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 52275296), National Key Research and Development Program of China (No. 2022YFC2402700), the China Scholarships Council (No. 202306420024), the Graduate Innovation Program of China University of Mining and Technology (No. 2024WLJCRCZL112), the Postgraduate Research & Practice Innovation Program of Jiangsu Province (No. KYCX24_2729), and the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Author information

Authors and Affiliations

Contributions

ZZ: Data Curation, Methodology, Conceptualization, Writing—Original Draft. YD: Software, Writing—Original Draft, Investigation. WC: Visualization, Project administration. YC: Writing—Original Draft, Investigation. WG: Project administration, Supervision. HL: Supervision, Funding acquisition. Both authors co-wrote the manuscript and approved the final version for submission.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, Z., Ding, Y., Chen, W. et al. Multi-stage strength estimation network with cross attention for single channel speech enhancement. SIViP 18, 6937–6948 (2024). https://doi.org/10.1007/s11760-024-03364-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-024-03364-1