Abstract

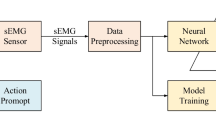

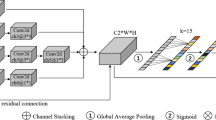

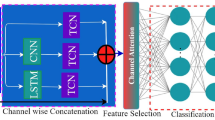

Gesture recognition is a natural and convenient means of human-computer interaction and has become a prominent research area. Practical applications demand recognition that is both accurate and fast, and this requirement has driven numerous studies. Surface electromyography (sEMG) signals, monitored in real time by an sEMG sensor during gesture execution, carry rich features of the gesture action, which has prompted research into sEMG-based gesture recognition algorithms. However, existing algorithms often struggle to balance prediction accuracy against computational efficiency. To address this, this paper proposes a new gesture recognition model that uses multi-channel sEMG signals captured by an sEMG sensor. A lightweight convolutional neural network (CNN) serves as the backbone network, supplemented by a lightweight feature extraction (LFE) block that aggregates multi-scale features. The model is initialized with pre-trained weights via transfer learning, which enhances its feature extraction capability and generalization ability and speeds up convergence. A plug-and-play block, the broad attention learning (BAL) block, significantly improves the overall recognition performance of the proposed model. To better capture contextual information from multi-channel sEMG signals, a pyramid input with varying resolutions is constructed, which strengthens the model's multi-scale feature extraction capability. The proposed model is evaluated and validated on a dynamic gesture dataset collected using a wearable sensor (Myo). Ablation experiments, comparative analyses, and visualization results demonstrate the effectiveness of the proposed method. Comparisons with other advanced models show that the proposed model achieves a favorable balance between prediction accuracy and processing speed.
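The abstract does not say how the pyramid input is built, so the following is only a minimal sketch of one plausible construction: the same multi-channel sEMG window is kept at full resolution and also average-pooled along the time axis by a few factors. The function names `downsample` and `build_pyramid`, the pooling factors `(1, 2, 4)`, and the use of average pooling are all assumptions made for illustration, not the authors' method.

```python
from typing import List, Sequence

# One sEMG window, laid out as [channels][samples].
Window = List[List[float]]


def downsample(window: Window, factor: int) -> Window:
    """Average non-overlapping groups of `factor` samples in each channel.

    Average pooling is an assumption; the paper may use another scheme.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    pooled = []
    for channel in window:
        # Drop trailing samples that do not fill a complete group.
        usable = len(channel) - len(channel) % factor
        pooled.append([
            sum(channel[i:i + factor]) / factor
            for i in range(0, usable, factor)
        ])
    return pooled


def build_pyramid(window: Window, factors: Sequence[int] = (1, 2, 4)) -> List[Window]:
    """Return one copy of the window per temporal resolution (hypothetical factors)."""
    return [downsample(window, f) for f in factors]


# Toy window: 8 channels (the Myo armband has 8 sEMG electrodes), 8 samples each.
window = [[float(c + t) for t in range(8)] for c in range(8)]
pyramid = build_pyramid(window)
shapes = [(len(level), len(level[0])) for level in pyramid]
print(shapes)
```

Each pyramid level keeps all channels and differs only in temporal resolution, so a network with one input branch per level would see both fine and coarse views of the same gesture segment.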

Data Availability Statement

The data that support the findings of this study are available upon reasonable request.

Acknowledgements

All the authors would like to thank the anonymous referees for their valuable suggestions and comments. This research work was supported by the National Key Research & Development Project of China (2020YFB1313701) and the National Natural Science Foundation of China (No. 62003309).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Liu, Y., Li, X. & Yang, L. A wearable sensor-based dynamic gesture recognition model via broad attention learning. SIViP 19, 30 (2025). https://doi.org/10.1007/s11760-024-03567-6

DOI: https://doi.org/10.1007/s11760-024-03567-6