Abstract

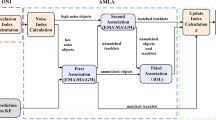

Event cameras are preferred for space object tracking because of their high temporal resolution and their ability to capture dim, fast-moving, and otherwise challenging space objects. However, existing event-based trackers still rely on conventional tracking methods based on object texture, which may not be robust in challenging scenarios. To address this, we propose the Event-Based Space Multi-Object Tracker (EBSTracker), which integrates a bidirectional self-attention network and a multi-stage data association network. The bidirectional self-attention network enhances feature representation for tiny objects, while the multi-stage data association network uses the Noise Scale Adaptive (NSA) Kalman filter and the Generalized Intersection over Union (GIoU) metric to predict trajectory positions and match them to detections, improving tracking robustness. Experiments on two large-scale datasets demonstrate the effectiveness and robustness of EBSTracker, which achieves state-of-the-art (SOTA) performance in challenging scenarios with tiny moving objects and thereby advances event-based space multi-object tracking.
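The abstract names two standard association components without giving their equations. The sketch below shows one common formulation of each and is not EBSTracker's actual code. It assumes axis-aligned boxes given as (x1, y1, x2, y2), a constant-velocity Kalman filter on box centres, and the NSA rule R_k = (1 − c_k)R used in GIAOTracker and StrongSORT, where c_k is the detection confidence. The class name `NSAKalman`, the noise values `q` and `r`, and the state layout are illustrative assumptions.

```python
import numpy as np


def giou(a, b):
    """Generalized IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    # Smallest axis-aligned box enclosing both inputs.
    cx1, cy1 = min(a[0], b[0]), min(a[1], b[1])
    cx2, cy2 = max(a[2], b[2]), max(a[3], b[3])
    enclose = (cx2 - cx1) * (cy2 - cy1)
    # IoU minus the fraction of the enclosing box not covered by the union.
    return inter / union - (enclose - union) / enclose


class NSAKalman:
    """Hypothetical constant-velocity Kalman filter on box centres with NSA noise scaling."""

    def __init__(self, cx, cy, q=1.0, r=4.0):
        self.x = np.array([cx, cy, 0.0, 0.0])          # state: (cx, cy, vx, vy)
        self.P = np.eye(4) * 10.0                       # initial state covariance
        self.F = np.array([[1, 0, 1, 0],
                           [0, 1, 0, 1],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)  # one-step transition
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)  # only the centre is observed
        self.Q = np.eye(4) * q                          # process noise (assumed value)
        self.R = np.eye(2) * r                          # base measurement noise (assumed value)

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z, confidence):
        # NSA: scale measurement noise by (1 - c_k), so confident detections are trusted more.
        R_k = (1.0 - confidence) * self.R
        S = self.H @ self.P @ self.H.T + R_k
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ (np.asarray(z, dtype=float) - self.H @ self.x)
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]
```

GIoU is a plausible fit for tiny objects because it stays informative when boxes do not overlap: plain IoU is zero for every non-overlapping pair, whereas GIoU becomes negative and decreases as the boxes move apart, so a nearby detection still ranks above a distant one. The NSA update, in turn, lets a high-confidence detection pull the predicted position closer than a low-confidence one would.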

Data availability

No datasets were generated or analysed during the current study.

Funding

This work is supported by the National Natural Science Foundation of China (NSFC) (12272010).

Author information

Contributions

X.Z. designed the research framework, analyzed the results, and wrote the manuscript. C.B. assisted with preparation and validation. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Consent for publication

All authors approved the final version of the manuscript for publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhou, X., Bei, C. EBSTracker: event-based space multi-object tracking with bidirectional self-attention and multi-stage data association. SIViP 19, 130 (2025). https://doi.org/10.1007/s11760-024-03571-w

DOI: https://doi.org/10.1007/s11760-024-03571-w