Abstract

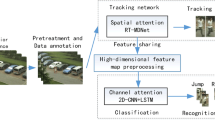

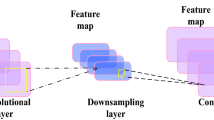

Safety monitoring of workers in manufacturing enterprises involves many targets acting concurrently, which makes behavior recognition models computationally expensive. This paper addresses the problem from the perspective of model structure design and proposes a human behavior recognition model, 3-2DCNN-BIGRU, that combines multi-dimensional convolution with a gated recurrent neural network. A single-target human behavior dataset for the factory was constructed using YOLOv7 object detection and BoT-SORT multi-target tracking. In the mixed spatio-temporal feature extraction layer, the model uses a 3DCNN, which is well suited to the task, to extract spatio-temporal features. A 3-2DCNN extracts spatial features after dimension reduction, which lowers the computational cost and the complexity of the model. Borrowing the idea of dilated convolution from temporal convolutional networks, the receptive fields of the 3DCNN and 3-2DCNN are enlarged, which strengthens the model's spatio-temporal feature extraction. In the temporal feature enhancement layer, a bidirectional gated recurrent neural network is fused in to strengthen the extraction of temporal features, thereby improving the overall performance of the model. With fewer parameters, the model reaches an accuracy of 98.65% on the Fall Dataset and can effectively identify human behaviors such as walking, sitting, and falling in factories, helping to ensure the safety of workers.
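The two structural arguments in the abstract, that 2D convolution after dimension reduction is cheaper than 3D convolution and that dilation enlarges the receptive field, can be illustrated with simple arithmetic. The sketch below is not the authors' implementation: the channel width (64), the kernel size (3), and the dilation rates (1, 2, 4) are assumptions chosen only for illustration, and the helper names `conv_params` and `receptive_field` are hypothetical.

```python
def conv_params(kernel_shape, in_channels, out_channels, bias=True):
    """Count the learnable parameters of one convolution layer."""
    kernel_volume = 1
    for size in kernel_shape:
        kernel_volume *= size
    weights = kernel_volume * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def receptive_field(kernel_size, dilations):
    """Receptive field along one axis of stacked stride-1 convolutions.

    Each layer with dilation d widens the field by (kernel_size - 1) * d.
    """
    field = 1
    for d in dilations:
        field += (kernel_size - 1) * d
    return field


# Assumed layer sizes (illustrative only, not taken from the paper).
params_3d = conv_params((3, 3, 3), in_channels=64, out_channels=64)
params_2d = conv_params((3, 3), in_channels=64, out_channels=64)

# Three stacked layers, without and with dilation (assumed rates 1, 2, 4).
rf_plain = receptive_field(3, [1, 1, 1])
rf_dilated = receptive_field(3, [1, 2, 4])

print(f"3D conv parameters: {params_3d}")
print(f"2D conv parameters: {params_2d}")
print(f"receptive field without dilation: {rf_plain}")
print(f"receptive field with dilation:    {rf_dilated}")
```

Under these assumed sizes, a 3×3 2D layer holds roughly a third of the parameters of a 3×3×3 3D layer, and the dilated stack covers about twice the span of the undilated one with the same number of parameters. The actual savings in the proposed model depend on its real layer configuration, which the abstract does not specify.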

Data availability

No datasets were generated or analysed during the current study.

Funding

This research was supported by the Natural Science Foundation of Shaanxi Province (Nos. 2021SF-422 and 2024GX-YBXM-190).

Author information

Contributions

This paper was written by Wang Zhenyu, who independently completed the research design, data analysis, and interpretation of results, and provided in-depth insights into the research findings. Zheng Jianming gave four points of guidance on the overall structure of the paper. Yang Mingshun gave two points of guidance on the experimental design and data analysis. Shi Weichao was responsible for reviewing the literature and provided an important knowledge framework for the background part of the paper. Su Yulong was responsible for revising and proofreading the paper. Chen Ting provided resources related to the experiment and key support during experiment design and implementation. Peng Chao polished the language of the paper and improved its figures.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, Z., Zheng, J., Yang, M. et al. Research on human behavior recognition in factory environment based on 3-2DCNN-BIGRU fusion network. SIViP 19, 102 (2025). https://doi.org/10.1007/s11760-024-03613-3

DOI: https://doi.org/10.1007/s11760-024-03613-3