Abstract

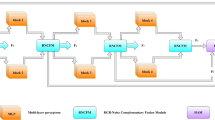

Image tampering localization is an important research topic in computer vision that aims to identify and localize human-modified regions in images. In this paper, we propose a new image tampering localization network named MAPS-Net. It combines efficient multi-scale attention, the shift operation, and progressive subtraction. This combination improves both sensitivity to and generalization across novel tampering behaviors, and it also significantly reduces computation time. MAPS-Net consists of two branches: an upper fake edge-enhancing branch and a lower interfering-factor-weakening branch. The fake edge-enhancing branch uses an efficient multi-scale edge residual module to make the features more expressive. The interfering-factor-weakening branch uses progressive subtraction to suppress interference from fluctuations in image content, so that general tampering behaviors can be captured. Finally, the features of both branches are fused with a position attention mechanism via a shift operation to capture the spatial relationships between the different views. Experiments on several publicly available datasets show that MAPS-Net outperforms existing mainstream models in both image tampering detection and localization, especially for tampering localization in real-world scenes. Code is available at: https://github.com/dklive1999/MAPS-Net.
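The shift operation named in the abstract refers to the parameter-free spatial shift described by Wang et al. (AAAI 2022, "When shift operation meets vision transformer"). In that approach, a small fraction of channels is displaced by one pixel in each of four directions while the remaining channels are left unchanged. The following is a minimal pure-Python sketch of that partial channel shift. It is not the MAPS-Net implementation. Several details are assumptions made for illustration: the function names `shift_plane` and `partial_shift`, the nested-list `[channel][row][column]` layout, zero-filling of vacated pixels, and a default ratio `gamma = 1/12` of channels per direction. This section does not describe how MAPS-Net combines the shift with position attention.

```python
from typing import List

# Hypothetical layout: a feature map as [channel][row][column] nested lists.
FeatureMap = List[List[List[float]]]


def shift_plane(plane: List[List[float]], dy: int, dx: int) -> List[List[float]]:
    """Move an H x W plane by (dy, dx) pixels; vacated cells are zero-filled."""
    h, w = len(plane), len(plane[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx  # source pixel that lands on (y, x)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = plane[sy][sx]
    return out


def partial_shift(feat: FeatureMap, gamma: float = 1 / 12) -> FeatureMap:
    """Partial channel shift in the style of Wang et al. (assumed ratio gamma).

    With g = int(C * gamma), the first g channels are shifted left, the next g
    right, then up, then down, each by one pixel; all remaining channels pass
    through unchanged. No learnable parameters are involved.
    """
    g = int(len(feat) * gamma)  # channels per direction
    directions = [(0, -1), (0, 1), (-1, 0), (1, 0)]  # left, right, up, down
    out: FeatureMap = []
    for i, plane in enumerate(feat):
        group = i // g if g > 0 else len(directions)
        if group < len(directions):
            dy, dx = directions[group]
            out.append(shift_plane(plane, dy, dx))
        else:
            out.append([row[:] for row in plane])  # untouched copy
    return out


# Usage: 8 identical 3 x 3 channels, one channel per direction (gamma = 1/8).
plane = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
shifted = partial_shift([plane] * 8, gamma=0.125)
# shifted[0] == [[2, 3, 0], [5, 6, 0], [8, 9, 0]]  (content moved left)
```

The shift only moves values and adds no weights, which fits the abstract's emphasis on reduced computation. A practical version would operate on framework tensors rather than nested lists.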



Acknowledgements

The numerical calculations in this paper were performed on the supercomputing system of the WQ & UCAS Research Academy Intelligent Computing Center (WRA-ICC). This research was supported by the National Natural Science Foundation of China under Grant 62376017 and by the Fundamental Research Funds for the Central Universities (buctrc202221).

Author information


Contributions

Y.S. was involved in conceptualization, methodology and supervision. K.D. contributed to methodology, writing and editing. L.W. contributed to writing, supervision and review.


Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Shao, Y., Dai, K. & Wang, L. Image tampering localization network based on multi-class attention and progressive subtraction. SIViP 19, 2 (2025). https://doi.org/10.1007/s11760-024-03622-2


DOI: https://doi.org/10.1007/s11760-024-03622-2