Abstract

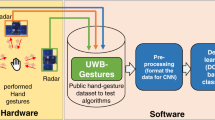

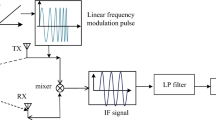

Radar-based artificial intelligence (AI) applications, ranging from fall detection to gesture recognition, have attracted significant attention in recent years. Growing interest in the field has driven a shift toward deep convolutional networks, and transformers have since emerged to address limitations of convolutional neural network (CNN) methods, becoming increasingly popular in the AI community. In this paper, we present a novel hybrid approach for radar-based traffic hand gesture classification using transformers. Traffic hand gesture recognition (HGR) is an important AI application, and our proposed three-phase approach targets both the efficiency and the effectiveness of traffic HGR. In the initial phase, feature vectors are extracted from the input radar images using a pre-trained DenseNet-121 model. These features are then concatenated to combine information from the different radar sensors, followed by a patch extraction operation. The concatenated features from all inputs are then processed by a Swin transformer block. The classification stage applies global average pooling, dense, and softmax layers in sequence. We evaluate the method on the Ulm University radar dataset using several performance metrics, including accuracy, precision, recall, and F1-score, and achieve an average accuracy of 90.54%. We compare this score with existing approaches to demonstrate the competitiveness of the proposed method.
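The four metrics named in the abstract can be computed from predicted and true class labels. The following is a minimal sketch, not the authors' evaluation code: it assumes macro averaging over classes (the abstract does not state the averaging scheme), and the function name `classification_metrics` and the gesture labels are hypothetical, chosen only for illustration.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall, and F1-score.

    Macro averaging is an assumption; the source does not specify it.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")

    labels = sorted(set(y_true) | set(y_pred))
    tp = Counter()  # true positives per class
    fp = Counter()  # false positives per class
    fn = Counter()  # false negatives per class
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[t] += 1  # true class t was missed

    def safe_div(a, b):
        # Define the ratio as 0.0 when the denominator is zero.
        return a / b if b else 0.0

    precisions, recalls, f1s = [], [], []
    for c in labels:
        prec = safe_div(tp[c], tp[c] + fp[c])
        rec = safe_div(tp[c], tp[c] + fn[c])
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(safe_div(2 * prec * rec, prec + rec))

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical gesture labels, for illustration only (not taken from the dataset).
y_true = ["stop", "stop", "go", "go", "slow", "slow"]
y_pred = ["stop", "go", "go", "go", "slow", "stop"]
metrics = classification_metrics(y_true, y_pred)
```

With macro averaging, every gesture class contributes equally to precision, recall, and F1-score regardless of how many samples it has. A weighted or micro average would give different values when classes are imbalanced.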

Data availability

No datasets were generated or analysed during the current study.

Funding

None.

Author information

Contributions

Hüseyin FIRAT: Conceptualization, Discussion of Results, Writing (Original Draft Preparation), Validation, Formal Analysis. Hüseyin ÜZEN: Conceptualization, Methodology, Software, Writing (Original Draft Preparation), Visualization. Orhan ATİLA: Conceptualization, Discussion of Results, Writing (Original Draft Preparation), Validation, Formal Analysis. Abdulkadir ŞENGÜR: Reviewing and Editing, Discussion of Results, Validation, Supervision.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Fırat, H., Üzen, H., Atila, O. et al. Automated efficient traffic gesture recognition using swin transformer-based multi-input deep network with radar images. SIViP 19, 35 (2025). https://doi.org/10.1007/s11760-024-03664-6

DOI: https://doi.org/10.1007/s11760-024-03664-6