Abstract

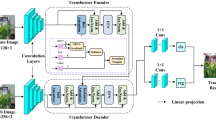

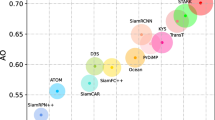

This study proposes FLSTrack, an end-to-end multi-object tracking algorithm that integrates Focused Linear Attention with dual decoders. The algorithm addresses three limitations of current multi-object tracking methods: poor performance in complex scenarios, inadequate data association, and high computational complexity. First, the Swin Transformer is paired with a Focused Linear Attention module to strengthen the network’s extraction of both local and global information while reducing computational cost. Second, a dual-branch decoder based on window attention is developed, with one branch dedicated to tracking and the other to detecting targets in image frames. To further increase the algorithm’s speed, the complex re-identification (ReID) feature network is replaced with the BYTE data association method. To compensate for the appearance features lost by omitting the ReID network, the SIoU loss function is introduced, which significantly improves target localization accuracy. Experiments on the MOT17, MOT20, DanceTrack, and KITTI datasets demonstrate superior tracking performance. Moreover, with an inference speed nearing 30 FPS, the algorithm achieves a favorable balance between tracking accuracy and real-time performance.
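The BYTE data association step mentioned above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes plain IoU as the only similarity cue, uses greedy matching in place of the Hungarian assignment, and skips the Kalman-filter prediction of track boxes. The function names and the thresholds `high_thresh`, `low_thresh`, and `min_iou` are hypothetical values chosen for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], det_ids: List[int],
                 boxes: List[Box], min_iou: float) -> Dict[int, int]:
    """Pair track ids with detection indices in order of descending IoU.

    Greedy matching is a simplification; it stands in for the Hungarian
    assignment that trackers normally use at this step.
    """
    pairs = sorted(
        ((iou(tbox, boxes[d]), tid, d)
         for tid, tbox in tracks.items() for d in det_ids),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for score, tid, d in pairs:
        if score < min_iou:
            break  # all remaining pairs overlap too little
        if tid in matches or d in used:
            continue
        matches[tid] = d
        used.add(d)
    return matches


def byte_style_associate(tracks: Dict[int, Box], boxes: List[Box],
                         scores: List[float], high_thresh: float = 0.6,
                         low_thresh: float = 0.1, min_iou: float = 0.3):
    """Two-stage association: high-score detections first, then low-score ones."""
    high = [i for i, s in enumerate(scores) if s >= high_thresh]
    low = [i for i, s in enumerate(scores) if low_thresh <= s < high_thresh]

    # Stage 1: match every track against confident detections.
    first = greedy_match(tracks, high, boxes, min_iou)

    # Stage 2: give still-unmatched tracks a chance with low-score detections,
    # which often correspond to occluded or blurred targets.
    remaining = {tid: b for tid, b in tracks.items() if tid not in first}
    second = greedy_match(remaining, low, boxes, min_iou)

    matches = {**first, **second}
    unmatched_tracks = [tid for tid in tracks if tid not in matches]
    # Only unmatched high-score detections may start new tracks.
    new_candidates = [i for i in high if i not in first.values()]
    return matches, unmatched_tracks, new_candidates


if __name__ == "__main__":
    tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
    boxes = [(1, 1, 11, 11), (21, 21, 31, 31), (50, 50, 60, 60)]
    scores = [0.9, 0.3, 0.8]
    print(byte_style_associate(tracks, boxes, scores))
```

In this toy input, track 2 overlaps only a low-score detection, so it is recovered in the second stage rather than being dropped. That second pass is what lets association by boxes alone stand in for a separate ReID network.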

Availability of data:

Data will be made available on request.

Acknowledgements

This work was supported in part by the Science and Technology Foundation of Guizhou Province (Grant No. QKHJC-ZK[2024]063) and in part by the National Natural Science Foundation of China (Grant No. 62266011).

Author information

Contributions

Conceptualization: [Dafu Zu], [Guangqian Kong]; Methodology: [Dafu Zu], [Xun Duan]; Formal analysis and investigation: [Dafu Zu], [Huiyun Long]; Writing-original draft preparation: [Dafu Zu]; Writing-review and editing: [Guangqian Kong], [Xun Duan]; Funding acquisition: [Guangqian Kong], [Xun Duan], [Huiyun Long]; Supervision: [Guangqian Kong], [Xun Duan], [Huiyun Long].

Ethics declarations

Conflict of interest:

The authors have no conflicts of interest to declare that are relevant to the content of this article.

About this article

Cite this article

Zu, D., Duan, X., Kong, G. et al. FLSTrack: focused linear attention swin-transformer network with dual-branch decoder for end-to-end multi-object tracking. SIViP 19, 25 (2025). https://doi.org/10.1007/s11760-024-03676-2

DOI: https://doi.org/10.1007/s11760-024-03676-2