Abstract

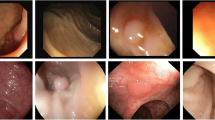

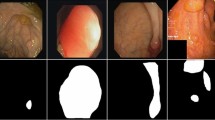

Multi-classification of colorectal polyps using endoscopic images is crucial for enhancing clinical diagnostic accuracy and reducing colorectal cancer mortality. Accurate classification is challenging because of blurred lesion boundaries, varying intra-class scales, and high inter-class similarity. To address these challenges, we propose the Fused Residual Attention Network (FRAN) for colorectal polyp classification. FRAN employs a dual-branch structure to emphasize both semantic and detailed information. The Residual Attention Learning mechanism enhances lesion region detection, while Global Dependent Self-Attention captures global context. Additionally, the Edge Feature Fusion module, combined with Semantic Alignment, mitigates semantic loss during upsampling and captures edge-detailed features. We evaluated FRAN on a private four-class colorectal polyp dataset, the three-class public Kvasir dataset, the three-class public HyperKvasir dataset, and the four-class public PICCOLO dataset. The overall classification accuracies achieved are 85.73% and 97.16%, respectively, which are higher than those of the compared state-of-the-art colorectal polyp classification algorithms. Our approach effectively highlights critical regions and preserves detailed information, thereby offering a robust solution to the challenges in colorectal polyp classification.
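The abstract does not state how Residual Attention Learning is computed. As a rough illustration only, the sketch below uses a residual-attention formulation that is common in the literature: a soft mask M with values in (0, 1) rescales features F as (1 + M) · F, so attended regions are amplified while unattended regions pass through almost unchanged rather than being zeroed out. The function names and toy values are hypothetical and are not taken from FRAN.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a real-valued logit to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def residual_attention(features, mask_logits):
    """Element-wise (1 + M) * F with M = sigmoid(mask_logits).

    Assumed formulation for illustration; not confirmed as FRAN's design.
    """
    if len(features) != len(mask_logits):
        raise ValueError("features and mask_logits must have the same length")
    return [(1.0 + sigmoid(m)) * f for f, m in zip(features, mask_logits)]


# Toy feature vector and mask logits (hypothetical values).
features = [0.5, -1.0, 2.0]
mask_logits = [-20.0, 0.0, 20.0]  # mask close to 0, exactly 0.5, close to 1
out = residual_attention(features, mask_logits)
print(out)
```

With a mask near 0 the feature is passed through nearly unchanged, and with a mask near 1 it is roughly doubled. This identity path is the reason such a formulation is called residual.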

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Funding

This work was supported by the National Science Foundation of P.R. China (Grant 62233016) and the Key R&D Program Projects in Zhejiang Province (Grant 2020C03074).

Author information

Contributions

Sheng Li and Xinran Guo wrote the main text. Beibei Zhu prepared some of the simulation figures. Shufang Ye and Yongwei Zhuang provided the dataset. Jietong Ye wrote the discussion of the simulation results. Xiongxiong He and Sheng Li supervised the work and provided the funding. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Li, S., Guo, X., Zhu, B. et al. Multi-classification of colorectal polyps with fused residual attention. SIViP 19, 144 (2025). https://doi.org/10.1007/s11760-024-03701-4

DOI: https://doi.org/10.1007/s11760-024-03701-4