Abstract

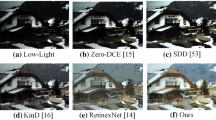

Current low-light image enhancement methods often suffer from insufficient detail enhancement, color distortion, over-brightening, and limited generalization. To address these issues, this paper proposes a multi-layer feature enhancement method based on multi-branch attention mechanisms. Single-level or single-scale feature extraction often fails to capture sufficient local detail and global context, so the paper designs a multi-scale feature extension module that gathers rich multi-scale features from different network levels. Furthermore, to better capture key features at each level, a multi-branch attention module is designed according to the characteristics of features at different scales. Lower network layers contain rich detail, so local attention is used to refine their features; higher network layers contain broad contextual features, which are processed with global attention. This strategy strengthens the feature representation of the image. Finally, the features from all branches are fused to form a more accurate image feature representation. Extensive experiments on multiple public datasets demonstrate that the proposed method performs well and enhances low-light images effectively.
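The abstract gives no architectural details, so the following is only a toy sketch of the general idea it describes: local attention on a fine (detailed) scale, global attention on a coarse (contextual) scale, then fusion. It works on a 1-D sequence of feature vectors rather than on image feature maps, and every function name, the pair-averaging downsampler, and the simple averaging fusion are assumptions made for illustration, not the paper's modules.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(features, window=None):
    """Scaled dot-product self-attention over a list of feature vectors.

    window=None -> global attention: each position attends to all positions.
    window=r    -> local attention: each position attends to neighbours
                   at most r steps away.
    """
    n = len(features)
    dim = len(features[0])
    scale = math.sqrt(dim)
    out = []
    for i, query in enumerate(features):
        if window is None:
            idx = list(range(n))
        else:
            idx = list(range(max(0, i - window), min(n, i + window + 1)))
        weights = softmax([dot(query, features[j]) / scale for j in idx])
        out.append([
            sum(w * features[j][d] for w, j in zip(weights, idx))
            for d in range(dim)
        ])
    return out


def downsample(features):
    """Hypothetical coarse scale: average adjacent pairs of positions."""
    return [
        [(a + b) / 2 for a, b in zip(features[i], features[i + 1])]
        for i in range(0, len(features) - 1, 2)
    ]


def upsample(features, length):
    """Nearest-neighbour upsampling back to the fine-scale length."""
    last = len(features) - 1
    return [features[min(i // 2, last)] for i in range(length)]


def enhance(features, window=1):
    """Fuse a local-attention branch (fine scale) with a global-attention
    branch (coarse scale) by plain averaging. Needs at least two positions."""
    fine = attend(features, window=window)      # detail branch
    coarse = attend(downsample(features))       # context branch
    coarse_up = upsample(coarse, len(features))
    return [
        [(f + c) / 2 for f, c in zip(fine_vec, coarse_vec)]
        for fine_vec, coarse_vec in zip(fine, coarse_up)
    ]


if __name__ == "__main__":
    toy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
    for vec in enhance(toy):
        print([round(v, 3) for v in vec])
```

Restricting the fine-scale branch to a small window keeps each output tied to its immediate neighbourhood, while the coarse branch lets every position draw on the whole sequence. A real network would use learned projections, 2-D feature maps, and a learned fusion layer in place of the fixed averaging used here.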

Data availability

Data will be made available on request.

Acknowledgements

The authors declare that no funds were received for this research.

Author information

Contributions

Wu Zeng: Software, Writing – original draft, Conceptualization, Methodology, Investigation, Writing – review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

About this article

Cite this article

Zeng, W. A low-light image enhancement network based on multi-layer feature aggregation and multi-branch attention mechanisms. SIViP 19, 114 (2025). https://doi.org/10.1007/s11760-024-03706-z

DOI: https://doi.org/10.1007/s11760-024-03706-z