Abstract

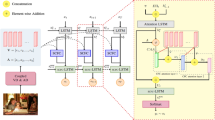

Image captioning is a task at the intersection of computer vision and natural language processing in which a model generates a textual caption for a given input image. Traditional Transformer architectures built from an image encoder and a language decoder have shown promising results in image captioning. However, two challenges remain: large parameter counts and additional data preprocessing. In this paper, we propose a lightweight based-CLIP early fusion transformer (BCEFT) to tackle these challenges. BCEFT uses CLIP as the encoder for both images and text, then adds a multi-modal fusion model to generate image captions. Specifically, the multi-modal fusion model comprises a multi-modal fusion attention module, which reduces computational complexity by more than half. Finally, after cross-entropy training, we train the model with reinforcement learning using the beam search algorithm. Our approach requires only relatively quick training to produce a high-quality captioning model. Without requiring additional annotations or pre-training, it can effectively generate meaningful captions for large-scale and diverse datasets. Experimental results on the MSCOCO dataset demonstrate the superiority of our model. Our model also achieves significant efficiency gains, including a nearly 50% decrease in model parameters and an eight-fold improvement in runtime speed.
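The abstract does not spell out the internals of the fusion attention module, so the following is only a minimal sketch of the general early-fusion idea, under stated assumptions: image and text tokens are concatenated into one sequence and a single masked self-attention pass replaces separate encoder self-attention and decoder cross-attention. The function names `early_fusion_mask` and `masked_self_attention`, the toy token values, and the choice of mask (every token sees all image tokens, text tokens are causal) are illustrative assumptions, not the authors' implementation. Learned projections and multiple heads are omitted for brevity.

```python
import math

def early_fusion_mask(num_image, num_text):
    """Return an n x n boolean mask; True means query row q may attend to key column k.

    Assumed layout: image tokens first, then text tokens.
    """
    n = num_image + num_text
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        for k in range(n):
            if k < num_image:
                mask[q][k] = True   # image keys are visible to every query
            elif q >= num_image and k <= q:
                mask[q][k] = True   # a text query sees itself and earlier text only
    return mask

def masked_self_attention(tokens, mask):
    """Single-head scaled dot-product self-attention without learned projections."""
    d = len(tokens[0])
    outputs, all_weights = [], []
    for q, query in enumerate(tokens):
        # Masked positions get a score of -inf, so their softmax weight is exactly 0.
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(d) if mask[q][k] else -math.inf
            for k, key in enumerate(tokens)
        ]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(d)])
        all_weights.append(weights)
    return outputs, all_weights

# Toy stand-ins for CLIP image and text features (hypothetical values).
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[1.0, 1.0], [0.5, -0.5]]

fused = image_tokens + text_tokens          # early fusion: one joint sequence
mask = early_fusion_mask(len(image_tokens), len(text_tokens))
outputs, weights = masked_self_attention(fused, mask)
```

In this sketch the caption prefix attends to the image and to earlier words in one pass, while image tokens never attend to text, which keeps caption generation autoregressive.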

Data availability

No datasets were generated or analysed during the current study.

Author information

Contributions

Jinyu Guo: Software and Writing. Yuejia Li: Data analysis and Software. Wenrui Li: Methodology and Visualization. Guanghui Cheng: Methodology and Supervision.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Guo, J., Li, Y., Cheng, G. et al. Based-CLIP early fusion transformer for image caption. SIViP 19, 112 (2025). https://doi.org/10.1007/s11760-024-03721-0

DOI: https://doi.org/10.1007/s11760-024-03721-0