Abstract

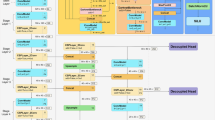

Existing target detection algorithms perform poorly in complex mine environments characterized by low illumination, small targets, background interference, occlusion, and motion blur. In addition, their complex network structures and large parameter counts cannot meet the real-time detection requirements of edge devices. To address these problems, a multi-target detection method based on a lightweight network is proposed for the driving area of mine driverless rail locomotives. A dataset covering seven target classes (electric locomotives, miners, etc.) in five scenarios (normal illumination, low illumination, etc.) was constructed. YOLOv5s was then improved in four ways: (1) a small-target detection layer was added to improve the detection of small targets; (2) the BottleNeck module in C3 was replaced with the GhostBottleNeck module to build C3Ghost, which reduces computation and parameters and offsets the cost of the added layer; (3) the SimAM attention mechanism was introduced to focus on targets and suppress interference; and (4) the CIoU loss function was replaced with the SIoU loss function to speed up convergence. Experimental results show that the proposed lightweight network reduces the parameter count by 12.3% and improves mAP by 1.7%, making it better suited to multi-target detection in the driving area of mine rail locomotives.
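
The abstract gives no implementation details for the SimAM attention step, so the following is only a minimal sketch of the commonly published SimAM formulation, written in plain Python for a single feature-map channel. The function name `simam`, the regularization constant `e_lambda = 1e-4`, and the toy 3×3 input are assumptions made for illustration and are not taken from the paper.

```python
import math


def simam(channel, e_lambda=1e-4):
    """Weight one 2-D feature-map channel (a list of rows) in the SimAM style.

    Each activation is scaled by sigmoid(inverse energy), where the inverse
    energy grows with the squared deviation from the channel mean.
    e_lambda is an assumed regularization constant, not a value from the paper.
    """
    values = [v for row in channel for v in row]
    n = len(values) - 1  # number of "other" neurons in the channel
    mean = sum(values) / len(values)

    # Squared deviation of every activation from the channel mean.
    sq_dev = [[(v - mean) ** 2 for v in row] for row in channel]
    variance = sum(d for row in sq_dev for d in row) / n

    out = []
    for row, dev_row in zip(channel, sq_dev):
        out_row = []
        for v, d in zip(row, dev_row):
            inv_energy = d / (4.0 * (variance + e_lambda)) + 0.5
            weight = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
            out_row.append(v * weight)
        out.append(out_row)
    return out


# Toy channel: one strong activation surrounded by a flat background.
feature = [
    [0.1, 0.1, 0.1],
    [0.1, 2.0, 0.1],
    [0.1, 0.1, 0.1],
]
weighted = simam(feature)
```

In this toy input the centre activation deviates most from the channel mean, so it receives a larger weight than its neighbours, which matches the stated aim of focusing on targets and suppressing interference. No learnable parameters are involved, so a module of this kind would not add to the parameter count; in a real network the same computation would presumably be applied per channel to tensors inside the detector.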

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Funding

This study was funded by the Scientific Research Foundation for High-level Talents of Anhui University of Science and Technology under Grant No. 2023yjrc95, the Open Fund of Anhui Intelligent Mine Technology and Equipment Engineering Research Center under Grant No. AIMTEERC202405, and the National Natural Science Foundation of China Project under Grant No. 52274153.

Author information

Contributions

Wenshan Wang conceived the idea. Wenshan Wang and Kun Hu performed the data analyses and wrote the manuscript. Hao Jiang edited the manuscript. All authors discussed the results and revised the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Consent for publication

All authors agree to publish this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, W., Hu, K. & Jiang, H. Multi-target detection method for driving area of mine driverless rail locomotives based on lightweight network. SIViP 19, 276 (2025). https://doi.org/10.1007/s11760-025-03883-5

DOI: https://doi.org/10.1007/s11760-025-03883-5