Abstract

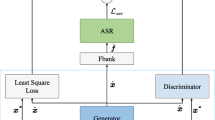

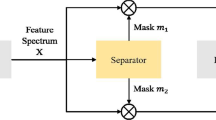

Speech dialogue systems are now widely used in many fields, letting users interact with a system through natural language. In practice, however, real dialogue scenes contain third-party background voices and background noise. This interference seriously degrades the intelligibility of the speech signal and lowers speech recognition performance. To tackle this problem, we use a speech separation method that separates the target speech from complex multi-speaker speech. We propose a multi-task attention mechanism and select TFCN (temporal-frequential convolutional network) as the audio feature extraction module. Following the multi-task approach, we jointly train the model with an SI-SDR loss and a cross-entropy speaker classification loss, and then use the attention mechanism to further exclude background vocals from the mixed speech. We evaluate our results not only with the distortion metrics SI-SDR and SDR but also with a speech recognition system. To train the model and demonstrate its effectiveness, we build a background vocal removal dataset based on a common dataset. Experimental results show that our model significantly improves speech separation performance.
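
The joint objective described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes the common definition of SI-SDR (zero-mean signals, projection of the estimate onto the target), a plain softmax cross-entropy over speaker classes, and a hypothetical weighting factor `ce_weight` that the abstract does not specify. The function names are illustrative only.

```python
import numpy as np

def si_sdr(estimate, target, eps=1e-8):
    """Scale-invariant signal-to-distortion ratio (dB) for 1-D signals."""
    estimate = estimate - estimate.mean()
    target = target - target.mean()
    # Project the estimate onto the target to get the optimally scaled target.
    scale = np.dot(estimate, target) / (np.dot(target, target) + eps)
    projection = scale * target
    noise = estimate - projection
    ratio = (np.dot(projection, projection) + eps) / (np.dot(noise, noise) + eps)
    return 10.0 * np.log10(ratio)

def cross_entropy(logits, speaker_id):
    """Cross-entropy of one speaker label given unnormalised class scores."""
    shifted = logits - logits.max()  # stabilise the softmax
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[speaker_id]

def joint_loss(estimate, target, logits, speaker_id, ce_weight=0.5):
    """Negative SI-SDR plus weighted speaker classification loss.

    ce_weight is an assumed value for illustration; the abstract gives none.
    """
    return -si_sdr(estimate, target) + ce_weight * cross_entropy(logits, speaker_id)
```

Minimising the negative SI-SDR pushes the extracted waveform toward the target speech, while the cross-entropy term encourages the network to keep speaker identity information. How the two terms are actually balanced in the paper is not stated in the abstract.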



Funding

This work was supported by the National Natural Science Foundation of China (grants 61876208 and 61873094).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


About this article

Cite this article

Hao, Y., Wu, J., Huang, X. et al. Speaker extraction network with attention mechanism for speech dialogue system. SOCA 16, 111–119 (2022). https://doi.org/10.1007/s11761-022-00340-w


DOI: https://doi.org/10.1007/s11761-022-00340-w