Abstract

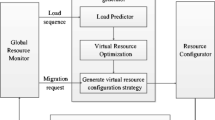

Predicting virtual machine (VM) workloads in order to allocate resources dynamically has long been an active research topic. Most current resource prediction methods build a separate prediction model for each type of load resource, which makes it difficult to transfer knowledge between these models and to predict multiple tasks together. This paper proposes a dynamic resource allocation method based on fuzzy transfer learning, which predicts multiple resource loads of VMs from the feature attributes of command-line processes and uses these predictions to allocate VM resources dynamically. First, the Principal Component Analysis (PCA) algorithm is used to reduce the dimensionality of the command-line process attributes. Then, fuzzy transfer learning, which is built on a fuzzy neural network capable of handling the uncertainty inherent in transfer learning, exploits the strong regularities of command-line processes to predict multiple resource loads of VMs and to configure VM resources dynamically. In the experiments, CPU and memory serve as examples: the parameters of the CPU prediction model are transferred to the memory prediction model, with good results. The results verify the effectiveness of the proposed method, which achieves dynamic allocation of VM resources and improves VM performance.
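The three stages described in the abstract (PCA on command-line process attributes, a fuzzy neural network predictor, and transfer of the CPU model's parameters to the memory model) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The synthetic data, the 95% variance threshold, the first-order Takagi-Sugeno rule base with Gaussian memberships (rule centres sampled from the data, one shared width `sigma`), and the transfer step are all assumptions chosen for illustration. In the transfer step, the CPU model's rule antecedents are kept and the consequent parameters are refitted on a few memory samples while being shrunk toward the CPU values. The function names `pca_fit`, `pca_transform`, `tsk_design_matrix`, `fit_source` and `fit_transfer` are hypothetical.

```python
import numpy as np


def pca_fit(X, var_threshold=0.95):
    """Standardize X and keep the fewest principal components whose
    cumulative explained-variance ratio reaches var_threshold."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0                                   # constant columns: avoid division by zero
    Z = (X - mean) / std
    _, s, Vt = np.linalg.svd(Z, full_matrices=False)      # rows of Vt are the principal axes
    ratio = s**2 / np.sum(s**2)                           # explained-variance ratio per component
    k = min(int(np.searchsorted(np.cumsum(ratio), var_threshold)) + 1, len(s))
    return {"mean": mean, "std": std, "components": Vt[:k], "ratio": ratio[:k]}


def pca_transform(model, X):
    return ((X - model["mean"]) / model["std"]) @ model["components"].T


def tsk_design_matrix(X, centers, sigma):
    """Design matrix of a first-order Takagi-Sugeno fuzzy model (assumed form):
    rule r fires with a Gaussian strength and outputs a_r . x + b_r."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)   # (n, R) squared distances
    logits = -d2 / (2.0 * sigma**2)
    logits -= logits.max(axis=1, keepdims=True)          # stabilize: strongest rule gets weight 1
    w = np.exp(logits)
    w_bar = w / w.sum(axis=1, keepdims=True)              # normalized firing strengths
    X1 = np.hstack([X, np.ones((X.shape[0], 1))])         # append bias input
    # Block r of each row holds w_bar[:, r] * [x, 1]; shape (n, R * (k + 1)).
    return (w_bar[:, :, None] * X1[:, None, :]).reshape(X.shape[0], -1)


def fit_source(Phi, y):
    """Least-squares consequent parameters for the source task."""
    theta, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return theta


def fit_transfer(Phi, y, theta_src, lam):
    """Hypothetical transfer step: argmin ||Phi t - y||^2 + lam * ||t - theta_src||^2."""
    A = Phi.T @ Phi + lam * np.eye(Phi.shape[1])
    return np.linalg.solve(A, Phi.T @ y + lam * theta_src)


def mse(Phi, y, theta):
    return float(np.mean((Phi @ theta - y) ** 2))


# Synthetic stand-in for command-line process attributes (assumption, not real data).
rng = np.random.default_rng(0)
n, d = 400, 12
latent = rng.normal(size=(n, 3))
X = latent @ rng.normal(size=(3, d)) + 0.1 * rng.normal(size=(n, d))
cpu = np.tanh(latent[:, 0]) + 0.5 * latent[:, 1] + 0.05 * rng.normal(size=n)
mem = np.tanh(latent[:, 0]) + 0.4 * latent[:, 1] + 0.2 + 0.05 * rng.normal(size=n)

pca = pca_fit(X, 0.95)
Xp = pca_transform(pca, X)

centers = Xp[rng.choice(n, size=5, replace=False)]       # 5 rules centred on random samples
sigma = 1.5
Phi = tsk_design_matrix(Xp, centers, sigma)

theta_cpu = fit_source(Phi, cpu)                         # source task: CPU load, all samples
few = slice(0, 30)                                       # target task: only 30 memory samples
theta_mem = fit_transfer(Phi[few], mem[few], theta_cpu, lam=1.0)

print("PCA components kept:", Xp.shape[1])
print("CPU train MSE:", mse(Phi, cpu, theta_cpu))
print("memory MSE, CPU parameters reused as-is:", mse(Phi[few], mem[few], theta_cpu))
print("memory MSE, after transfer refit:", mse(Phi[few], mem[few], theta_mem))
```

Keeping the antecedents fixed and penalizing the distance to `theta_cpu` lets a small memory data set adjust the CPU predictor without discarding it. A larger `lam` trusts the source task more. A full ANFIS-style network would additionally tune the membership functions by gradient descent.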



Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (Grant no. 61702098), the Science and Technology Planning Project of Guangdong Province, China (2014A010103039, 2015A010103022, 2014B090901002), the Dongguan Science and Technology Plan Project, Guangdong Province, China (2014509102211), the Natural Science Fund for Colleges and Universities of Jiangsu Province, China (18KJB520049), the Industry-University-Research Cooperation Project in Jiangsu Province, China (BY2018124), and the Next Generation Internet Technology Innovation Project of Cernet, China (NGII20170515). The authors would like to thank the anonymous reviewers and the editors for their comments and suggestions, which improved the quality of the manuscript. In addition, the project received funding support from the National Scientific Data Sharing Platform for Population and Health.


About this article

Cite this article

Wu, X., Wang, H., Tan, W. et al. Dynamic allocation strategy of VM resources with fuzzy transfer learning method. Peer-to-Peer Netw. Appl. 13, 2201–2213 (2020). https://doi.org/10.1007/s12083-020-00885-7
