Abstract

The bearing capacity of a pile is a critical factor in geotechnical design, necessitating extensive testing procedures that often increase both the time and cost of earthwork. Consequently, there is a growing demand for efficient and reliable methods to determine pile bearing capacity. This study aims to propose optimized machine learning based models through the application of Random Search (RS) and Grid Search (GS) optimization techniques for the prediction of pile-bearing capacity in layered soils. For this purpose, an extensive dataset is sourced from literature, and various machine learning algorithms including Random Forest (RF), Support Vector Machine (SVM), and XGBoost are investigated. Through a systematic modeling approach, multiple models are generated, and the performance of machine learning algorithms is refined using RS and GS cross validation (CV) using a customized code in Python. Optimized models are further assessed based on comprehensive evaluation criteria using key statistical performance indices. The results demonstrate that both RS and GS-tuned machine learning models achieve high accuracy, with R2 values exceeding 0.9 and a low error index score across testing and training datasets. Notably, GS exhibits slightly superior statistical performance compared to RS. Furthermore, the tuned models with RS and GS showcase high performance on the validation dataset, with GS consistently outperforming RS. XGBoost emerges as the top performer among the machine learning models, followed by RF and SVM, highlighting the efficacy of tree-based algorithms in capturing the geotechnical variability inherent within pile bearing data. The proposed models offer valuable insights for predicting the preliminary evaluation of pile bearing capacity, facilitating swift and cost-effective geotechnical characterization within an acceptable error margin. This study introduces advancements in predictive modeling for geotechnical engineering, highlighting the transformative potential of optimization methodologies to enhance the machine learning models used for decision-making processes in civil engineering applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Pile foundations are frequently utilized to support structures by penetrating deep into the ground and transferring loads onto denser and more stable soils or rocks. It is crucial to accurately ascertain their load-bearing capacity (Momeni et al. 2015). The maximum load that can be applied to a pile without causing failure in the soil beneath the pile or pile itself is known as the bearing capacity of the pile. Owing to this, pile bearing capacity is the most important parameter while designing piles (Drusa et al. 2016). For safe and economical construction of the project, an accurate estimation of bearing capacity is needed (Pham et al. 2020). Throughout years of research, various approaches have been adopted to determine the pile-bearing capacity such as empirical formulations and field testing. Empirical formulations offer insights based on historical data and observations, while static and dynamic field tests provide direct and real-time evaluations of pile performance under specific conditions. Empirical formulas often yield inaccurate estimates due to the complex interplay of external factors like soil mechanics and geotechnical conditions, as well as internal considerations such as pile dimensions (Liu et al. 2019). For the meticulous analysis, the presence of uncertainties in the impact of the hammer and variations in soil strength between the time of pile driving and the time of loading lead to challenges in accurately determining bearing capacity (Nguyen et al. 2024). The pile load test, which measures full-scale pile settlement under static load (Nguyen et al. 2023), is considered to offer highly accurate results (Shooshpasha et al. 2013). The reason for the accuracy is due to the method's similarity to the actual installation of driven piles. Despite its precision, the method's expense and time intensity make it less suited for small-scale projects, relegating its application primarily to larger ventures (Hoang et al. 2022). The measurement of soil properties using in-situ test methods has advanced; along with this progress, there has been a growing trend of using this data to predict the bearing capacity of piles (Nguyen et al. 2023). Out of in-situ tests, the Standard Penetration Test (SPT) is not only the most used test for determining the bearing capacity (Heidarie Golafzani et al. 2020), but also is a feasible alternative to pile load testing (Kozłowski and Niemczynski 2016). A number of past studies (Bazaraa and Kurkur 1986; Biarez and Foray 1977; Shioi and Fukui 2021) have used SPT results and proposed several empirical formulas to determine bearing capacity in sandy soil. Also, some studies have proposed several formulas to determine the bearing capacity for various types of soils including sand and clay. However, previous predictive models relied on basic regression techniques, resulting in the unreliability of predictions. Given advancements in data science and artificial intelligence, there is a pressing requirement to construct predictive models for pile bearing capacity utilizing machine learning algorithms.

Machine learning, a subset of artificial intelligence, draws insights from historical data by adaptively learning patterns, much like human brain functions (Mirrashid and Naderpour 2021; ur Rehman et al. 2024; Rehman et al. 2022). Recent research suggests that machine learning could serve as an alternative to traditional empirical methods and field tests for estimating the maximum capacity of structures (Alwanas et al. 2019; Mangalathu et al. 2022; Mohammed et al. 2020; Sarothi et al. 2022; Todorov and Billah 2022). Artificial neural networks (ANN) have been employed to estimate the friction capacity of driven piles in clay, supported by literature data for training the model (Nguyen et al. 2020). Armaghani et al., (Armaghani et al. 2020) formulated a hybrid intelligence model, combining ANN and particle swarm optimization (PSO), to predict pile settlement. Using data from various piles embedded in rock mass, the model was designed. Sensitivity analyses were then conducted to pinpoint key PSO parameters influencing prediction accuracy. Ghorbani et al. (2018) combined ANN and fuzzy inference system to predict the ultimate bearing capacity of axially loaded piles, using 108 load test data from the literature, including the Cone Penetration Test (CPT) measurements (Ghorbani et al. 2018). Momeni et al. (2015) introduced an ANN method to predict the axial bearing capacity of concrete piles. This model was trained using data from 36 field tests conducted with a pile driving analyzer, considering pile geometry and soil investigation results (Momeni et al. 2015). There have been few studies on utilizing pile load test data i.e., pile diameter, pile length, and along with SPT test results for establishing quick predictive models. Some studies have used single machine learning algorithms like Random Forest (RF), ANN and RF, Support Vector Regression (SVR), XGBoost, AdaBoost, and Decision Tree (DT) and compared their predicting performances. The others have focused on hybridized algorithms for example, the utilization of Genetic Algorithms (GA) to tune Deep Learning Neural Networks (Pham et al. 2020), the hybridization of Random Forest through Particle Swarm Optimization (Pham and Tran 2022), enhancement of Least Squares Support Vector Regression through ordinary differential flower pollination (ODFP) metaheuristic (Hoang et al. 2022), and the exploration of XGBoost parameter optimization through the Whale Optimization Algorithm, These studies not only laid the foundation for using pile load test data for bearing capacity determination but also demonstrate the effectiveness of hybridization and fine-tuning with algorithms like GA, PSO, and Whale Optimization Algorithms (WOA). These adaptations have been shown to significantly surpass the performance of standard models. However, relying solely on individual machine learning tools or single metaheuristic optimizers, such as GA, PSO, or WOA, risks missing the mark for global optimization. This risk may stem from falling into local minima traps or from the fact that these optimizers themselves require hyperparameter tuning, sometimes complicating simpler tasks. Moreover, it is not guaranteed that these models perform well in all scenarios; they might exhibit promising accuracy in one setting but falter in another, particularly as different terrains present unique challenges. Despite academic research limitations, the implementation of these models in the field remains unexplored, thus reducing the urgency for quick methods. Considering this discussion, there is a need for continued exploration beyond a single optimization technique. Proposing optimized algorithms that can be applied to predict the load-bearing capacity of driven piles is essential. Based on the findings of studies (Nguyen et al. 2023; Ge et al. 2023; Li and Li 2024; Yu 2024; Chala and Ray 2023), RF, SVR, and XGBoost are selected for the current study due to their proven ability to address complex and nonlinear problems, as well as their successful application in similar pile foundation tasks. These algorithms are particularly effective for high-dimensional and noisy datasets commonly encountered in pile load-bearing capacity prediction. RF and XGBoost, as tree-based ensemble methods, model relationships by iteratively partitioning the input space through decision trees, while SVR approximates the input–output relationship using a kernel-based optimization framework. However, these algorithms require further optimization to ensure reliable model performance, minimize bias in training on noisy data, and improve their generalizability and applicability. However, to the authors’ best knowledge, no previous study has applied Random Search (RS) and Grid Search (GS) optimization techniques to enhance the performance of these widely adopted algorithms for enhanced modeling of pile bearing capacity for quick and reliable determination without conducting extensive pile-load tests. This study aims to fill this gap by employing these advanced machine learning algorithms to model the pile bearing capacity and fine tuning these algorithms using RS and GS techniques, incorporating cross-validation (CV) to enhance prediction accuracy. Validation of these models is rigorously conducted through learning curves, cross-validation scores, and tests on independent validation data. To facilitate the preliminary evaluation of pile bearing capacity before conducting actual pile load tests, or for small projects where pile load test may be cost-prohibitive, a web application can serve as a practical and efficient tool. This approach refines the accuracy of the models and ensures their practical reliability, underscoring a commitment to clarity and reproducibility.

Methodology

Modeling data

Data description

The schematic illustration of the methodology for the current study is shown in Fig. 1. The dataset employed for this research was sourced from the work of Pham and Tran (Pham et al. 2020). This dataset comprised the results of field tests conducted on 472 pre-cast reinforced concrete piles in Ha Nam Province, Vietnam. These piles, characterized by their square cross-sections and closed tips, were installed using a hydraulic jack-in apparatus operating at a uniform velocity. The structure of the pile along with its geometric variables and the layers of soil surrounding it are shown in Fig. 2. It is noteworthy that the piles underwent testing no sooner than 7 days after installation. The testing procedure entailed progressively increasing vertical loads to 100%, 150%, and 200% of the design load, spanning periods of approximately 6 h, 12 h, and 24 h, respectively.

The pile bearing capacity is determined based on one or both of the following criteria: (1) The pile's settlement at the current load matches or exceeds five times the settlement observed at the previous load level, or (2) The load-settlement curve is nearly linear. The pile bearing capacity corresponds to the load causing a settlement exceeding 10% of the pile's diameter. The pile bearing capacity corresponds to the load causing a settlement exceeding 10% of the pile's diameter. There are 10 input parameters i.e., pile diameter (D), depth of first soil layer (Z1), depth of second soil layer (Z2), depth of third soil layer (Z3), elevation of pile top (Zp), elevation of ground level (Zg), elevation of pile extra segment of pile top (Zt), elevation of pile tip (Zm), average SPT count along pile shaft (Nsh), average SPT count at pile tip (Nt) and one output parameter the bearing capacity (Pu). Meanwhile, the average SPT value (Nt) is calculated according to Pham et al. (2020): considering values from 8 times the pile diameter (8D) above and 3 times the pile diameter (3D) below the pile tip, where D represents the pile's diameter. Additionally, Nsh is calculated by measuring the SPT blow count (N) at intervals of 300 mm (about 11.81 inches) along the embedded length of the pile. The histograms of inputs and outputs are shown in Fig. 3, and Table S1 provides insights into the data.

Input analysis

The specific selection of this data is based on its parameters, which directly influence pile bearing capacity. These parameters encompass pile geometry, thickness of soil layers, and soil characteristics obtained through SPT results. Furthermore, SPT blow counts are measured along the pile length to assess soil compressibility, a crucial factor in our analysis. This dataset is meticulously structured to encompass critical aspects of both pile and soil characteristics, aligning perfectly with the core objectives of our research and ensuring the accuracy of bearing capacity predictions.

The dataset comprises approximately 11 parameters, each with its statistical significance as depicted in Table 1. For parameters D and Zg, low variability and a narrow range indicate that these features may not offer significant unique information for a model unless the target variable is closely associated with subtle changes in these features. Parameters Z1, Zp, Zt, and Nt exhibit moderate variability, and their range suggests that these features may provide useful information to a model, particularly if they are correlated with the target variable. Similarly, parameters Z2, Zm, and Nsh display high variability, and a wide range suggests that these features may serve as important predictors, provided that their variability represents meaningful information rather than mere noise. Parameter Z3 contains a large number of zeros because it indicates the depth of the third layer of soil.

In this study, feature selection was a crucial preliminary step before data analysis, recognizing that irrelevant or redundant features can adversely impact machine learning models. Such factors may lead to enhanced computational demands and reduced predictive accuracy. Therefore, for feature selection, recommendations from the study by Minh-Tu et al. (Cao et al. 2022) were taken into account. Irrelevant features, that do not contribute to understanding pile bearing capacity, exhibit statistical insignificance, or demonstrate dependent relationships, were eliminated. From the adopted dataset, features such as the elevation of the Zt and Zg were removed due to their weak correlation, low to moderate variability, and lack of domain relevance. Subsequently, the feature representing the elevation of the pile's lower head (Zm) was eliminated despite its strong correlation and high variability because of its dependent nature and redundancy, which could adversely affect the model. Specifically, Zm can be derived by summing the length of the pile embedded in each soil stratum (Z1, Z2, Z3) with the Zp. Additionally, further correlation coefficient analysis was conducted to reinforce the feature selection process, as illustrated in Fig. 4 and 7 input features were selected for modeling.

Preprocessing methods

Real-world data contains lots of noise, inconsistencies, and redundant information. The current dataset used in this study is extensive but exhibited significant skewness and outliers that could potentially bias the model. To address this, a detailed preprocessing procedure, including transformations and outlier handling, was implemented. These steps improved the symmetry of the data and reduced the presence of outliers, making the dataset more suitable for training machine learning models.

For the present data, a statistical approach named Gaussian approximation was implemented through a Python library Feature-engine (Galli 2021). It finds data points that lie further than 3 times standard deviations from the mean as outliers and removes outliers on both ends (tails) of observations.

Moreover, initially, the raw dataset underwent a preprocessing phase, where it is normalized using the MinMaxScaler function from scikit-learn. This preprocessing function re-scales each feature in the dataset to the range of 0 to 1, thereby ensuring that the features have the same scale. This is a crucial step, as it mitigates the potential for feature dominance and facilitates a more balanced learning process for the algorithms used.

Modeling and evaluation approaches

Three distinct machine learning models were used in this study i.e., RF, SVR, and Boost. RF aggregates the decision trees and forms a forest of them (Xue et al. 2021). Random forests generally outperform decision trees in terms of speed, accuracy, and computational cost (Gong et al. 2018). In this study, Python’s scikit-learn library is used for RF implementation, with hyperparameters tuned for optimal performance, as detailed in Table 2 (Zhu et al. 2022). Meanwhile, SVR, an extension of the Support Vector Machine for regression, is effective for handling nonlinear relationships and ensuring high prediction accuracy (Thomas et al. 2017). SVR finds an optimal hyperplane to bound predictions within a margin and is tuned using parameters like C, ε, and gamma, as shown in Table 3 (Astudillo, et al. 2020; Faris et al. 2018). XGBoost, a gradient-boosting algorithm, excels in predictive accuracy and efficiency, using regression trees to minimize prediction errors (Alibrahim and Ludwig 2021). It handles large datasets and complex relationships between input variables and the target variable (Marinov and Karapetyan 2019). Key hyperparameters such as "max_depth", "learning_rate", and "gamma" influence the model performance; their selected values for the current study are presented in Table 4 (Rehman et al. 2025; Prayogo et al. 2020; Nguyen et al. 2021).

Moreover, K-fold cross validation (CV) is adopted in this study to develop models and avoid training bias caused by relying on a single data subset. In addition, hyperparameter tuning, which adjusts settings before training, is employed to enhance the modeling process (Probst et al. 2019; Sipper 2022). This involves comparing performance against previous configurations to identify the optimal setup (Elgeldawi et al. 2021). Two search-based algorithms are employed in this study to optimize machine learning models. The Random Search (RS) approach involves randomly selecting hyperparameter combinations from a search space to efficiently explore and identify optimal settings for a model (Ren, et al. 2022). RandomizedSearchCV (RSCV), the Python implementation of RS, samples fixed combinations of hyperparameters using the "param_distributions" and "n_iter" arguments and incorporates cross-validation for robust performance estimation. It stores results in "cv_results" and identifies the best-performing model through "best_estimator" and "best_params" (Sarajcev and Meglic 2022), as shown in Fig. 5. In contrast, Grid Search (GS) systematically explores all possible hyperparameter combinations using the "param_grid" argument in GridSearchCV (GSCV), with a focus on model performance and generalization (Alibrahim and Ludwig 2021; Marinov and Karapetyan 2019). GSCV begins with a wide search space and narrows it down based on literature insights to ensure optimal tuning and generalization (Belete and Huchaiah 2022), as illustrated in Fig. 6.

Meanwhile, this study utilized various model metrics to evaluate the performance of ML regression models by assessing their prediction accuracy on training and test datasets. For this purpose, the coefficient of determination (R2), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE) and Mean Squared Error (MSE) are considered to gauge the statistical performance of the models as follows.

where \({E}_{i}\) is predicted value, \({M}_{i}\) is real value, \({\overline{E} }_{i}\) is the mean of predicted values and \(n\) is total number of data points. In addition, learning curves are also used which assist in understanding how a model's performance changes with the size of the training data. They play a crucial role in various machine learning scenarios, helping with data acquisition, determining when to stop model training, and selecting the appropriate model (Mohr and Rijn 2201). Models are also evaluated using cross-validation scores for both training and testing, as well as assessing standard training and testing scores.

Results and discussion

Preprocessed data

The database after removing outliers consists of 447 data points and 8 parameters. The statistical summary of the cleaned data points is presented in Table 5. For this data, the D ranges from 300 to 400 mm, with a mean of 361.74 mm and a standard deviation of 48.65 mm. The Z1, Z2, and Z3 exhibit mean values of 3.801 m, 6.815 m, and 0.346 m, respectively, with varying standard deviations, highlighting the variability in layer thicknesses. The parameter Zp has a mean of 2.815 m, ranging from 1.95 to 3.4 m. The Nsh and the Nt show consistent averages of 10.965 and 7.177, respectively. The Pu varies widely, with values ranging from 407.2 to 1551, and a mean of 1005.519, reflecting significant variability across the dataset. Generally, outlier removal has led to slight changes in the mean, while the median and mode have remained mostly consistent. This is because outliers have a greater impact on the mean but less on the median and mode, which are more robust measures of central tendency.

For Z1, the mean has decreased from 3.8238 to 3.8018, skewness has decreased from 0.5477 to 0.5034, resulting in a change in range of –0.97. For Z2, the mean has increased from 6.579 to 6.8158, skewness has increased from −1.0327 to −0.3564, signifying a shift towards symmetry, with a change in range of −3.56. For Z3, the mean has slightly increased from 0.3315 to 0.3462, skewness has reduced from 0.8428 to 0.7459, indicating a move towards a more symmetric distribution, with a change in the range of −0.47. For Zp, the mean has slightly changed from 2.8038 to 2.8153, skewness has also slightly moved from −0.3423 to −0.3125, implying a small move towards symmetry, with a change in range of −0.73. For Nsh, the mean has increased from 10.7426 to 10.9660, skewness has moved from −0.2149 to −0.0408, indicating a more symmetric distribution post-outlier removal, with a change in range of −4.73. For Nt, the mean has increased from 7.0557 to 7.1776, and skewness has substantially changed from −1.9806 to almost 0.0071, implying a significant move towards symmetry, with a change in range of −2.33. Finally, for Pu, the mean has increased from 984.2014 to 1005.5187, skewness has moved from −0.0910 to −0.1725, indicating a slight move towards negative skewness, with no change in range.

In summary, the range has consistently decreased, indicating the successful removal of extreme values and resulting in a more compact dataset. The mode remains consistent across all inputs. The increase in the mean and the substantial shift in skewness from a negative value to one closer to zero suggest that the outliers were predominantly on the lower side, making the original distribution left-skewed. The slight reduction in both mean and skewness further indicates that the outliers had been slightly pulling the mean upward, making the distribution more positively skewed.

For each input parameter, minimum and maximum values are changed. After data normalization, the summary of rescaled data is shown in Table 6. The range of all input parameters was from 0–1 for normalized data with a standard deviation of 0.352 to 0.486. For the present study, the preprocessed data is divided into training, testing, and validation datasets. Specifically, 90% of data is used during hyperparameter tunning process, where cross-validation divides the data into multiple folds. In each fold, a portion of the data is used for training the model, while the remaining folds are used for testing. This iterative process ensures that this 90% of the data contributes to both training and testing the models. which is further divided through CV. Additionally, the remaining 10% of data is held out throughout the model development process and serves only as the external validation dataset to evaluate final model performance.

Optimized hyperparameters

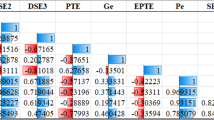

All the models undergo a search process to find the model with the highest mean scoring metric of five folds (i.e., K = 5). The best set of hyperparameters, which are shown in Tables 7, 8, and 9, along with corresponding mean train and test metrics, are presented in Fig. 7. There's a noticeable, though not substantial, difference between the mean training and test scores. This hints at slight overfitting, suggesting that the model might be capturing some noise present in the training data. The Fig. 7b, d, f and h contains the standard deviation between folds. A lower standard deviation, which is basically between model metrics during each fold split, indicates that the model's performance is consistent across different subsets of the dataset. The standard deviations of the metrics across the five folds (Fig. 7b, d, f, g, and h), particularly for the test scores, hint at the model's stability. R2train (Fig. 7a) is higher than R2test (Fig. 7c), which indicates there is some indication of the model capturing noise or exhibiting mild overfitting. As the model undergoes a cross-validation process, a scatter plot during each fold is shown in Fig. 8.

Performance evaluation

Evaluation of tuned models through learning curves

To gain insights into models that are presumably well-optimized for the dataset, learning curves are plotted. The variance (standard deviation) around both the training and validation scores provides an idea of how much variation exists in performance across different cross-validation folds.

For RF, the learning curve (Fig. 9) using RS with R2 (Fig. 9a) for the training score curve, starting from 0.921 and ending around 0.949, indicates the model has a high goodness-of-fit on the training data. The slight dip and subsequent plateau suggest that with more data, the model's performance on the training set stabilizes. Regarding the validation curve, starting from 0.872 and ending around 0.929 shows that as the model is trained with more data, its ability to generalize improves, but there's still a gap between training and validation, suggesting slight overfitting. The same behavior can be observed in the curve with RMSE on the y-axis (Fig. 9b). For RF, learning curves depicting model fit with optimal hyperparameters obtained through GS (Fig. 10) with R2 on the y-axis are displayed in Fig. 10a. These curves exhibit similar behavior to those derived from RS, indicating that RS performed adequately in identifying a set of hyperparameters comparable in performance to those discovered by GS. The slight upward trend observed at the end of the validation curve can be interpreted as a positive sign – suggesting that the model may benefit from additional training data, potentially leading to further improvement in its generalization capability. Employing both R2 and RMSE for learning curves provides a comprehensive understanding of the model's behavior. While R2 offers insights into the model's data-fitting ability, RMSE quantifies the magnitude of the error. The insights gleaned from these learning curves are valuable for comprehending the model's behavior and identifying potential areas for enhancement. The learning curves for SVR (Fig. 11) with optimal hyperparameters obtained from both RS (Fig. 11a and b) and GS (Fig. 11b and d) exhibit concurrent dips in the training and validation curves around 192 samples, suggesting that the model encountered challenges with that specific subset of the training data. This occurrence could be attributed to particular characteristics or noise within those samples. Furthermore, the downward trend observed in the validation curve towards the end suggests a potential slight overfitting of the model, particularly evident as the gap between the training and validation scores persists.

For XGBoost, the learning curve with optimal hyperparameter of RS (Fig. 12a and b), the training curve starts from 0.925 and steadily increases to stabilize at 0.954 and the validation curve starts from 0.876 and steadily increases to stabilize at 0.93. The consistent and small gap between the training and validation curves throughout suggests that the model is neither overfitting nor underfitting significantly. This indicates that the model generalizes well to unseen data. For the learning curve with optimal hyperparameters obtained from GS, as shown in Fig. 13a and b, the training curve initiates from a high point of 0.925, experiences a slight dip around 100 samples, though not significantly, and eventually stabilizes around 0.970. In contrast, the validation curve begins at 0.825, undergoes a minor decline around 225 samples, but ultimately stabilizes around 0.93. Although the gap between the training and validation curves diminishes over time, it persists around 0.04 by the end. While this difference is small, it is noticeable, indicating a mild overfitting as the model demonstrates a slightly superior fit on the training data compared to the validation data.

The model trained with RS (Fig. 12a and b) hyperparameters exhibits a narrower gap between the training and validation sets, suggesting it is slightly more robust than the one trained with GS (Fig. 13a and b) hyperparameters. Despite achieving a higher R2 on the training set, the GS model displays a more prominent gap, indicating mild overfitting. The slight declines observed in the curves (at 100 samples for the training curve and 224 samples for the validation curve) in the GS (Fig. 13a and b) model might be attributed to specific data subsets, noise, or transient overfitting during training. Nevertheless, the R2 scores are relatively high for both models, signifying good predictive capabilities.

Evaluation of tuned models on validation data

To evaluate and compare the performance of the tuned models, all models were validated on 10% of the data, which was set aside at the beginning of the process and not used in in model development. The results were then compared with those of untuned or base models (Fig. 14). Initially, XGBoost outperformed the other models with an R2validation of 0.923 (Fig. 14a) and relatively lower error metrics (MAEvalidation = 69.056, RMSEvalidation = 98.091, MSEvalidation=9621.96), showcasing its superior ability to capture the complexities in the data. RF and SVR also performed reasonably well but had higher errors, indicating room for improvement (Fig. 14). Hyperparameter tunned models significantly performed better than base models. For RS-RF, it led to substantial improvements, with GS-RF (Fig. 14). This highlighted the value of an exhaustive search in fine-tuning model parameters, particularly for complex tasks like predicting load-bearing capacity. Similarly, RS-SVR and GS-SVR showed notable gains from tuning, though it still lagged tunned RF and XGBoost, even after optimization.

XGBoost, however, benefited the most from tuning. The GSCV-tuned XGBoost model (GS-XGBoost) achieved the highest accuracy across all models, with an R2 of 0.952 (Fig. 14a) and further reduced error metrics (MAEvalidation = 60.88, RMSEvalidation = 76.45, MSEvalidation = 5844.96). This demonstrates that XGBoost, when fine-tuned, offers exceptional predictive power and reliability, making it the most robust model for this application. Overall, while all models improved with tuning, XGBoost stood out as the most effective tool, particularly when paired with GSCV for hyperparameter optimization. However, the differences in performance between the hyperparameter tuning methods are relatively small due to the higher number of iterations during RS. Overall, the results show the GS technique for hyperparameter tuning tends to yield slightly better results compared to RS, as evidenced by the higher R2 and lower error metrics (i.e., MAE, RMSE, and MSE). Overall, this analysis can guide the selection of models and hyperparameter tuning methods for similar tasks, emphasizing the importance of model selection and hyperparameter optimization in achieving optimal performance in machine learning tasks. The graphs in Fig. 15 show the predicted values versus the actual values. Each algorithm, when tuned using RSCV, was compared against its counterpart tuned with GSCV.

For the simultaneous visualization of multiple statistical measures, the predictions of each tuned model are evaluated on a Taylor diagram (Fig. 16). This analysis reveals that models tuned using the Grid Search technique, particularly the XGBoost and RF algorithms, demonstrate superior performance. They exhibit R2 of 0.977 and 0.9769, and standard deviations of 338.552 and 336.988, respectively. This superiority is also evident in their lower RMSE values, indicating a strong linear relationship with actual values and minimal prediction errors. Additionally, the XGBoost model tuned through a RS technique shows promising results. The Grid Search—XGBoost (GS-XGBoost) model, in particular, stands out as the top performer, exhibiting the highest correlation and the lowest RMSE among all models evaluated. This suggests the remarkable capability of this model in capturing the underlying relationships in the data, making it a reliable choice for similar datasets or prediction tasks.

The predictions by turned models on 10% validation data are presented in Table S2. All these tuned models are also evaluated by finding the distribution of errors, which is shown in Fig. 17 by revealing their performance through the spread of their error distributions. The overall graphs (Fig. 17) suggest that the models have their errors symmetrically distributed around zero, which indicates no significant bias in overestimating or underestimating. The tuned RF (Fig. 17a) and XGBoost (Fig. 17c) models show a tight clustering of errors when refined through GS, implying a potential refinement in prediction accuracy. The tuned SVR models (Fig. 17c) exhibit a broader spread in errors, indicating a range of predicted deviations. The visual overlap in error ranges between Random and Grid Search suggests a nuanced difference in their tuning effectiveness.

Comparison with existing models

The prediction performance of the proposed tuned ML model, GS-XGBoost, was compared against those of other proposed models in the literature (Table 10). The performance indices of existing models were obtained based on data points used in the current study for model development ensuring comparability. It can be observed that the GA-tuned models significantly decreased the error of DLNN and RF. Also, metaheuristics approaches like PSO, and WAO also showed promising performance. Meanwhile, GS-XGBoost showed superior performance amongst all previously proposed models, with R2 value of 0.952 outperforming alternatives such as GA-DLNN (R2 = 0.923) and RF-PSO (R2 = 0.924). The superior performance of GS-XGBoost can be explained based on different factors; firstly, the grid search optimization systematically explored the hyperparameter space, ensuring the best configuration for capturing the complex, nonlinear relationships inherent in the current dataset. Secondly, the XGBoost algorithm's inherent strengths, including gradient boosting with regularization, made it more effective at handling the high-dimensional, noisy, and variable geotechnical data compared to models like DLNN, which are more prone to overfitting, and RF, which may struggle with bias due to simpler ensembling (Belete and Huchaiah 2022). Additionally, GS offers a balanced exploration of the hyperparameter space, unlike stochastic methods such as PSO and WAO, which, while promising, may require larger computational budgets and may not always converge to the global optimum. This systematic approach, coupled with XGBoost's robustness and computational efficiency, allowed GS-XGBoost to achieve superior performance in both accuracy and generalization. Although differences in computational budgets and optimization strategies exist among these methods, the results highlight the effectiveness of the GS-XGBoost model proposed in this study, which leverages the combination of systematic tuning and the algorithmic strengths of XGBoost to deliver outstanding performance in static pile load test predictions.

Field Implications

Determining the pile loading bearing capacity is a significant geotechnical challenge as it directly impacts the stability and safety of constructions. Although pile loading tests are accurate and reliable across various design stages, their high costs and time requirements often deter designers from conducting field tests. Despite advancements in testing methodologies and analytical tools, this process remains challenging and often involves considerable uncertainty. The current study proposed an enhanced machine learning-based model for predicting pile bearing capacity by comprehensively applying optimization techniques and rigorously evaluating the models. To extend the practical implications of this research, the author developed a web-based application using the proposed model to assist engineers and designers in leveraging the best-performing model in real-world scenarios. The application accepts input parameters such as soil layer thickness, pile diameter, and SPT values along the shaft and tip, and computes the pile bearing capacity. This pile bearing capacity corresponds to a settlement of 10% of the pile diameter. The web application is accessible at: https://pilebearingcapacity.streamlit.app/. By using this tool, users can make informed decisions during the preliminary evaluation of piles based on readily known parameters from the field. Additionally, the application allows users to adjust various parameters and analyze the desirable soil properties for achieving specific pile bearing capacity thresholds. This enables engineers to explore different design scenarios, aiding in effective decision-making during the early stages of earthwork projects involving pile construction.

Moreover, it is important to note that the current model is trained on a specific range of data, as described earlier. Therefore, its application is limited to these ranges which are also indicated in the web-based application. Additionally, such models are suitable for the preliminary evaluation (Rehman et al. 2025; Prayogo et al. 2020); therefore, for detailed assessments, pile bearing capacity tests are recommended considering the nature of the project. To further enhance the applicability of the proposed models, future studies could expand the dataset used in this research. Although an extensive dataset has already been utilized, future enhancements could focus on broadening the input parameter ranges, incorporating additional parameters or material property information, and including more tests and bearing capacity data.

Conclusion

This study delved into the application of RS and GS optimized machine learning techniques for predictive modeling of pile bearing capacity. Leveraging an extensive dataset curated from literature, this study explored the efficacy of various machine learning algorithms including RF, SVM, and XGBoost. Through systematic experimentation, multiple models were generated and the performance of machine learning algorithms was refined by integrating RSCV and GSCV techniques. The best models for predicting pile-bearing capacity are identified using comprehensive evaluation criteria. The following are the main findings of this study.

-

Both RS and GS-tuned machine learning models exhibit remarkable accuracy, boasting R2 values exceeding 0.9 and RMSE values less than 100 kN across both testing and training datasets. Notably, GS demonstrates a marginally superior statistical performance compared to RS. Furthermore, our tuned models with RS and GS showcase commendable performance on the validation dataset, with GS consistently outperforming RS.

-

In terms of machine learning model performance, XGBoost emerges as the top performer across training, testing, and validation datasets, followed closely by Random Forest and Support Vector Machine. This underscores the effectiveness of tree-based algorithms in effectively capturing the intricate geotechnical variability inherent within pile bearing data.

-

The models proposed in this study serve as invaluable tools for predicting the preliminary evaluation of pile bearing capacity, enabling swift and cost-effective geotechnical characterization within an acceptable error margin. This research contributes to the advancement of predictive modeling in geotechnical engineering and underscores the significance of leveraging machine learning methodologies for informed decision-making in civil engineering applications.

This study also presents a web-based application for evaluating pile bearing capacity using the best-performing model. However, this model is applicable to specific input ranges and is recommended for preliminary evaluations. For detailed design, pile bearing capacity tests are advised. Future studies could focus on expanding the applicability of the proposed model by improving the dataset and utilizing advanced optimization techniques and error analysis across the subset for model training and testing.

Data availability

Data will be made available on request.

References

Alibrahim H, Ludwig SA (2021) Hyperparameter optimization: comparing genetic algorithm against grid search and bayesian optimization. In: 2021 IEEE congress on evolutionary computation pp 1551–1559

Alwanas AAH et al (2019) Load-carrying capacity and mode failure simulation of beam-column joint connection: Application of self-tuning machine learning model. Eng Struct 194:220–229

Armaghani DJ et al (2020) On the use of neuro-swarm system to forecast the pile settlement. Appl Sci 10(6):1904

Astudillo G et al (2020) Copper price prediction using support vector regression technique. Appl Sci 10(19):6648

Bazaraa A, Kurkur M (1986) N-values used to predict settlements of piles in Egypt. In: Use of in situ tests in geotechnical engineering, ASCE

Belete DM, Huchaiah MD (2022) Grid search in hyperparameter optimization of machine learning models for prediction of HIV/AIDS test results. Int J Comput Appl 44(9):875–886

Biarez J, Foray P (1977) Bearing capacity and settlement of pile foundations. J Geotech Geoenviron Eng 103:348–350

Cao M-T, Nguyen N-M, Wang W-C (2022) Using an evolutionary heterogeneous ensemble of artificial neural network and multivariate adaptive regression splines to predict bearing capacity in axial piles. Eng Struct 268:114769

Chala AT, Ray R (2023) Assessing the performance of machine learning algorithms for soil classification using cone penetration test data. Appl Sci 13(9):5758

Drusa M, Gago F, Vlček J (2016) Contribution to estimating bearing capacity of pile in clayey soils. Civil Environ Eng 12(2):128–136

Elgeldawi E et al (2021) Hyperparameter tuning for machine learning algorithms used for arabic sentiment analysis. Informatics 8(4):79

Faris H et al (2018) A multi-verse optimizer approach for feature selection and optimizing SVM parameters based on a robust system architecture. Neural Comput Appl 30:2355–2369

Galli S (2021) Feature-engine: A Python package for feature engineering for machine learning. J Open Source Softw 6(65):3642

Ge Q, Li C, Yang F (2023) Support vector machine to predict the pile settlement using novel optimization algorithm. Geotech Geol Eng 41(7):3861–3875

Ghorbani B et al (2018) Numerical ANFIS-Based Formulation for Prediction of the Ultimate Axial Load Bearing Capacity of Piles Through CPT Data. Geotech Geol Eng 36(4):2057–2076

Gong H et al (2018) Use of random forests regression for predicting IRI of asphalt pavements. Constr Build Mater 189:890–897

HeidarieGolafzani S, JamshidiChenari R, Eslami A (2020) Reliability based assessment of axial pile bearing capacity: static analysis, SPT and CPT-based methods. Georisk: Assess Manag Risk Eng Syst Geohazards 14(3):216–230

Hoang N-D, Tran X-L, Huynh T-C (2022) Prediction of pile bearing capacity using opposition-based differential flower pollination-optimized least squares support vector regression (ODFP-LSSVR). Adv Civil Eng 2022:7183700

Kozłowski W, Niemczynski D (2016) Methods for estimating the load bearing capacity of pile foundation using the results of penetration tests-case study of road viaduct foundation. Procedia Eng 161:1001–1006

Li Y, Li T (2024) Prediction of pile settlement using hybrid support vector regressor. Multiscale Multidiscip Mod Exp Des 7(3):2103–2120

Liu Q, Cao Y, Wang C (2019) Prediction of ultimate axial load-carrying capacity for driven piles using machine learning methods. In: 2019 IEEE 3rd information technology, networking, electronic and automation control conference (ITNEC). IEEE, pp 334–340

Mangalathu S et al (2022) Machine-learning interpretability techniques for seismic performance assessment of infrastructure systems. Eng Struct 250:112883

Marinov D, Karapetyan D (2019) Hyperparameter optimisation with early termination of poor performers. In: 2019 11th computer science and electronic engineering (CEEC). IEEE, pp 160–163

Mirrashid M, Naderpour H (2021) Recent trends in prediction of concrete elements behavior using soft computing (2010–2020). Arch Comput Methods Eng 28:3307–3327

Mohammed M et al (2020) Shallow foundation settlement quantification: application of hybridized adaptive neuro-fuzzy inference system model. Adv Civil Eng 2020:1–14

Mohr F, van Rijn JN (2022) Learning Curves for Decision Making in Supervised Machine Learning--A Survey. arXiv preprint arXiv:2201.12150

Momeni E et al (2015) Application of artificial neural network for predicting shaft and tip resistances of concrete piles. Earth Sci Res J 19(1):85–93

Nguyen T-A et al (2020) Estimation offriction capacity of driven piles in clay using. Vietnam J Earth Sci 42(2):265–275

Nguyen H et al (2021) Efficient machine learning models for prediction of concrete strengths. Constr Build Mater 266:120950

Nguyen H et al (2023) A novel whale optimization algorithm optimized XGBoost regression for estimating bearing capacity of concrete piles. Neural Comput Appl 35(5):3825–3852

Nguyen T-H et al (2024) Efficient hybrid machine learning model for calculating load-bearing capacity of driven piles. Asian J Civil Eng 25(1):883–893

Pham TA, Tran VQ (2022) Developing random forest hybridization models for estimating the axial bearing capacity of pile. PLoS ONE 17(3):e0265747

Pham TA et al (2020) Design deep neural network architecture using a genetic algorithm for estimation of pile bearing capacity. PLoS ONE 15(12):e0243030

Prayogo D et al (2020) Combining machine learning models via adaptive ensemble weighting for prediction of shear capacity of reinforced-concrete deep beams. Eng Comput 36:1135–1153

Probst P, Boulesteix A-L, Bischl B (2019) Tunability: Importance of hyperparameters of machine learning algorithms. J Mach Learn Res 20(1):1934–1965

Rehman Z, Khalid U, Ijaz N, Mujtaba H, Haider A, Farooq K, Ijaz Z (2022) Machine learning-based intelligent modeling of hydraulic conductivity of sandy soils considering a wide range of grain sizes. Eng Geol 311:106899

Rehman Z, Khalid U, Ijaz N, Ijaz Z (2025) Big data-driven global modeling of cohesive soil compaction across conceptual and arbitrary energies through machine learning. Transp Geotech 50:101470

Ren YM et al (2022) A tutorial review of neural network modeling approaches for model predictive control. Comput Chem Eng 165:107956

Sarajcev P, Meglic A (2022) Error analysis of multi-step day-ahead PV production forecasting with chained regressors. J Phys Conf Ser 2369:012051

Sarothi SZ et al (2022) Predicting bearing capacity of double shear bolted connections using machine learning. Eng Struct 251:113497

Shioi Y, Fukui J (2021) Application of N-value to design of foundations in Japan. Penetration Testing, vol 1. Routledge, pp 159–164

Shooshpasha I, Hasanzadeh A, Taghavi A (2013) Prediction of the axial bearing capacity of piles by SPT-based and numerical design methods. Geomate J 4(8):560–564

Sipper M (2022) High per parameter: A large-scale study of hyperparameter tuning for machine learning Algorithms. Algorithms 15(9):315

Thomas S, Pillai GN, Pal K (2017) Prediction of peak ground acceleration using ϵ-SVR, ν-SVR and Ls-SVR algorithm. Geomat Nat Haz Risk 8(2):177–193

Todorov B, Billah AM (2022) Machine learning driven seismic performance limit state identification for performance-based seismic design of bridge piers. Eng Struct 255:113919

ur Rehman Z, Aziz Z, Khalid U, Ijaz N, ur Rehman S, Ijaz Z (2024) Artificial intelligence-driven enhanced CBR modeling of sandy soils considering broad grain size variability. J Rock Mech Geotech Eng In press, https://doi.org/10.1016/j.jrmge.2024.05.048.

Xue L et al (2021) A data-driven shale gas production forecasting method based on the multi-objective random forest regression. J Petrol Sci Eng 196:107801

Yu S (2024) Prediction of pile settlement by using hybrid random forest models. Multiscale Multidiscip Model Exp Des 7(3):2087–2101

Zhu N et al (2022) Optimization of the Random Forest Hyperparameters for Power Industrial Control Systems Intrusion Detection Using an Improved Grid Search Algorithm. Appl Sci 12(20):10456

Acknowledgements

NUST Islamabad is acknowledged for technical support.

Funding

Not applicable.

Author information

Authors and Affiliations

Contributions

S.J. Arabi: Methodology, Data Curation, Investigation, Data curation, Formal analysis, Software, Visualization, Writing original draft; Z. Rehman: Conceptualization, Methodology, Resources, Supervision, Validation, Writing-review and editing; W. Hassan: Data Curation, Validation, Methodology, Visualization, Writing-review and editing; U. Khalid: Validation, Methodology, Visualization, Writing-review and editing; N. Ijaz: Validation, Methodology, Visualization, Writing-review and editing; Z. Maqsood: Validation, Visualization, Writing-review and editing; A. Haider: Validation, Methodology, Writing-review and editing.

Corresponding author

Ethics declarations

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Communicated by: Hassan Babaie

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Arbi, S.J., Rehman, Z.u., Hassan, W. et al. Optimized machine learning-based enhanced modeling of pile bearing capacity in layered soils using random and grid search techniques. Earth Sci Inform 18, 332 (2025). https://doi.org/10.1007/s12145-025-01784-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12145-025-01784-2