Abstract

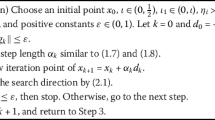

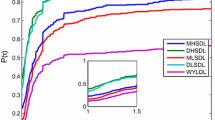

In this paper, we introduce some modifications of the classic Dai–Yuan conjugate gradient method for solving large-scale unconstrained optimization problems. Our modifications are based on four improved secant conditions. We show that the presented methods inherit the global convergence property of the Dai–Yuan method. Furthermore, we demonstrate the favorable numerical behavior of these modifications in two sets of numerical experiments.
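For readers unfamiliar with the baseline method, the following is a minimal sketch of the classic Dai–Yuan iteration, $d_{k+1} = -g_{k+1} + \beta_k^{DY} d_k$ with $\beta_k^{DY} = \|g_{k+1}\|^2 / (d_k^T y_k)$ and $y_k = g_{k+1} - g_k$, paired with a bisection-based weak Wolfe–Powell line search. It is not the authors' algorithm: the four improved secant conditions are not specified in this abstract, so the `modified_secant` option is a hypothetical illustration that swaps $y_k$ for the Zhang–Deng–Chen vector $y_k + (\theta_k/\|s_k\|^2) s_k$ with $\theta_k = 6(f_k - f_{k+1}) + 3(g_k + g_{k+1})^T s_k$. The function names, the parameters $c_1 = 10^{-4}$ and $c_2 = 0.1$, and the restart safeguards are likewise assumptions.

```python
import math
from typing import Callable, List, Tuple

Vec = List[float]
Objective = Callable[[Vec], float]
Gradient = Callable[[Vec], Vec]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def axpy(alpha: float, x: Vec, y: Vec) -> Vec:
    """Return alpha * x + y componentwise."""
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def weak_wolfe(f: Objective, grad: Gradient, x: Vec, d: Vec,
               c1: float = 1e-4, c2: float = 0.1, max_iter: int = 60) -> float:
    """Bracketing/bisection search for a step a satisfying the weak Wolfe-Powell conditions
    f(x + a d) <= f(x) + c1 a g^T d  and  grad(x + a d)^T d >= c2 g^T d  (0 < c1 < c2 < 1)."""
    lo, hi, a = 0.0, math.inf, 1.0
    fx, gd = f(x), dot(grad(x), d)
    for _ in range(max_iter):
        x_new = axpy(a, d, x)
        if f(x_new) > fx + c1 * a * gd:        # sufficient decrease fails: step too long
            hi = a
        elif dot(grad(x_new), d) < c2 * gd:    # curvature condition fails: step too short
            lo = a
        else:
            return a
        a = 2.0 * lo if math.isinf(hi) else 0.5 * (lo + hi)
    return a  # best effort; dai_yuan_cg restarts if this step is unusable


def dai_yuan_cg(f: Objective, grad: Gradient, x0: Vec, tol: float = 1e-6,
                max_iter: int = 5000, modified_secant: bool = False) -> Tuple[Vec, int]:
    """Classic Dai-Yuan CG; modified_secant=True is a HYPOTHETICAL illustrative variant."""
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= tol:
            return x, k
        alpha = weak_wolfe(f, grad, x, d)
        x_new = axpy(alpha, d, x)
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]       # s_k = x_{k+1} - x_k
        y = [a - b for a, b in zip(g_new, g)]       # y_k = g_{k+1} - g_k
        ss = dot(s, s)
        if modified_secant and ss > 0.0:
            # Hypothetical: Zhang-Deng-Chen vector y_k + (theta_k / ||s_k||^2) s_k
            theta = 6.0 * (f(x) - f(x_new)) + 3.0 * dot(axpy(1.0, g, g_new), s)
            y = axpy(theta / ss, s, y)
        denom = dot(d, y)  # classic DY: > 0 whenever the weak Wolfe conditions hold
        beta = dot(g_new, g_new) / denom if denom > 0.0 else 0.0  # 0 => restart
        d = axpy(beta, d, [-gi for gi in g_new])    # d_{k+1} = -g_{k+1} + beta_k d_k
        if dot(g_new, d) >= 0.0:                    # safeguard: restart with steepest descent
            d = [-gi for gi in g_new]
        x, g = x_new, g_new
    return x, max_iter


if __name__ == "__main__":
    # Example: a convex quadratic with minimizer (1, -2)
    f = lambda v: (v[0] - 1.0) ** 2 + 10.0 * (v[1] + 2.0) ** 2
    grad = lambda v: [2.0 * (v[0] - 1.0), 20.0 * (v[1] + 2.0)]
    print(dai_yuan_cg(f, grad, [0.0, 0.0], tol=1e-7))
```

Under the weak Wolfe conditions, $g_{k+1}^T d_k \ge c_2 g_k^T d_k$ gives $d_k^T y_k \ge (c_2 - 1) g_k^T d_k > 0$ for any descent direction, so the restart branch should only fire for the hypothetical variant or a failed line search. On a quadratic objective $\theta_k = 0$ in exact arithmetic, so that variant reproduces classic DY there; the two differ only on non-quadratic functions.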

Acknowledgements

The authors would like to thank the anonymous reviewers for their helpful suggestions and valuable comments.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bojari, S., Eslahchi, M.R. Some improved Dai–Yuan conjugate gradient methods for large-scale unconstrained optimization problems. J. Appl. Math. Comput. 69, 4213–4228 (2023). https://doi.org/10.1007/s12190-023-01918-z

DOI: https://doi.org/10.1007/s12190-023-01918-z

Keywords

- Optimization

- Large-scale problems

- Conjugate gradient method

- Weak-Wolfe–Powell line search technique

- Dai–Yuan method