Abstract

The AVLaughterCycle project aims to develop an audiovisual laughing machine that can detect and respond to users’ laughs. Laughter is an important cue for reinforcing engagement in human-computer interaction. As a first step toward this goal, we have implemented a system that records a user’s laugh and responds to it with a similar laugh. The output laugh is automatically selected from an audiovisual laughter database by analyzing its acoustic similarity to the input laugh. It is displayed by an Embodied Conversational Agent (ECA) animated with the audio-synchronized facial movements of the subject who originally produced the laugh. The application is fully implemented and runs in real time, and a large audiovisual laughter database has been recorded as part of the project.
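
The abstract does not state which acoustic features or distance the similarity search uses. As a hedged illustration only, the sketch below assumes that every laugh has already been summarized as a fixed-length acoustic feature vector, z-normalizes each feature over the database, and returns the nearest stored laugh by Euclidean distance. The function names, the `laugh_*` ids and the two-dimensional toy vectors are hypothetical; this is not the project's actual implementation.

```python
# Hypothetical similarity-search sketch; the project's real features and distance may differ.
import math
from typing import Dict, List, Sequence, Tuple


def zscore_stats(vectors: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-dimension mean and (population) standard deviation over the database."""
    n, dims = len(vectors), len(vectors[0])
    means = [sum(v[d] for v in vectors) / n for d in range(dims)]
    stds = []
    for d in range(dims):
        var = sum((v[d] - means[d]) ** 2 for v in vectors) / n
        stds.append(math.sqrt(var) or 1.0)  # a constant feature would give 0; use 1 instead
    return means, stds


def normalize(v: Sequence[float], means: List[float], stds: List[float]) -> List[float]:
    """Z-score one feature vector so that no single feature dominates the distance."""
    return [(x - m) / s for x, m, s in zip(v, means, stds)]


def most_similar_laugh(query: Sequence[float], database: Dict[str, Sequence[float]]) -> str:
    """Return the id of the stored laugh nearest to the query laugh.

    Assumed setup: query and database laughs are fixed-length acoustic
    feature vectors; similarity is the Euclidean distance between z-scored vectors.
    """
    if not database:
        raise ValueError("empty laughter database")
    means, stds = zscore_stats(list(database.values()))
    q = normalize(query, means, stds)
    best_id, best_dist = "", math.inf
    for laugh_id, feats in database.items():
        dist = math.dist(q, normalize(feats, means, stds))
        if dist < best_dist:
            best_id, best_dist = laugh_id, dist
    return best_id
```

For example, with the hypothetical database `{"laugh_a": [0.2, 110.0], "laugh_b": [0.8, 240.0], "laugh_c": [0.5, 180.0]}`, the query `[0.75, 230.0]` selects `"laugh_b"`. Normalizing first keeps a feature with a large numeric range (such as a pitch value in Hz) from dominating the distance.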

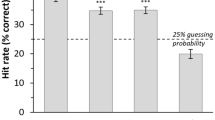

This paper presents AVLaughterCycle, its underlying components, the freely available laughter database and the application architecture. The paper also includes evaluations of several core components of the application. Objective tests show that the similarity search engine, though simple, significantly outperforms chance at grouping laughs by speaker or by type. This result can be considered a first reference point for computing acoustic similarities between laughs. A subjective evaluation has also been conducted to measure the influence of visual cues on users’ judgments of similarity between laughs.
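
The abstract does not detail the objective test protocol. One simple way to frame "grouping laughs by speaker or type better than chance", offered here only as an assumed illustration and not as the procedure reported in the paper, is leave-one-out nearest-neighbour label agreement compared with the agreement expected at random, plus a label-permutation test for significance. The function names, labels and toy data are hypothetical.

```python
# Hypothetical evaluation sketch; not the protocol reported in the paper.
import math
import random
from typing import Dict, Sequence


def nn_label_agreement(features: Sequence[Sequence[float]], labels: Sequence[str]) -> float:
    """Leave-one-out: fraction of laughs whose nearest *other* laugh shares their label."""
    hits = 0
    for i, fi in enumerate(features):
        # Nearest neighbour among all other laughs (Euclidean distance).
        j = min((k for k in range(len(features)) if k != i),
                key=lambda k: math.dist(fi, features[k]))
        hits += int(labels[i] == labels[j])
    return hits / len(features)


def chance_agreement(labels: Sequence[str]) -> float:
    """Expected agreement if each laugh's 'neighbour' were picked uniformly at random."""
    n = len(labels)
    counts: Dict[str, int] = {}
    for lab in labels:
        counts[lab] = counts.get(lab, 0) + 1
    # A laugh with label L shares it with (counts[L] - 1) of the n - 1 other laughs.
    return sum((counts[lab] - 1) / (n - 1) for lab in labels) / n


def permutation_p_value(features, labels, n_perm: int = 1000, seed: int = 0) -> float:
    """One-sided permutation test: how often do shuffled labels agree at least as well?"""
    rng = random.Random(seed)
    observed = nn_label_agreement(features, labels)
    shuffled = list(labels)
    at_least = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if nn_label_agreement(features, shuffled) >= observed:
            at_least += 1
    return (at_least + 1) / (n_perm + 1)  # add-one correction keeps p > 0
```

With four hypothetical laughs at `[0, 0]`, `[0.1, 0]`, `[5, 5]` and `[5.1, 5]` labelled `spk1, spk1, spk2, spk2`, the nearest-neighbour agreement is 1.0 against a chance level of 1/3. With only four items, however, the permutation p-value stays around 1/3, so a meaningful significance claim needs a corpus of realistic size.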

References

Bartlett MS, Littlewort GC, Frank MG, Lainscsek C, Fasel IR, Movellan JR (2006) Automatic recognition of facial actions in spontaneous expression. J Multimed 1(6):22–35

Becker-Asano C, Ishiguro H (2009) Laughter in social robotics—no laughing matter. In: Intl workshop on social intelligence design (SID2009), pp 287–300

Berk L, Tan S, Napier B, Eby W (1989) Eustress of mirthful laughter modifies natural killer cell activity. Clin Res 37(1):115A

Cantoche (2010) http://www.cantoche.com/

Cassell J, Bickmore T, Billinghurst M, Campbell L, Chang K, Vilhjálmsson H, Yan H (1999) Embodiment in conversational interfaces: Rea. In: Proceedings of the CHI’99 conference. ACM, New York, pp 520–527

Curio C, Breidt M, Kleiner M, Vuong QC, Giese MA, Bülthoff HH (2006) Semantic 3D motion retargeting for facial animation. In: APGV’06: Proceedings of the 3rd symposium on applied perception in graphics and visualization. ACM, New York, pp 77–84

DiLorenzo PC, Zordan VB, Sanders BL (2008) Laughing out loud. In: SIGGRAPH’08: ACM SIGGRAPH 2008. ACM, New York

Dupont S, Dubuisson T, Urbain J, Frisson C, Sebbe R, D’Alessandro N (2009) Audiocycle: browsing musical loop libraries. In: Proc of IEEE content based multimedia indexing conference (CBMI09)

Haptek (2010) http://www.haptek.com/

Heylen D, Kopp S, Marsella S, Pelachaud C, Vilhjálmsson H (2008) Why conversational agents do what they do? Functional representations for generating conversational agent behavior. In: The first functional markup language workshop, Estoril, Portugal

Janin A, Baron D, Edwards J, Ellis D, Gelbart D, Morgan N, Peskin B, Pfau T, Shriberg E, Stolcke A, Wooters C (2003) The ICSI meeting corpus. In: 2003 IEEE international conference on acoustics, speech, and signal processing (ICASSP), Hong Kong

Knox MT, Mirghafori N (2007) Automatic laughter detection using neural networks. In: Proceedings of interspeech 2007, Antwerp, Belgium, pp 2973–2976

Kopp S, Jung B, Leßmann N, Wachsmuth I (2003) Max—a multimodal assistant in virtual reality construction. Künstl Intell 17(4):11–18

Lasarcyk E, Trouvain J (2007) Imitating conversational laughter with an articulatory speech synthesis. In: Proceedings of the interdisciplinary workshop on the phonetics of laughter, Saarbrücken, Germany, pp 43–48

Natural Point, Inc (2009) Optitrack—optical motion tracking solutions. http://www.naturalpoint.com/optitrack/

Niewiadomski R, Bevacqua E, Mancini M, Pelachaud C (2009) Greta: an interactive expressive ECA system. In: Sierra C, Castelfranchi C, Decker KS, Sichman JS (eds) 8th international joint conference on autonomous agents and multiagent systems (AAMAS 2009), IFAAMAS, Budapest, Hungary, 10–15 May 2009, vol 2, pp 1399–1400

Nijholt A (2002) Embodied agents: a new impetus to humor research. In: Proc Twente workshop on language technology 20 (TWLT 20), pp 101–111

Ostermann J (2002) Face animation in MPEG-4. In: Pandzic IS, Forchheimer R (eds) MPEG-4 facial animation—the standard implementation and applications. Wiley, New York, pp 17–55

Pantic M, Bartlett MS (2007) Machine analysis of facial expressions. In: Delac K, Grgic M (eds) Face recognition. I-Tech Education and Publishing, Vienna, pp 377–416

Parke FI (1982) Parameterized models for facial animation. IEEE Comput Graph Appl 2(9):61–68

Peeters G (2004) A large set of audio features for sound description (similarity and classification) in the CUIDADO project. Tech rep, Institut de Recherche et Coordination Acoustique/Musique (IRCAM)

Petridis S, Pantic M (2009) Is this joke really funny? Judging the mirth by audiovisual laughter analysis. In: Proceedings of the IEEE international conference on multimedia and expo, New York, USA, pp 1444–1447

Pighin F, Hecker J, Lischinski D, Szeliski R, Salesin DH (1998) Synthesizing realistic facial expressions from photographs. In: SIGGRAPH’98. ACM, New York, pp 75–84

Ruch W, Ekman P (2001) The expressive pattern of laughter. In: Kaszniak A (ed) Emotion, qualia and consciousness. World Scientific, Singapore, pp 426–443

Savran A, Sankur B (2009) Automatic detection of facial actions from 3D data. In: Proceedings of the IEEE 12th international conference on computer vision workshops (ICCV workshops), Kyoto, Japan, pp 1993–2000

Schröder M (2003) Experimental study of affect bursts. Speech Commun 40(1–2):99–116

Siebert X, Dupont S, Fortemps P, Tardieu D (2009) MediaCycle: browsing and performing with sound and image libraries. In: Dutoit T, Macq B (eds) QPSR of the numediart research program, Numediart research program on digital art technologies, vol 2, pp 19–22

Skype Communications S.à r.l. (2009) The Skype laughter chain. http://www.skypelaughterchain.com/

Stoiber N, Seguier R, Breton G (2010) Facial animation retargeting and control based on a human appearance space. J Vis Comput Animat 21(1):39–54

Strommen E, Alexander K (1999) Emotional interfaces for interactive aardvarks: designing affect into social interfaces for children. In: Proceedings of ACM CHI’99, pp 528–535

Sundaram S, Narayanan S (2007) Automatic acoustic synthesis of human-like laughter. J Acoust Soc Am 121(1):527–535

Thórisson KR, List T, Pennock C, DiPirro J (2005) Whiteboards: scheduling blackboards for semantic routing of messages & streams. In: Thórisson KR, Vilhjálmsson H, Marsella S (eds) Proc of AAAI-05 workshop on modular construction of human-like intelligence, Pittsburgh, Pennsylvania, pp 8–15

Trouvain J (2003) Segmenting phonetic units in laughter. In: Proceedings of the 15th international congress of phonetic sciences, Barcelona, Spain, pp 2793–2796

Truong KP, van Leeuwen DA (2007) Automatic discrimination between laughter and speech. Speech Commun 49(2):144–158

Truong KP, van Leeuwen DA (2007) Evaluating automatic laughter segmentation in meetings using acoustic and acoustic-phonetic features. In: Proceedings of the interdisciplinary workshop on the phonetics of laughter, Saarbrücken, Germany, pp 49–53

Urbain J, Bevacqua E, Dutoit T, Moinet A, Niewiadomski R, Pelachaud C, Picart B, Tilmanne J, Wagner J (2010) AVLaughterCycle: an audiovisual laughing machine. In: Camurri A, Mancini M, Volpe G (eds) Proceedings of the 5th international summer workshop on multimodal interfaces (eNTERFACE’09). DIST-University of Genova, Genova

Urbain J, Bevacqua E, Dutoit T, Moinet A, Niewiadomski R, Pelachaud C, Picart B, Tilmanne J, Wagner J (2010) The AVLaughterCycle database. In: Proceedings of the seventh conference on international language resources and evaluation (LREC’10), European Language Resources Association (ELRA), Valletta, Malta

Vilhjálmsson H, Cantelmo N, Cassell J, Chafai NE, Kipp M, Kopp S, Mancini M, Marsella S, Marshall AN, Pelachaud C, Ruttkay Z, Thórisson KR, van Welbergen H, van der Werf R (2007) The behavior markup language: recent developments and challenges. In: 7th international conference on intelligent virtual agents, Paris, France, pp 99–111

Wagner J, André E, Jung F (2009) Smart sensor integration: a framework for multimodal emotion recognition in real-time. In: Affective computing and intelligent interaction (ACII 2009), Amsterdam, The Netherlands, pp 1–8

Zhang W, Wang Q, Tang X (2009) Performance driven face animation via non-rigid 3D tracking. In: MM ’09: proceedings of the seventeenth ACM international conference on multimedia. ACM, New York, pp 1027–1028

Zign Creations (2009) Zign Track. http://www.zigncreations.com/zigntrack.html

Additional information

Portions of this work were presented in the Proceedings of eNTERFACE’09 [36].

Cite this article

Urbain, J., Niewiadomski, R., Bevacqua, E. et al. AVLaughterCycle. J Multimodal User Interfaces 4, 47–58 (2010). https://doi.org/10.1007/s12193-010-0053-1
