Abstract

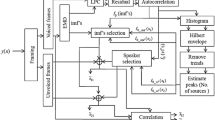

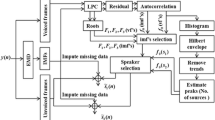

Multi-channel speech separation (SS) denotes the extraction of a target speaker's speech from multi-channel recordings in which several speakers talk simultaneously. So far, the use of visual modalities for multi-channel speech separation has shown great potential. This article addresses the separation of multiple signals from their superposition recorded at several sensors. To overcome the drawbacks of existing methods, it proposes a novel hybrid method that combines enthalpy-based direction of arrival (DOA) estimation, krill herd-based matrix factorization (KHMF), and a score-based convolutional neural network (SCNN) to separate multi-channel speech signals. First, the short-time Fourier transform (STFT) of the input signal is computed. Then, the tracking branch calculates the enthalpy of the analyzed signal, where enthalpy is the DOA-based spatial energy in each time frame. A Gaussian mixture model (GMM), which estimates the enthalpy function at each time frame, converts the spatial energy histogram into DOA measurements. Based on the output of the signal tracker, an enthalpy-based spatial covariance matrix model with DOA parameters is determined. Multi-channel KHMF then estimates the spatial behavior of each source over time and the spectral model of the source from the tracked direction. Next, according to the spatial direction of the target speaker, features such as directivity and spatial features are extracted, and the SCNN is used for relation masking. The inverse STFT (iSTFT) converts the resulting speech spectrogram back to the extracted output signal. Experimental results show that the proposed approach achieves the best SDR diff at −5 dB, 8.1. This is comparable to CTF-MINT, which achieves 8.05. CTF-MPDR and CTF-BP score lower, with SDR diffs of 7.71 and 7.4, respectively, and the unprocessed signal (Unproc) scores lowest at 5.71.
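The outer stages of the pipeline described above (STFT, masking in the time-frequency domain, inverse STFT) can be illustrated with a minimal single-channel sketch. It is not the authors' implementation: the DOA tracking, KHMF, and SCNN stages are omitted, and an oracle ratio mask computed from known sources stands in for the mask that the SCNN would estimate. All function names, the sampling rate, the frame sizes, and the test tones are assumptions chosen for illustration.

```python
import numpy as np

def stft(x, frame_len=256, hop=128):
    """STFT with a periodic Hann window at 50% overlap (illustrative settings)."""
    # Periodic Hann: copies shifted by frame_len / 2 sum exactly to 1.
    window = np.hanning(frame_len + 1)[:-1]
    # Zero-pad both ends so every input sample is covered by two frames.
    xp = np.concatenate([np.zeros(frame_len), x, np.zeros(frame_len)])
    n_frames = 1 + (len(xp) - frame_len) // hop
    frames = np.stack(
        [xp[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.fft.rfft(frames, axis=1)  # shape: (frames, frequency bins)

def istft(spec, length, frame_len=256, hop=128):
    """Inverse STFT by overlap-add; inverts stft() above for unmodified spectra."""
    frames = np.fft.irfft(spec, n=frame_len, axis=1)
    out = np.zeros(hop * (len(frames) - 1) + frame_len)
    for i, frame in enumerate(frames):
        out[i * hop : i * hop + frame_len] += frame
    return out[frame_len : frame_len + length]  # drop the zero padding

def sdr_db(reference, estimate):
    """Plain signal-to-distortion ratio in dB (no allowed-distortion filters)."""
    distortion = reference - estimate
    return 10 * np.log10(np.sum(reference**2) / np.sum(distortion**2))

def demo(fs=8000, duration=0.5):
    """Separate a 440 Hz tone from a 2 kHz interferer with an oracle mask."""
    t = np.arange(int(fs * duration)) / fs
    target = np.sin(2 * np.pi * 440 * t)
    interferer = 0.8 * np.sin(2 * np.pi * 2000 * t)
    mixture = target + interferer
    S, N, X = stft(target), stft(interferer), stft(mixture)
    # Oracle ratio mask: a stand-in for the mask a trained network would predict.
    mask = np.abs(S) / (np.abs(S) + np.abs(N) + 1e-12)
    estimate = istft(mask * X, len(mixture))
    return sdr_db(target, mixture), sdr_db(target, estimate)

if __name__ == "__main__":
    sdr_mix, sdr_est = demo()
    print(f"SDR of mixture: {sdr_mix:.2f} dB, SDR after masking: {sdr_est:.2f} dB")
```

Because the oracle mask uses the clean sources, this sketch only shows how a mask is applied and inverted. In the proposed method the mask would instead come from the SCNN driven by the directional and spatial features.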

Funding

This research has not been funded by anyone.

Ethics declarations

Conflict of interest

None of the authors has any conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Koteswararao, Y.V., Rao, C.B.R. Multichannel KHMF for speech separation with enthalpy based DOA and score based CNN (SCNN). Evolving Systems 14, 501–518 (2023). https://doi.org/10.1007/s12530-022-09473-x

DOI: https://doi.org/10.1007/s12530-022-09473-x