Abstract

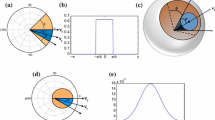

This paper proposes a learner-independent multi-task learning (MTL) scheme in which knowledge transfer (KT) is carried out independently of the learner. In the proposed KT approach, minimum enclosing balls (MEBs) serve as knowledge carriers that extract knowledge from one task and transfer it to another. Because the knowledge represented by an MEB can be decomposed into raw data, it can be incorporated into any learner as additional training data for a new learning task to improve the learning rate. The effectiveness and robustness of the proposed KT are evaluated on multi-task pattern recognition problems derived from synthetic datasets, UCI datasets, and real face image datasets, using classifiers from different disciplines as the MTL learners. The experimental results show that multi-task learners using KT via MEB carriers perform better than learners without KT, and that the approach has been applied successfully to classifiers as different as k-nearest neighbor (kNN) and support vector machines (SVMs).
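The central mechanism above (an MEB summarizes a class, and is then decomposed back into raw samples that any learner accepts as extra training data) can be illustrated with a small sketch. It is not the authors' algorithm and rests on stated assumptions: the MEB is approximated with the Bădoiu–Clarkson iteration, "decomposing" a ball means returning the raw samples it encloses, and the rule for which source balls get transferred (same label, overlapping a target-task ball) is purely hypothetical. All function names (`approx_meb`, `class_balls`, `transfer`, `knn_predict`) are illustrative, and the code needs Python 3.8+ for `math.dist`.

```python
import math
from collections import Counter


def approx_meb(points, eps=0.1):
    """(1+eps)-approximate MEB via the Badoiu-Clarkson iteration.

    Each step moves the centre 1/(i+1) of the way toward the current farthest
    point; about 1/eps**2 steps give a radius within (1+eps) of optimal.
    """
    centre = list(points[0])
    for i in range(1, math.ceil(1.0 / eps ** 2) + 1):
        far = max(points, key=lambda p: math.dist(centre, p))
        centre = [c + (f - c) / (i + 1) for c, f in zip(centre, far)]
    # Radius is the true max distance, so every point is enclosed exactly.
    radius = max(math.dist(centre, p) for p in points)
    return centre, radius


def class_balls(X, y, eps=0.1):
    """One MEB per class label; each ball keeps the raw samples it encloses."""
    balls = []
    for label in sorted(set(y)):
        members = [x for x, t in zip(X, y) if t == label]
        centre, radius = approx_meb(members, eps)
        balls.append({"label": label, "centre": centre,
                      "radius": radius, "members": members})
    return balls


def transfer(source_balls, target_balls):
    """HYPOTHETICAL transfer rule: accept a source ball if it intersects a
    target ball with the same label, then decompose it into raw samples."""
    X_extra, y_extra = [], []
    for s in source_balls:
        for t in target_balls:
            same_label = s["label"] == t["label"]
            overlap = math.dist(s["centre"], t["centre"]) <= s["radius"] + t["radius"]
            if same_label and overlap:
                X_extra.extend(s["members"])
                y_extra.extend([s["label"]] * len(s["members"]))
                break
    return X_extra, y_extra


def knn_predict(X_train, y_train, query, k=3):
    """Plain k-nearest-neighbour majority vote; it knows nothing about MEBs."""
    nearest = sorted(zip(X_train, y_train),
                     key=lambda xy: math.dist(xy[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Toy data (illustrative only): the target task has two samples per class.
X_src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0), (5.0, 6.0)]
y_src = ["a", "a", "a", "b", "b", "b"]
X_tgt = [(0.5, 0.5), (1.5, 1.0), (5.5, 5.5), (4.5, 5.0)]
y_tgt = ["a", "a", "b", "b"]

X_extra, y_extra = transfer(class_balls(X_src, y_src), class_balls(X_tgt, y_tgt))
X_aug, y_aug = X_tgt + X_extra, y_tgt + y_extra
print(len(X_aug), knn_predict(X_aug, y_aug, (0.2, 0.8), k=3))  # prints: 10 a
```

Because `transfer` returns nothing but ordinary (sample, label) pairs, `knn_predict` could be replaced by any other classifier, such as an SVM, without touching the transfer step; this is the sense in which the abstract calls the KT learner-independent.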

Cite this article

Pang, S., Liu, F., Kadobayashi, Y. et al. A Learner-Independent Knowledge Transfer Approach to Multi-task Learning. Cogn Comput 6, 304–320 (2014). https://doi.org/10.1007/s12559-013-9238-8

DOI: https://doi.org/10.1007/s12559-013-9238-8