Abstract

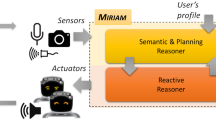

A service robot should be able to detect and identify the user in order to personalize its services and guarantee security; it should recognize the user’s emotions to allow affective interaction; and it should communicate easily with the user and understand given commands by recognizing speech and gestures. Our research is motivated by emerging needs in areas such as elderly care, health care, safety, and logistics. We have developed a distributed system for affective human–robot interaction (HRI) that combines all of these basic sensory elements, can collaborate with a smart environment, and can obtain further knowledge from the Internet. The resulting HRI robot, Minotaurus, runs in real time and is capable of interacting with human beings. Minotaurus forms a fairly generic platform for experimenting with human–robot collaboration in different applications and environments.

Acknowledgments

We would like to thank the European Regional Fund and the Academy of Finland for providing financial support. We are also grateful for the contributions of Janne Haverinen, Jaakko Suutala, Ilari Vallivaara, Juha Ylioinas, Guoying Zhao, and Ziheng Zhou.

Cite this article

Röning, J., Holappa, J., Kellokumpu, V. et al. Minotaurus: A System for Affective Human–Robot Interaction in Smart Environments. Cogn Comput 6, 940–953 (2014). https://doi.org/10.1007/s12559-014-9285-9

DOI: https://doi.org/10.1007/s12559-014-9285-9